GPT-4.5 Sucks And That's Okay

Why OpenAI's new model will disappoint a lot of its users—and how that might change over time

OpenAI just unveiled GPT-4.5, positioning it as their "largest and best model for chat yet" with scaled-up pre-training and post-training capabilities. While the release is already generating significant buzz, a closer examination reveals a model that may not justify its premium price tag for many users—yet still represents an important stepping stone toward truly revolutionary AI capabilities.

The Reality Behind the Hype

GPT-4.5 arrives with substantial promises: broader knowledge, improved user intent understanding, reduced hallucinations, and greater "emotional intelligence." OpenAI markets it as a significant advancement in unsupervised learning that makes interactions feel more natural. However, when we examine the data provided so far against the model’s considerable cost of $75/$150 per 1M input/output tokens, one cannot help but feel underwhelmed.

But before we dive into the model’s shortcomings, let’s talk about a few of its highlights first. Because they do exist:

Hallucination reduction: 19% hallucination rate on PersonQA (vs. 52% for GPT-4o and 20% for o1)

Multilingual performance: Consistent improvements across 14 languages on the MMLU benchmark

Persuasion capabilities: Highest success rate at receiving payments in the MakeMePay evaluation (57%)

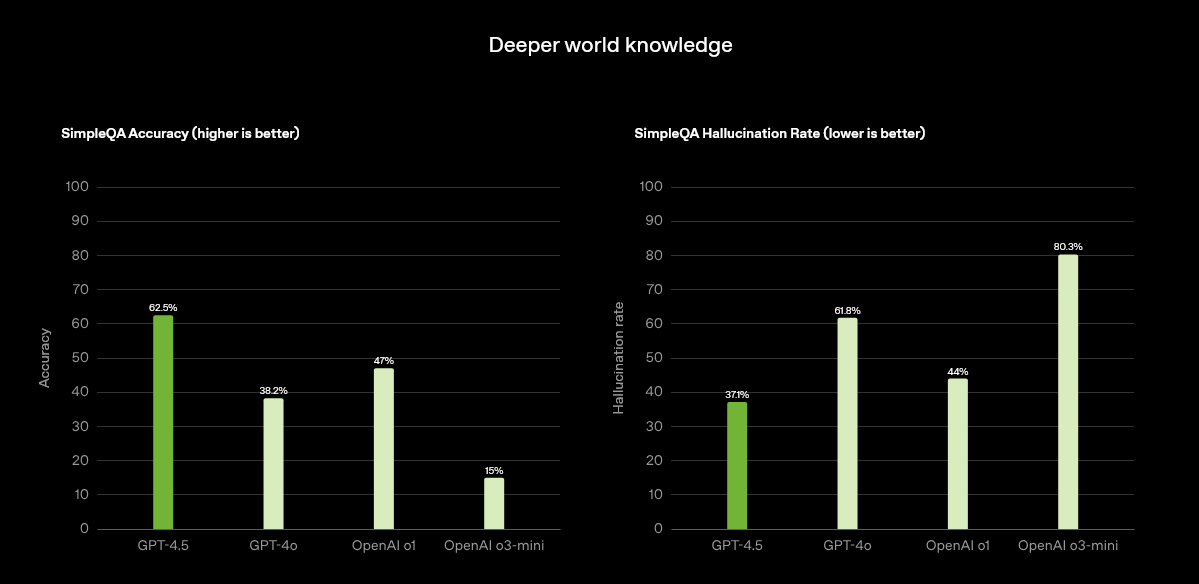

If we only looked at the benchmarks they provided in their announcement, we might think that GPT-4.5 is quite potent. Take the chart below, for example, where the model easily leaves o3-mini in the dust.

OpenAI has picked their examples very carefully, it seems.

Incremental Rather Than Revolutionary Improvements

Despite being marketed as their "most advanced model", GPT-4.5 represents more of an iterative improvement than a paradigm shift. This much was to be expected, however, since it was already known that the model would operate within the same fundamental architectural constraints as its predecessors. OpenAI explicitly acknowledges that GPT-4.5 "doesn't think before it responds", which means it lacks the deliberate reasoning capabilities that other models have been exhibiting recently.

Prohibitive Pricing Structure

The most glaring issue with GPT-4.5 is its cost. It’s "a very large and compute-intensive model, making it more expensive than and not a replacement for GPT-4o." This positions GPT-4.5 as a premium product that may be inaccessible to many developers and organizations with limited budgets. For businesses carefully calculating their AI costs, the marginal benefits might not justify the price increase compared to more cost-effective alternatives like Gemini-2, DeepSeek-R1, or even GPT-4o.
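To make the price tag concrete, here is a minimal back-of-the-envelope sketch in Python. It uses only the $75/$150 per 1M tokens quoted above and assumes the first figure applies to input tokens and the second to output tokens. The function names and the example workload (2,000-token prompts, 500-token answers, 1,000 requests a day) are hypothetical and chosen purely for illustration.

```python
# Prices in USD per 1M tokens, as quoted in this article ($75/$150).
# Assumption: the first figure is the input price, the second the output price.
GPT_45_INPUT_PER_M = 75.0
GPT_45_OUTPUT_PER_M = 150.0


def request_cost(input_tokens: int, output_tokens: int,
                 input_per_m: float = GPT_45_INPUT_PER_M,
                 output_per_m: float = GPT_45_OUTPUT_PER_M) -> float:
    """Return the cost in USD of one request at the given per-1M-token prices."""
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 days: int = 30) -> float:
    """Estimate a monthly bill for a steady, hypothetical workload."""
    return requests_per_day * days * request_cost(input_tokens, output_tokens)


if __name__ == "__main__":
    # Hypothetical workload: 2,000-token prompts and 500-token answers.
    print(f"per request: ${request_cost(2_000, 500):.3f}")
    print(f"per month at 1,000 requests/day: ${monthly_cost(1_000, 2_000, 500):,.2f}")
```

To compare against a cheaper model, pass that model's per-1M prices as `input_per_m` and `output_per_m` to `request_cost`; the arithmetic is the same, only the rates change.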

Lack of Features

Despite its flagship status and premium price, GPT-4.5 notably lacks support for several advanced features. OpenAI admits the model does not currently support multimodal features like Voice Mode, video, and screensharing in ChatGPT. This creates an awkward situation where users are paying more for a model that, in some respects, does less than existing offerings.

Why GPT-4.5 Still Matters

Despite these legitimate criticisms, the development and release of GPT-4.5 plays a crucial role in the advancement of AI capabilities. Here's why its existence—even with its limitations—is important for the field:

OpenAI itself frames GPT-4.5 as advancing one of "two complementary paradigms: unsupervised learning and reasoning." And seeing what they have achieved with their o-series (o1 & o3), there’s reason to believe them. If a robust foundation is what they were going for, the focus on unsupervised learning would make sense, and it would also explain the less-than-overwhelming performance on downstream tasks.

By developing two complementary approaches in parallel, OpenAI is trying to build the foundation for future systems that will eventually combine both strengths. As they note: "We believe reasoning will be a core capability of future models, and that the two approaches to scaling—pre-training and reasoning—will complement each other." Whether this is something they came up with after realizing that GPT-4.5 would turn out the way it did, or whether it was their plan all along, only they can tell. So let’s give them the benefit of the doubt until further evidence emerges, and continue following their argument in good faith.

The Necessary Data Foundation for Tool Use

One of the most promising frontiers in AI development certainly is the creation of systems that can effectively use tools and interact with the outside world. This capability requires both a rich understanding of the world (where GPT-4.5 excels) and the ability to plan and reason through complex processes (where reasoning models excel). GPT-4.5's extensive pre-training on massive datasets creates a knowledge foundation that future reasoning-focused models can leverage.

Another important point is that GPT-4.5 serves as a testing ground for alignment techniques. OpenAI notes that they've implemented "new techniques for supervision that are combined with traditional supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) methods" with this release. These alignment experiments with increasingly powerful models are essential for developing the safety protocols that will be required for truly transformative AI systems. Each iteration provides valuable data on how to make models that are not just capable, but also aligned with human values and intent.

The Real Value Proposition

The true significance of GPT-4.5 might not be in its immediate practical applications but rather in what it represents for the field's progress. For organizations considering whether to invest in GPT-4.5, the decision should depend on specific use cases. OpenAI suggests applications that benefit from "higher emotional intelligence and creativity—such as writing help, communication, learning, coaching, and brainstorming", a claim I can neither confirm nor deny yet. But with how expensive their API is, I can hardly see GPT-4.5 performing so much better than Claude-3.7 that it justifies its use over the latter. I’m sure people will find edge cases though, so I would definitely advise everyone to at least give GPT-4.5 a try or two (depending on the volume of your use case, of course).

Looking Ahead: The Convergence of Paradigms

As discussed above, OpenAI’s win condition is essentially the convergence of their two parallel approaches: scaled unsupervised learning and sophisticated reasoning. Their strategy signals a belief that the future of AI lies not in choosing between these paradigms but in combining them. Future models will likely build upon the extensive “world knowledge” of systems like GPT-4.5 while incorporating the deliberate reasoning capabilities of models like o3. This convergence promises to yield AI systems that can both draw from vast knowledge and think through complex problems step by step, a combination that would represent a truly transformative advance.

Conclusion

GPT-4.5 may be underwhelming relative to its price for many practical applications today. It represents an incremental advancement in unsupervised learning at a premium cost, with limitations that may not justify the expense for many users. However, this apparent shortcoming doesn't diminish its importance in the bigger picture of AI development.

By scaling unsupervised learning while OpenAI advances reasoning capabilities through its other models, GPT-4.5 serves as a crucial building block for the more transformative AI systems of tomorrow. For those disappointed by GPT-4.5's value proposition, patience may be warranted. The real breakthrough will likely come when these complementary approaches finally converge, combining world knowledge with sophisticated reasoning abilities.

That future may not be as distant as it seems.

For further reading, here’s my previous article about Phi-4-Mini, Microsoft’s newest model release—which is the opposite of GPT-4.5: small & efficient.

Microsoft's Phi-4-Mini: Never Has Small Been This Good
