Microsoft's Phi-4-Mini: Never Has Small Been This Good

Everything you need to know about their latest AI advancements

Building language models that are both efficient and powerful has been a significant challenge in AI research. Despite the headline-grabbing capabilities of massive LLMs with hundreds of billions of parameters, there's growing interest in smaller models that keep impressive capabilities while running on far more modest hardware. Microsoft's latest entries in this space, Phi-4-Mini and Phi-4-Multimodal, are a notable step in this direction. In this article, we'll dive deep into the technical innovations that make them stand out, even among fierce competition.

The Phi Series: A Family of Compact Yet Powerful Models

Microsoft's Phi series, and in particular its recently released fourth generation, challenges the conventional wisdom that bigger is always better in language model development. These models demonstrate that with careful data curation, architectural optimizations, and strategic training approaches, even relatively small models can achieve impressive performance across language, vision, and speech tasks.

The newest Phi-4 release consists of two main models:

Phi-4-Mini: A 3.8-billion-parameter language model focused on text understanding and generation

Phi-4-Multimodal: An extension that integrates vision and speech/audio capabilities while preserving the core language abilities

What makes these models particularly interesting is how much performance they deliver for their size. Phi-4-Mini matches or exceeds models twice its size on certain tasks, especially mathematics and coding, while Phi-4-Multimodal delivers competitive multimodal capabilities despite its modest parameter count.

Model Architecture: Technical Foundations

Language Model Architecture

At its core, Phi-4-Mini employs a decoder-only Transformer architecture with several optimizations:

32 Transformer layers with a hidden state size of 3,072

Tied input/output embeddings to reduce memory consumption

Vocabulary expanded to 200,064 tokens (far larger than Phi-3.5-Mini's) to better support multilingual applications

Grouped-Query Attention (GQA) with 24 query heads and 8 key/value heads, shrinking the KV cache to one-third of its size under standard multi-head attention

LongRoPE positional encoding to support a 128K-token context length

Fractional RoPE dimension: rotary encoding covers only part of each attention head, leaving 25% of its dimensions position-agnostic for smoother long-context handling
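To make the numbers in this list concrete, here is a small Python sketch. It assumes the 3,072-wide hidden state is split evenly across the 24 query heads (giving 128 dimensions per head), maps each query head to the key/value head it shares under GQA, estimates KV-cache size assuming 2-byte (fp16/bf16) values, and applies rotary encoding to only the first 75% of a head's dimensions. The class and function names are hypothetical, and the rotation uses plain RoPE frequencies with interleaved pairs rather than LongRoPE's rescaled ones, so treat it as an illustration, not Phi-4-Mini's actual code.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AttentionConfig:
    # Values from the architecture list above.
    num_layers: int = 32
    hidden_size: int = 3072
    num_query_heads: int = 24
    num_kv_heads: int = 8
    rotary_fraction: float = 0.75  # 25% of each head's dims stay position-agnostic

    @property
    def head_dim(self) -> int:
        # Assumption: hidden size split evenly across query heads (3072 / 24 = 128).
        return self.hidden_size // self.num_query_heads

    @property
    def group_size(self) -> int:
        # Query heads sharing one key/value head under GQA (24 / 8 = 3).
        return self.num_query_heads // self.num_kv_heads

    @property
    def rotary_dims(self) -> int:
        # Dimensions per head that receive rotary encoding (96 of 128).
        return int(self.head_dim * self.rotary_fraction)

    def kv_head_for(self, query_head: int) -> int:
        # Query heads 0-2 read KV head 0, heads 3-5 read KV head 1, and so on.
        return query_head // self.group_size

    def kv_cache_bytes(self, seq_len: int, kv_heads: Optional[int] = None,
                       bytes_per_value: int = 2) -> int:
        # One K and one V tensor per layer; 2 bytes/value assumes fp16 or bf16.
        heads = self.num_kv_heads if kv_heads is None else kv_heads
        return 2 * self.num_layers * heads * self.head_dim * seq_len * bytes_per_value


def apply_partial_rope(vec: list[float], position: int, rotary_dims: int,
                       base: float = 10000.0) -> list[float]:
    """Rotate the first `rotary_dims` entries of one head vector in (2i, 2i+1)
    pairs and copy the remaining entries unchanged (plain RoPE frequencies,
    not LongRoPE's rescaled ones)."""
    out = list(vec)
    for i in range(0, rotary_dims, 2):
        angle = position * base ** (-i / rotary_dims)
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[i], vec[i + 1]
        out[i] = x * c - y * s
        out[i + 1] = x * s + y * c
    return out


cfg = AttentionConfig()
context = 131_072  # 128K tokens
gqa = cfg.kv_cache_bytes(context)
mha = cfg.kv_cache_bytes(context, kv_heads=cfg.num_query_heads)
print(gqa / 2**30, mha / 2**30)  # 16.0 48.0
```

Under these assumptions, a full 128K-token sequence needs 16 GiB of KV cache with 8 shared key/value heads, versus 48 GiB if all 24 heads kept their own keys and values, which is where the one-third figure comes from.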

The improved tokenizer deserves special mention, as it enables better multilingual and multimodal processing. The expanded vocabulary represents text in many languages more efficiently, which is essential for applications beyond English.
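The larger vocabulary is not free, and a quick calculation shows why tied embeddings matter. This minimal sketch assumes one 3,072-dimensional vector per vocabulary entry and 2-byte (bf16) weights; the helper name is hypothetical. The embedding matrix alone holds roughly 615 million parameters, about 16% of Phi-4-Mini's 3.8 billion, so letting the output projection reuse it instead of storing a second copy is a meaningful saving.

```python
VOCAB_SIZE = 200_064  # from the architecture list
HIDDEN_SIZE = 3_072   # from the architecture list
TOTAL_PARAMS = 3.8e9  # Phi-4-Mini's stated size (rounded)


def embedding_params(vocab_size: int, hidden_size: int) -> int:
    # One row of `hidden_size` weights per vocabulary entry.
    return vocab_size * hidden_size


emb = embedding_params(VOCAB_SIZE, HIDDEN_SIZE)
print(f"embedding matrix: {emb:,} parameters")                 # 614,596,608
print(f"share of 3.8B: {emb / TOTAL_PARAMS:.1%}")              # 16.2%
# An untied output projection would store another matrix of the same shape.
print(f"saved by tying (2 bytes/weight): {emb * 2 / 1e9:.2f} GB")  # 1.23 GB
```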

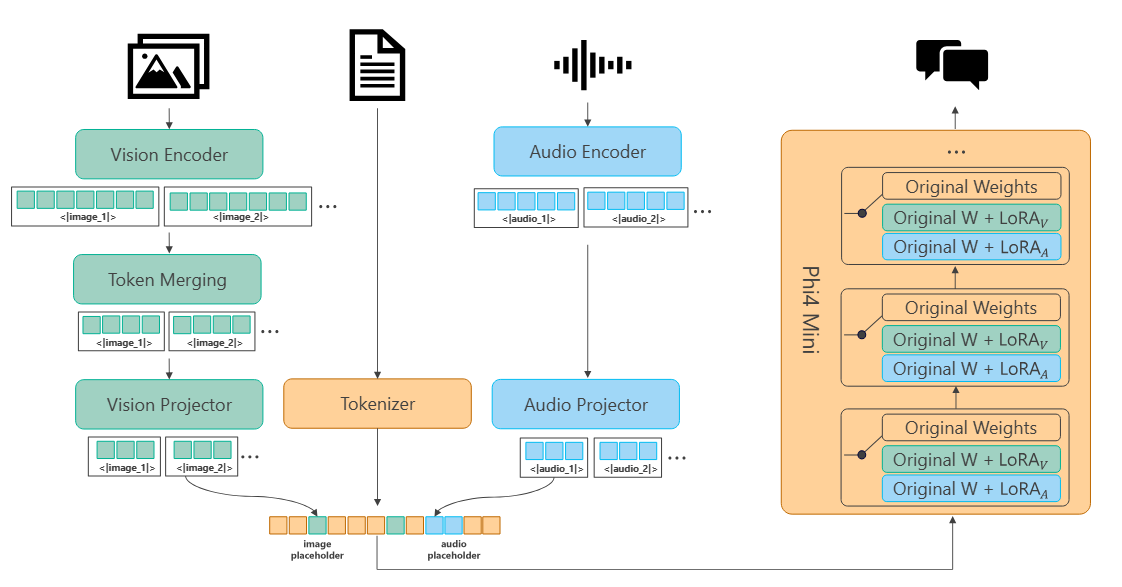

Multimodal Architecture: Mixture of LoRAs

Perhaps the most innovative aspect of Phi-4-Multimodal is its "Mixture of LoRAs" approach for handling multiple modalities. This technique preserves the original language model capabilities while extending functionality to vision and speech:

Vision Modality:

Image encoder based on SigLIP-400M (440M parameters)

Vision projector to align vision features with text embeddings

Vision adapter LoRA (370M parameters) for the language decoder

Speech/Audio Modality:

Audio encoder with conformer blocks (460M parameters)

Audio projector to map speech features to text embedding space

Audio adapter LoRA (460M parameters)
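Tallying the component sizes listed above gives a rough sense of what the multimodal extension adds on top of the 3.8B-parameter language model. This is simple arithmetic on the quoted figures; the projectors' sizes are not given here, so the result is a lower bound rather than an official parameter count.

```python
# Component sizes in millions of parameters, as listed above.
BASE_LM = 3_800
COMPONENTS = {
    "vision encoder (SigLIP-based)": 440,
    "vision adapter LoRA": 370,
    "audio encoder (conformer)": 460,
    "audio adapter LoRA": 460,
}

# Projector sizes are not listed, so the total below is a lower bound.
added = sum(COMPONENTS.values())
print(f"added by modalities: {added}M")                       # 1730M
print(f"lower bound on total: {(BASE_LM + added) / 1000:.2f}B")  # 5.53B
```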

The key trick here is that the base language model remains entirely frozen during multimodal training, with only the modality-specific components being updated. This prevents the interference and performance degradation that often occur when language models are fine-tuned for multimodal tasks.
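The frozen-base idea can be sketched with a toy linear layer. In this minimal illustration (the `LoRALinear` class and its methods are hypothetical, not Microsoft's code), the base weight `W` is never modified, each modality gets its own low-rank pair `A` and `B`, and the output is `W x + (alpha / r) * B (A x)`. Selecting the adapter by an explicit modality label is a simplifying assumption; in a real model, adapters like these sit inside the decoder's projection layers. Following the usual LoRA initialization, `B` starts at zero, so an untrained adapter leaves the base output unchanged.

```python
import random


def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    # Plain matrix-vector product.
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Frozen base weight plus one low-rank adapter per modality."""

    def __init__(self, weight: list[list[float]], rank: int = 2,
                 alpha: float = 4.0, seed: int = 0):
        self.weight = weight           # frozen: nothing below modifies it
        self.rank = rank
        self.scale = alpha / rank
        self.out_dim = len(weight)
        self.in_dim = len(weight[0])
        self.adapters = {}             # modality -> (A, B); only these would be trained
        self._rng = random.Random(seed)

    def add_adapter(self, modality: str) -> None:
        # A: rank x in_dim, small random values; B: out_dim x rank, zeros.
        a = [[self._rng.gauss(0.0, 0.01) for _ in range(self.in_dim)]
             for _ in range(self.rank)]
        b = [[0.0] * self.rank for _ in range(self.out_dim)]
        self.adapters[modality] = (a, b)

    def forward(self, x: list[float], modality: str = None) -> list[float]:
        y = matvec(self.weight, x)
        if modality is None:           # text-only input: base layer only
            return y
        a, b = self.adapters[modality]
        delta = matvec(b, matvec(a, x))
        return [yi + self.scale * di for yi, di in zip(y, delta)]


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]])
layer.add_adapter("vision")
layer.add_adapter("audio")
layer.adapters["vision"][1][0][0] = 1.0  # pretend training touched only the vision B
x = [1.0, 2.0]
print(layer.forward(x))           # [1.0, 2.0]
print(layer.forward(x, "audio"))  # [1.0, 2.0]
print(layer.forward(x, "vision"))  # first entry shifted by the vision adapter
```

In this sketch, text-only inputs bypass every adapter, so the base output is reproduced exactly, and training one modality's adapter cannot change another modality's path.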

Training Methodology: The Secret Sauce

The impressive performance of Phi-4-Mini doesn't come from architectural innovations alone; careful data curation and training strategies play a crucial role.