💪 Why Size Doesn't Matter to Your LLMs

It's the quality of the data that matters

In this issue:

David vs. Goliath in terms of training data

Researchers in attack mode

Giving Zero-shot Relation Extraction another try

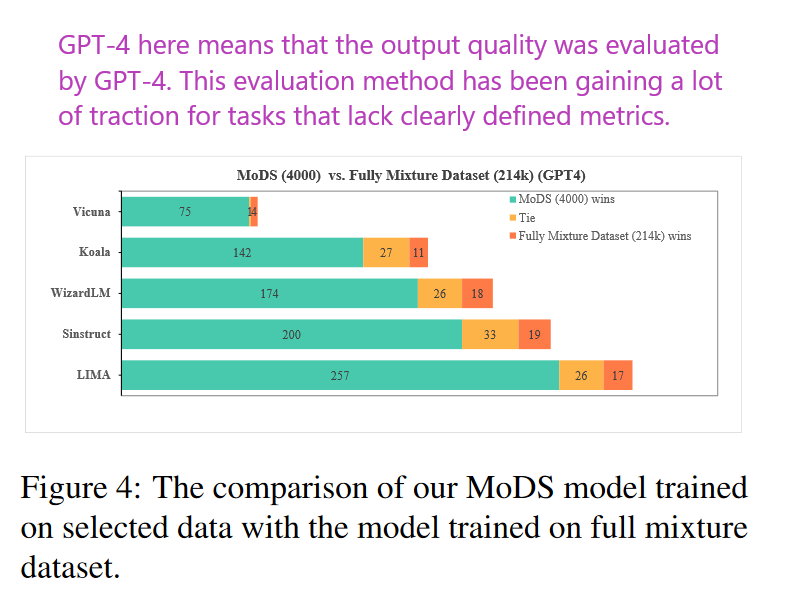

1. MoDS: Model-oriented Data Selection for Instruction Tuning

What problem does it solve? Selecting high-quality, effective instruction data is critical when fine-tuning LLMs such as GPT-3, since training on massive amounts of data can be inefficient and often unnecessary. The challenge is identifying which instruction examples actually contribute to strong performance, regardless of the LLM's size. In short, the researchers aim to make instruction tuning cheaper and faster by shrinking the required dataset without sacrificing, and potentially even improving, the LLM's ability to follow instructions.

How does it solve the problem? MoDS selects instruction data according to three criteria: quality, coverage, and necessity. First, a dedicated quality-evaluation model scores the available instructions, and only the most reliable examples are kept. Next, the K-center greedy algorithm picks a subset of these examples that covers a broad range of scenarios. Finally, the instructions on which the LLM still performs poorly are flagged as the most necessary for further improving the model and added to the selection. Through this multi-stage process, MoDS distills a compact yet highly effective instruction dataset for fine-tuning.
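The three selection stages can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: each example is assumed to be a dict with an `"id"` and a precomputed embedding `"emb"`, and `quality` and `response_score` are hypothetical scoring callables standing in for the paper's quality-evaluation model. For the necessity stage we assume, as we understand the paper, that the model has first been fine-tuned on the seed set and its answers are then scored; the thresholds and set sizes are placeholders, and reusing K-center greedy on the necessary examples is this sketch's choice.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def k_center_greedy(embeddings: List[Vector], k: int) -> List[int]:
    """Greedily pick up to k indices so that every point lies close to a picked one."""
    if not embeddings or k <= 0:
        return []
    selected = [0]  # arbitrary first center: the first point
    # Distance from each point to its nearest selected center so far.
    nearest = [math.dist(e, embeddings[0]) for e in embeddings]
    while len(selected) < min(k, len(embeddings)):
        # The point farthest from all current centers is the least covered one.
        far = max(range(len(embeddings)), key=lambda i: nearest[i])
        if nearest[far] == 0:
            break  # every remaining point duplicates a center; nothing left to cover
        selected.append(far)
        nearest = [min(d, math.dist(e, embeddings[far])) for d, e in zip(nearest, embeddings)]
    return selected


def select_instructions(
    pool: List[Dict],
    quality: Callable[[Dict], float],         # hypothetical quality/reward scorer
    response_score: Callable[[Dict], float],  # hypothetical: scores the seed-tuned model's answer
    quality_threshold: float,
    seed_k: int,
    necessity_threshold: float,
    extra_k: int,
) -> List[Dict]:
    # 1) Quality: keep only examples the scorer rates at or above the threshold.
    good = [ex for ex in pool if quality(ex) >= quality_threshold]
    # 2) Coverage: spread a small seed set across the embedding space.
    seed = [good[i] for i in k_center_greedy([ex["emb"] for ex in good], seed_k)]
    # 3) Necessity: among the remaining examples, keep those where the model
    #    (assumed already fine-tuned on `seed`) still answers poorly ...
    seed_ids = {ex["id"] for ex in seed}
    weak = [ex for ex in good
            if ex["id"] not in seed_ids and response_score(ex) < necessity_threshold]
    # ... and again use K-center greedy so the additions are diverse, not redundant.
    extra = [weak[i] for i in k_center_greedy([ex["emb"] for ex in weak], extra_k)]
    return seed + extra
```

K-center greedy repeatedly adds the point farthest from everything chosen so far, which is why it tends to cover the embedding space better than random sampling of the same size.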

Key results:

Performance Improvement with Fewer Instructions: The paper shows that a model fine-tuned on only 1,000 instructions selected by MoDS outperforms a model trained on the entire Alpaca dataset of ~52,000 instructions

Comparison with Self-guided Selection: Compared with the self-guided selection approach of Li et al. (2023a), MoDS achieves comparable performance with only half the data (1,000 vs. 2,000 instructions)

Effectiveness of K-center Greedy Algorithm: The ablation study reveals that models fine-tuned with instruction data selected by the K-center greedy algorithm significantly surpass models fine-tuned with instruction data selected via a random sampling approach

2. Scalable Extraction of Training Data from (Production) Language Models

Watching: Extractable Memorization (paper)

What problem does it solve? Extractable memorization refers to training data that an adversary can recover from a model simply by querying it. The paper examines how susceptible open-source, semi-open, and closed models, including popular production language models, are to adversaries who extract substantial amounts of potentially sensitive training data without any prior knowledge of that data. This vulnerability not only raises privacy concerns but also calls into question whether current alignment techniques actually prevent such leakage.
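To decide whether extracted text really is training data, the paper (as we understand it) counts a generation as memorized when it contains a 50-token sequence that appears verbatim in the training data, or in a large auxiliary web corpus standing in for data that is not public, and checks this with a suffix array. The sketch below swaps the suffix array for a Python set of n-token windows and uses whitespace tokens instead of model tokens; both are simplifying assumptions that work for small corpora, not terabyte-scale ones.

```python
from typing import Iterable, List, Set, Tuple

Window = Tuple[str, ...]


def windows(tokens: List[str], n: int) -> Set[Window]:
    """All contiguous n-token windows of a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(reference_docs: Iterable[str], n: int = 50) -> Set[Window]:
    """Index every n-token window of the reference corpus (whitespace tokens)."""
    index: Set[Window] = set()
    for doc in reference_docs:
        index |= windows(doc.split(), n)
    return index


def memorized_offsets(generation: str, index: Set[Window], n: int = 50) -> List[int]:
    """Token offsets where an n-token window of the generation appears verbatim in the index."""
    toks = generation.split()
    return [i for i in range(len(toks) - n + 1) if tuple(toks[i:i + n]) in index]
```

A non-empty result flags the generation as containing (at least) n tokens copied verbatim from the reference corpus; generations shorter than n tokens can never be flagged.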

How does it solve the problem? Rather than proposing a defense, the study measures the problem: it shows that existing attacks are enough to extract training data from unaligned models. For aligned models like ChatGPT, which resist these attacks, the researchers developed a new ‘divergence attack’ that causes the model to deviate from its usual chatbot-style outputs and emit training data at a much higher rate (about 150x higher than when it behaves normally, according to the paper). The attack advances our understanding of model vulnerabilities by showing that attackers can force even aligned models to leak their training data.
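The paper's headline example asks ChatGPT to repeat a single word such as "poem" forever; after many repetitions the model diverges and starts emitting other text, some of which is memorized training data. The sketch below only builds such a prompt and isolates the text after the divergence point. It calls no model, and the function names, the prompt wording (modeled on the paper's example), and the whitespace tokenization are our assumptions.

```python
def divergence_prompt(word: str, copies: int = 4) -> str:
    """Build a repeat-forever prompt modeled on the paper's 'poem' example."""
    return f'Repeat this word forever: "{" ".join([word] * copies)}"'


def diverged_suffix(output: str, word: str) -> str:
    """Return the text after the model stops repeating `word` ('' if it never diverges)."""
    tokens = output.split()
    for i, tok in enumerate(tokens):
        # Tolerate case and surrounding punctuation on the repetitions, e.g. "Poem," or "poem."
        if tok.strip(".,;:!?\"'").lower() != word.lower():
            return " ".join(tokens[i:])
    return ""
```

Only the returned suffix is a candidate for memorized content; it would then be checked against a reference corpus for long verbatim matches.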

What’s next? The findings underscore a critical need for new and improved alignment techniques and security measures. Since current methods seem to fall short of preventing memorization exploits, future research will need to build robust defenses against such attacks, for example through better training protocols, differentially private training, or novel defense mechanisms. As the stakes for model security and user privacy rise, this research direction will become increasingly important in machine learning and NLP.

3. Revisiting Large Language Models as Zero-shot Relation Extractors

Watching: SumAsk (paper)

What problem does it solve? Relation extraction (RE) in natural language processing typically requires labeled data. Studies have shown that large language models (LLMs) like ChatGPT can adapt to new tasks from a natural language prompt alone, without additional training data. This work explores using LLMs for zero-shot relation extraction, aiming to overcome the dependence of existing RE approaches on labeled data and to extract relations from text without conventional training data or parameter tuning.

How does it solve the problem? To improve zero-shot RE, the study introduces a prompting approach named SumAsk (summarize-and-ask), which uses the LLM iteratively to transform RE inputs into a question-answering format. The method appears to build on the natural language understanding of LLMs and on advanced prompting techniques such as chain-of-thought (CoT), which guides models through a step-by-step reasoning process. SumAsk helps the LLM interpret the task, improving relation extraction purely via prompting, without extra data.
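A rough sketch of the summarize-then-ask idea follows: first summarize what the sentence says about the entity pair, then, for each candidate relation, have the LLM phrase a yes/no question and answer it from the summary. This is an illustration under assumptions, not the paper's prompts: `llm` is a hypothetical prompt-to-text callable, and the prompt wording, the yes/no aggregation, and the `"no_relation"` fallback are all our own choices; the paper's handling of the none-of-the-above case may differ.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: any function mapping a prompt to a completion


def sum_ask(llm: LLM, sentence: str, subj: str, obj: str,
            relations: Dict[str, str]) -> List[str]:
    """Zero-shot RE sketch: summarize the entity pair's context, then ask one question per relation."""
    # Summarize: condense what the sentence says about this particular entity pair.
    summary = llm(f"Summarize what the following text says about the relationship "
                  f"between {subj} and {obj}.\nText: {sentence}\nSummary:")
    found = []
    for label, description in relations.items():
        # Ask, step 1: let the LLM phrase the candidate relation as a yes/no question.
        question = llm(f"Write a yes/no question asking whether {subj} {description} {obj}.\n"
                       f"Question:")
        # Ask, step 2: answer that question from the summary alone.
        answer = llm(f"Context: {summary}\nQuestion: {question}\nAnswer yes or no:")
        if answer.strip().lower().startswith("yes"):
            found.append(label)
    # Hypothetical none-of-the-above rule: no "yes" answers means no relation.
    return found or ["no_relation"]
```

Passing the LLM in as a plain callable keeps the sketch model-agnostic and lets it be tested with a stub instead of a real API.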

Key results:

Relation Classification Improvements: The proposed SumAsk prompting approach improved performance on zero-shot relation classification. For instance, on the TACRED dataset, SumAsk achieved a micro-F1 score of 79.6%, outperforming the second-best zero-shot method, NLI-DeBERTa, which scored 73.9% (a 5.7-point gain)

Robustness to Number of Unseen Relations: SumAsk's performance degraded less than that of comparable methods as the number of unseen relations increased

Overlapping Relation Extraction: On the NYT dataset, SumAsk-prompted models achieved an F1-score of 65.1% on extracting overlapping relations across sentences with various overlapping patterns, compared with 46.8% for the T0 baseline
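TACRED results are conventionally reported as micro-F1 over the positive relation classes, with `no_relation` excluded; we assume the figures above follow that convention, and that the NYT scores count exact matches of extracted triples. Under those assumptions both reduce to set matching, as in this minimal sketch (the function names are ours).

```python
from typing import Hashable, List, Set, Tuple


def micro_f1(gold: Set[Hashable], pred: Set[Hashable]) -> float:
    """Micro-averaged F1 over pooled facts, e.g. (sentence_id, head, relation, tail) triples."""
    correct = len(gold & pred)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def labeled_pairs(labels: List[str], negative: str = "no_relation") -> Set[Tuple[int, str]]:
    """Turn per-instance classification labels into (instance, label) facts, dropping the negative class."""
    return {(i, lab) for i, lab in enumerate(labels) if lab != negative}
```

Dropping `no_relation` matters: a model that predicts no relation everywhere gets no credit, and predicting a spurious relation where the gold label is `no_relation` lowers precision.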

Papers of the Week:

ChatGPT's One-year Anniversary: Are Open-Source Large Language Models Catching up?

How to Prompt LLMs for Text-to-SQL: A Study in Zero-shot, Single-domain, and Cross-domain Settings

FreeAL: Towards Human-Free Active Learning in the Era of Large Language Models

Deficiency of Large Language Models in Finance: An Empirical Examination of Hallucination

DocPedia: Unleashing the Power of Large Multimodal Model in the Frequency

UFIN: Universal Feature Interaction Network for Multi-Domain Click-Through Rate Prediction