🚀 What Next-Gen RAG Is About

And getting better insights from graphs

In this issue:

Dual-system RAG with photographic memory

LLMs coming up with more novel ideas than humans

Taking LLM Graph Learning to the next level

Tired of conferences that feel like sales venues? MLOps World is all about real-life experiences of actual practitioners.

It’s an event for top experts from all over the world to share their practical knowledge and hard-earned lessons.

If you want to join them on November 7-8 at the beautiful Renaissance Austin Hotel, you can get a 15% discount by clicking the link below.

1. MemoRAG: Moving towards Next-Gen RAG Via Memory-Inspired Knowledge Discovery

Watching: MemoRAG (paper/code)

What problem does it solve? Retrieval-Augmented Generation (RAG) has shown great promise in enhancing the performance of large language models (LLMs) by providing them with access to external knowledge bases. However, current RAG systems are limited in their ability to handle complex tasks that involve ambiguous information needs or unstructured knowledge. They excel primarily in straightforward question-answering scenarios where the queries and knowledge are well-defined.

How does it solve the problem? MemoRAG introduces a novel dual-system architecture to address the limitations of existing RAG systems. It employs a lightweight but long-context LLM to create a global memory of the database. When presented with a task, this LLM generates draft answers, which serve as clues for the retrieval tools to locate relevant information within the database. Additionally, MemoRAG utilizes a more expensive but expressive LLM to generate the final answer based on the retrieved information. This dual-system approach allows MemoRAG to handle complex tasks that conventional RAG struggles with, while still maintaining strong performance on straightforward tasks.
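To make the three stages concrete (memory model drafts clues, clues guide retrieval, expressive model answers), here is a minimal sketch of the control flow. It is not MemoRAG's implementation or API: `MemoRAGPipeline`, `draft_clues`, `generate`, and the word-overlap retriever are hypothetical stand-ins, and the two "LLMs" are plain Python callables so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class MemoRAGPipeline:
    # Hypothetical stand-in for the lightweight, long-context "memory" model:
    # given a task, it returns draft answers that serve as retrieval clues.
    draft_clues: Callable[[str], List[str]]
    # Hypothetical stand-in for the expensive, expressive answer generator.
    generate: Callable[[str, List[str]], str]
    corpus: List[str]
    top_k: int = 2

    def retrieve(self, queries: List[str]) -> List[str]:
        # Toy lexical retriever: a passage's score is its best word overlap with any query.
        def score(passage: str) -> int:
            words = set(passage.lower().split())
            return max(len(words & set(q.lower().split())) for q in queries)

        # sorted() is stable, so ties keep their original corpus order.
        return sorted(self.corpus, key=score, reverse=True)[: self.top_k]

    def answer(self, task: str) -> str:
        clues = self.draft_clues(task)              # stage 1: memory model drafts clues
        evidence = self.retrieve([task] + clues)    # stage 2: clue-guided retrieval
        return self.generate(task, evidence)        # stage 3: expressive model answers


# Usage with toy stand-ins: the vague task alone would not match the right passages,
# but the drafted clues do.
pipeline = MemoRAGPipeline(
    draft_clues=lambda task: ["Napoleon escaped Elba", "treaty signed Vienna"],
    generate=lambda task, evidence: " | ".join(evidence),
    corpus=[
        "The treaty was signed in Vienna in 1815.",
        "Napoleon escaped from Elba in February 1815.",
        "Bananas are rich in potassium.",
    ],
)
result = pipeline.answer("Summarize the key events of that year.")
print(result)
```

The point of the split is cost: only the cheap memory model needs to see the whole database, while the expensive model sees just the retrieved evidence.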

What's next? Moving forward, researchers can explore further optimizations to the MemoRAG framework, such as improving the efficiency of the retrieval process and investigating alternative architectures for the long-context LLM. This isn’t the first time someone has claimed to release “RAG 2.0”, so evaluating MemoRAG on real-world applications will be crucial.

2. Can LLMs Generate Novel Research Ideas? A Large-Scale Human Study with 100+ NLP Researchers

Watching: AI Researcher (paper/code)

What problem does it solve? As Large Language Models (LLMs) continue to improve, there is growing interest in their potential to accelerate scientific discovery by autonomously generating and validating novel research ideas. However, despite the optimism, there has been a lack of rigorous evaluations to determine whether LLMs can actually produce expert-level ideas that are both novel and feasible. This study aims to address this gap by conducting the first head-to-head comparison between expert NLP researchers and an LLM ideation agent in a controlled experimental setting.

How does it solve the problem? The researchers recruited over 100 NLP experts to write novel research ideas and provide blind reviews of both LLM-generated and human-generated ideas. By comparing the novelty and feasibility ratings of the ideas, they were able to draw statistically significant conclusions about the current capabilities of LLMs in research ideation. The results showed that LLM-generated ideas were judged as more novel than human expert ideas (p < 0.05), while being rated slightly lower on feasibility. This study provides valuable insights into the strengths and limitations of LLMs in generating research ideas and identifies open problems in building and evaluating research agents.
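A "statistically significant" difference in ratings comes from a two-sample test. As an illustration, here is Welch's t-test (which does not assume equal variances), one standard choice for comparing two groups of review scores; treat it as an assumed example, not a reproduction of the study's full analysis. The ratings below are invented toy numbers, not data from the paper. With SciPy installed, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic together with a p-value.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    # Squared standard error of each group's mean (variance() is the sample variance).
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical novelty scores, invented purely for illustration.
ai_ratings = [6, 5, 6, 7, 5, 6]
human_ratings = [5, 4, 5, 5, 4, 5]

t, df = welch_t(ai_ratings, human_ratings)
print(f"t = {t:.2f}, df = {df:.1f}")
```

A positive t means the first group scored higher; the p-value then comes from a t distribution with `df` degrees of freedom. When many metrics are tested at once, a correction such as Bonferroni (multiplying each p-value by the number of comparisons) keeps the overall false-positive rate in check.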

What's next? While this study provides important findings, the researchers acknowledge that human judgments of novelty can be challenging, even for experts. To address this, they propose an end-to-end study design that involves recruiting researchers to execute the generated ideas as full projects. This approach would enable a more comprehensive evaluation of whether the novelty and feasibility judgments translate into meaningful differences in research outcomes. As LLMs continue to advance, it will be crucial to conduct further studies that assess their potential to accelerate scientific discovery and identify areas for improvement in research agent development.

Bonus: If you want to dive deeper into this one, I published a detailed overview here.

3. GraphInsight: Unlocking Insights in Large Language Models for Graph Structure Understanding

Watching: GraphInsight (paper)

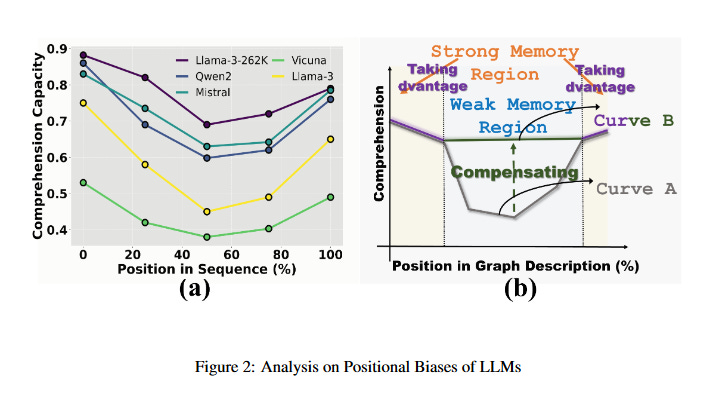

What problem does it solve? Large Language Models (LLMs) have shown impressive capabilities across natural language processing tasks, including processing and understanding graphs. However, their ability to comprehend graph structure from prompts containing graph description sequences deteriorates as the graph size increases. This limitation is attributed to the uneven memory performance of LLMs across different positions in the graph description sequence, known as "positional biases."

How does it solve the problem? GraphInsight addresses the positional biases in LLMs' memory performance by employing two key strategies. First, it strategically places critical graph information in positions where LLMs exhibit stronger memory performance, so that the most important information is more likely to be retained and understood by the model. Second, drawing inspiration from retrieval-augmented generation (RAG), GraphInsight introduces a lightweight external knowledge base for the regions with weaker memory performance. This knowledge base supplements the LLM's understanding of the graph structure where its memory of the prompt is least reliable.
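The two strategies can be sketched roughly as follows. Several details here are assumptions rather than GraphInsight's actual method: node degree is used as a hypothetical proxy for "critical" information, memory is assumed to be strongest at the start and end of the sequence, and `build_prompt_and_kb` and `retrieve` are illustrative names with a naive token-match lookup.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def build_prompt_and_kb(edges: List[Edge], n_critical: int = 2):
    # Hypothetical criticality proxy: the highest-degree nodes (ties broken by name).
    degree: Dict[str, int] = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    critical = set(sorted(degree, key=lambda n: (-degree[n], n))[:n_critical])

    # Partition edges into those touching a critical node and the rest.
    important: List[Edge] = []
    rest: List[Edge] = []
    for u, v in edges:
        (important if u in critical or v in critical else rest).append((u, v))

    # Assumed positional bias: strong memory at head and tail, weak in the middle.
    half = (len(important) + 1) // 2
    ordered = important[:half] + rest + important[half:]
    prompt = "\n".join(f"{u} -> {v}" for u, v in ordered)

    # Lightweight external KB indexing only the edges placed in the weak middle region.
    kb: Dict[str, List[str]] = defaultdict(list)
    for u, v in rest:
        kb[u].append(f"{u} -> {v}")
        kb[v].append(f"{u} -> {v}")
    return prompt, dict(kb)


def retrieve(kb: Dict[str, List[str]], question: str) -> List[str]:
    # Naive lookup: any question token that names a node pulls in that node's edges.
    hits: List[str] = []
    for token in question.replace("?", " ").split():
        hits.extend(kb.get(token, []))
    return hits


edges = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("E", "F"), ("F", "G")]
prompt, kb = build_prompt_and_kb(edges)
print(prompt)
print(retrieve(kb, "Is G reachable from E?"))
```

The retrieved lines would be appended to the question, restating exactly the facts the model is least likely to recall from the middle of a long description.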

What's next? The GraphInsight framework opens up new possibilities for LLMs to process and reason about complex graph structures. Future research could explore further optimizations and extensions of the framework, such as incorporating more advanced retrieval mechanisms or adapting it to domains with unique graph structures. The insights gained from GraphInsight could also inspire more efficient and effective graph representation techniques for LLMs, ultimately improving their ability to understand and reason about graph-structured information across applications.

Papers of the Week:

Elsevier Arena: Human Evaluation of Chemistry/Biology/Health Foundational Large Language Models

SciAgents: Automating scientific discovery through multi-agent intelligent graph reasoning

Evidence from fMRI Supports a Two-Phase Abstraction Process in Language Models

Are Large Language Models a Threat to Programming Platforms? An Exploratory Study

OneGen: Efficient One-Pass Unified Generation and Retrieval for LLMs

Insights from Benchmarking Frontier Language Models on Web App Code Generation