⌛ Time for LLMs?

Unlocking temporality in Large Language Models

In this issue:

It’s about time to think about temporality

Fusing models to create a supermodel

LLMs for Link Prediction via Natural Language

Want to market your brand? I’ve been personally using passionfroot for months now, and as much as 40% of my partnerships can be attributed to their platform. They make it easy for companies to find fitting creators for their brand, and I’ve found their streamlined collaboration process to be more efficient and more enjoyable for both sides.

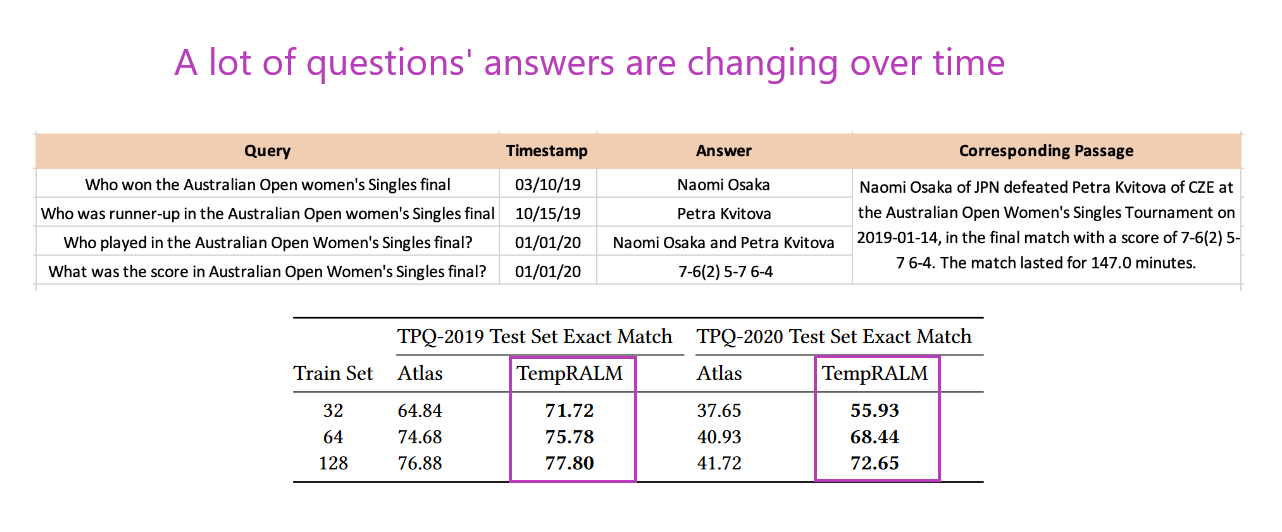

1. It's About Time: Incorporating Temporality in Retrieval Augmented Language Models

Watching: TempRALM (paper)

What problem does it solve? When users search for information, they need content that is not only relevant and accurate but also specific to the right point in time. This gets harder when several versions of a document cover the same topic at different times. Large Language Models (LLMs), and even Retriever Augmented Language Models (RALMs), which reduce LLM hallucination by retrieving from a document database, still struggle to pin down the temporal context of information. A standard RALM might retrieve documents about an event such as Wimbledon but fail to identify which of them describes the most recent tournament.

How does it solve the problem? The paper proposes TempRALM, a temporally-aware Retriever Augmented Language Model that ranks documents by both their semantic relevance and their temporal relevance to the query, rather than by semantic similarity alone. TempRALM extends a standard RALM with few-shot learning and does not require model pre-training, recomputing or replacing the document index, or other computationally expensive additions. This lets the model account for the time dimension of information, which matters most for time-sensitive queries, and improves the accuracy and relevance of its answers.
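To make the idea of combining semantic and temporal relevance concrete, here is a minimal Python sketch. It is not the paper's scoring function: the exponential-decay temporal score, the `half_life_days` and `alpha` parameters, the exclusion of documents dated after the query, and the toy embeddings are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from datetime import date


@dataclass
class Doc:
    text: str
    embedding: list  # dense vector from some retriever (toy values below)
    timestamp: date


def cosine(a, b):
    """Semantic relevance: cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def temporal_score(doc_time, query_time, half_life_days=365.0):
    """Assumed temporal relevance: halves every `half_life_days`.

    Returns None for documents dated after the query, which are skipped.
    """
    age_days = (query_time - doc_time).days
    if age_days < 0:
        return None
    return 0.5 ** (age_days / half_life_days)


def rank(query_emb, query_time, docs, alpha=1.0, k=3):
    """Rank documents by semantic score plus alpha-weighted temporal score."""
    scored = []
    for doc in docs:
        t = temporal_score(doc.timestamp, query_time)
        if t is None:
            continue
        scored.append((cosine(query_emb, doc.embedding) + alpha * t, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


# Toy example: three Wimbledon reports that are equally similar to the query.
docs = [
    Doc("Wimbledon 2021 report", [1.0, 0.0], date(2021, 7, 11)),
    Doc("Wimbledon 2023 report", [1.0, 0.0], date(2023, 7, 16)),
    Doc("Wimbledon 2022 report", [1.0, 0.0], date(2022, 7, 10)),
]
top = rank([1.0, 0.0], date(2023, 8, 1), docs, k=2)
print([d.text for d in top])  # → ['Wimbledon 2023 report', 'Wimbledon 2022 report']
```

With semantic similarity alone, the three reports would tie; the temporal term breaks the tie in favor of the most recent report published before the query date. In this sketch, `alpha` sets how strongly recency is weighed against topical similarity.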

What’s next? TempRALM tackles an aspect of information retrieval that is complex yet vital: temporality. Keeping these models grounded in up-to-date, time-relevant data without costly overhead will be an interesting space to watch, particularly for real-time information search via LLMs.

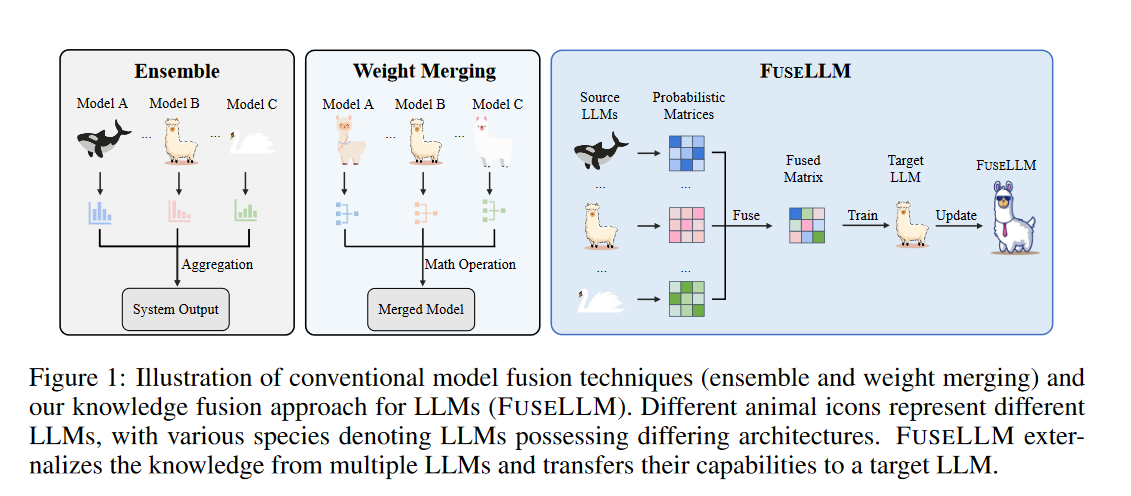

2. Knowledge Fusion of Large Language Models

Watching: Knowledge Fusion (paper/code)

What problem does it solve? Training large language models (LLMs) from scratch produces powerful models, but it is computationally expensive and can yield models with largely redundant capabilities. A more economical approach would build on the existing strengths of various pre-trained LLMs instead of training a new model from the ground up. Because LLMs differ in architecture, their abilities cannot simply be fused by blending their weights. Merging the knowledge of different LLMs could combine their capabilities, but an efficient way to do so had yet to be realized.

How does it solve the problem? The proposed approach, knowledge fusion for LLMs, transfers the generative knowledge of multiple source LLMs into a single target LLM. It draws on the generative (next-token) distributions that each source model has learned: these are aligned across the models' different tokenizers, fused, and used as a training signal in lightweight continual training of the target model. As a result, the target LLM can outperform each individual source LLM. Rather than a weight blend, this is a knowledge transfer process that folds the distinct strengths of each source model into one new model.
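The sketch below illustrates the general shape of such a fusion objective in plain Python. It assumes the source models' distributions are already aligned to a shared vocabulary (the token-alignment step is omitted), uses a simple fusion rule that keeps the source distribution with the lowest cross-entropy on the gold tokens, and mixes the usual language-modeling loss with a soft-label term through a hypothetical weight `lam`. Treat it as an illustration of the idea, not the authors' implementation.

```python
import math

EPS = 1e-12  # guards against log(0)


def cross_entropy(dists, labels):
    """Mean negative log-likelihood of the gold tokens.

    dists: one probability list per position; labels: gold token ids.
    """
    return -sum(math.log(max(d[y], EPS)) for d, y in zip(dists, labels)) / len(labels)


def fuse_min_ce(source_dists, labels):
    """Keep the source whose distributions best predict the gold tokens."""
    return min(source_dists, key=lambda dists: cross_entropy(dists, labels))


def soft_cross_entropy(target, fused):
    """Cross-entropy of the target's distributions against fused soft labels."""
    total = 0.0
    for p_target, p_fused in zip(target, fused):
        total -= sum(q * math.log(max(p, EPS)) for q, p in zip(p_fused, p_target))
    return total / len(target)


def fusion_objective(target, source_dists, labels, lam=0.9):
    """lam * LM loss + (1 - lam) * fusion loss (lam is a hypothetical default)."""
    fused = fuse_min_ce(source_dists, labels)
    return lam * cross_entropy(target, labels) + (1 - lam) * soft_cross_entropy(target, fused)


# Toy vocabulary of two tokens, sequence of two positions.
labels = [0, 1]
source_a = [[0.9, 0.1], [0.2, 0.8]]  # predicts the gold tokens well
source_b = [[0.4, 0.6], [0.7, 0.3]]  # predicts them poorly
target = [[0.6, 0.4], [0.5, 0.5]]

print(fuse_min_ce([source_a, source_b], labels) is source_a)  # → True
print(fusion_objective(target, [source_a, source_b], labels))
```

Lowering this objective pulls the target's distributions toward the gold tokens and toward the soft predictions of whichever source fits the data best, which is how knowledge moves from the sources into the target without touching their weights.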

What’s next? This knowledge fusion approach will likely be refined further and could become a standard way to build capable LLMs more sustainably. We should also expect fused models to be applied in fields where LLMs are already used, such as automated reasoning, content generation, and programming assistance. The researchers have made their code and models publicly available, which should encourage replication, critique, and further development of the technique.

3. Scalable Link Prediction on Large-Scale Heterogeneous Graphs with Large Language Models

Watching: LPNL (paper)

What problem does it solve? Large language models excel at understanding and generating natural language, but applying them to graph learning tasks such as link prediction is not straightforward. The main obstacles are the sheer volume of information in large graphs, far more than fits into a model's limited input, and the complexity of heterogeneous networks, which contain many types of nodes and relationships. Traditional methods may also struggle to predict connections efficiently in such large, heterogeneous graphs.

How does it solve the problem? The proposed LPNL (Link Prediction via Natural Language) framework combines natural language processing with graph analysis. It describes graph details in natural language prompts, translating graph elements into text that LLMs can process. A two-stage sampling pipeline selectively extracts the most relevant information from the graph, and a divide-and-conquer strategy keeps the amount of input per prompt manageable for the model. Using these components, the authors fine-tune a T5 model with a self-supervised objective designed for link prediction, allowing it to handle large-scale, heterogeneous graph data efficiently.
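One way to picture the divide-and-conquer idea is a tournament over candidate nodes: split the candidates into small groups, build one natural-language prompt per group, let a model pick the most likely target in each group, and advance the winners until one remains. This is a minimal Python sketch, not LPNL's code; the prompt template, `group_size`, and the `choose` callable (a stand-in for the fine-tuned T5 model, here a toy score lookup) are hypothetical.

```python
from typing import Callable, List, Sequence


def build_prompt(source: str, relation: str, candidates: Sequence[str]) -> str:
    """Describe one link-prediction subproblem in natural language (hypothetical template)."""
    options = "\n".join(f"({i}) {name}" for i, name in enumerate(candidates))
    return (
        f"Which of the following nodes is most likely linked to '{source}' "
        f"by the relation '{relation}'?\n{options}"
    )


def tournament_select(
    source: str,
    relation: str,
    candidates: Sequence[str],
    choose: Callable[[str, Sequence[str]], int],
    group_size: int = 4,
) -> str:
    """Pick one candidate while keeping every prompt to at most group_size options."""
    if group_size < 2:
        raise ValueError("group_size must be at least 2 so the pool shrinks")
    if not candidates:
        raise ValueError("need at least one candidate")
    pool: List[str] = list(candidates)
    while len(pool) > 1:
        winners = []
        for start in range(0, len(pool), group_size):
            group = pool[start:start + group_size]
            prompt = build_prompt(source, relation, group)
            winners.append(group[choose(prompt, group)])  # model picks one index
        pool = winners
    return pool[0]


# Toy stand-in for the model: look up a made-up plausibility score per node.
scores = {f"paper_{i}": (i * 7) % 10 for i in range(10)}
prompts_seen = []


def toy_choose(prompt: str, group: Sequence[str]) -> int:
    prompts_seen.append(prompt)
    return max(range(len(group)), key=lambda i: scores[group[i]])


best = tournament_select("author_42", "writes", list(scores), toy_choose)
print(best)  # → paper_7
```

With 10 candidates and `group_size=4`, the model sees four short prompts (three in the first round, one in the final) instead of a single prompt listing all ten candidates, so the input length per call stays bounded no matter how many candidates the graph yields.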

What’s next? Because LPNL outperforms various advanced baselines on link prediction, it lays a foundation for further applications of LLMs to graph-related tasks. Its way of handling the volume and complexity of graph data could carry over to other problems on large networks. Follow-up research could refine these techniques for more efficient information extraction and prediction on complex networks, which may benefit fields that rely heavily on graph analysis, such as social network dynamics and molecular structure prediction.

Papers of the Week:

AgentBoard: An Analytical Evaluation Board of Multi-turn LLM Agents

GraphiMind: LLM-centric Interface for Information Graphics Design

KAM-CoT: Knowledge Augmented Multimodal Chain-of-Thoughts Reasoning