The Memory Operating System for AI

Stay ahead of the curve with these hand-picked research highlights

Welcome, Watcher! This week in LLM Watch, each paper of the week gets its own highlight. Among others, we will be covering the following papers:

Energy-Based Transformers are Scalable Learners and Thinkers

AI4Research: A Survey of Artificial Intelligence for Scientific Research

NaturalThoughts: Selecting and Distilling Reasoning Traces for General Reasoning Tasks

Establishing Best Practices for Building Rigorous Agentic Benchmarks

Courtesy of NotebookLM

Energy-Based Transformers are Scalable Learners and Thinkers

TL;DR: Meta researchers introduce Energy-Based Transformers (EBTs) that outscale standard transformers by up to 35% on data efficiency while enabling System 2 thinking capabilities through energy minimization rather than traditional forward passes. (paper/code)

Energy-based models aren't new, but making them scale has been the holy grail. This paper cracks the code by unifying transformer architecture with energy-based learning. The key insight: instead of just predicting the next token, EBTs assign an energy value to input-output pairs and find solutions through gradient descent on this energy landscape.
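To make "finding solutions through gradient descent on the energy landscape" concrete, here is a minimal sketch. It is a toy, not the paper's model: the quadratic `energy` function, the `think` helper, and all parameter values are assumptions chosen for illustration. A real EBT would learn the energy function with a transformer.

```python
def energy(context: float, candidate: float) -> float:
    """Toy energy: low when the candidate is compatible with the context.

    Assumption for illustration: the 'right' answer is 2 * context.
    """
    return (candidate - 2.0 * context) ** 2


def energy_grad(context: float, candidate: float) -> float:
    """Analytic gradient of the toy energy with respect to the candidate."""
    return 2.0 * (candidate - 2.0 * context)


def think(context: float, steps: int = 50, lr: float = 0.1, init: float = 0.0) -> float:
    """Refine an initial guess by descending the energy landscape."""
    candidate = init
    for _ in range(steps):
        candidate -= lr * energy_grad(context, candidate)
    return candidate


if __name__ == "__main__":
    for steps in (1, 5, 50):
        guess = think(3.0, steps=steps)
        print(steps, round(guess, 4), round(energy(3.0, guess), 6))
```

More descent steps mean a lower final energy, which is the sense in which such a model can spend extra compute to "think longer" about a prediction.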

Why it matters:

35% more data efficient than Transformer++ - meaning you'd need <20T tokens instead of 30T for equivalent performance

Enables breadth-first search reasoning rather than committing to single paths

Outperforms larger models (32B) with smaller architectures (7B) when properly trained
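The token figure in the first bullet depends on how you read "35% more data efficient". The sketch below works through two possible readings using the 30T baseline from the text. Both function names are hypothetical, and neither reading is confirmed as the paper's definition.

```python
BASELINE_TOKENS_T = 30.0  # baseline token budget in trillions, from the text
GAIN = 0.35               # the quoted 35% figure


def tokens_if_fewer(baseline: float, gain: float) -> float:
    """Reading A: the model needs 35% fewer tokens for the same performance."""
    return baseline * (1.0 - gain)


def tokens_if_rate(baseline: float, gain: float) -> float:
    """Reading B: each token is worth 1.35x, so the budget shrinks by 1 / 1.35."""
    return baseline / (1.0 + gain)


if __name__ == "__main__":
    print(f"Reading A: {tokens_if_fewer(BASELINE_TOKENS_T, GAIN):.1f}T tokens")
    print(f"Reading B: {tokens_if_rate(BASELINE_TOKENS_T, GAIN):.1f}T tokens")
```

Reading A gives 19.5T, which matches the "<20T" figure above. Reading B gives roughly 22.2T, so the headline saving is somewhat sensitive to the definition.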

The key innovation is their training approach using "energy landscape regularization" - essentially teaching the model to create smoother, more navigable energy surfaces. This allows genuine System 2 reasoning to emerge from unsupervised learning alone.

Bottom line: If this scales to GPT-4 sizes, we might see a fundamental shift in how we build reasoning models. Less data, better reasoning, smaller models - what's not to love?

Join me at the Imperial Palace this September!

If you don’t know what TEDAI is, it’s an exclusive invite-only TED conference dedicated to Artificial Intelligence – with Vienna being one of the few select locations, and the only one in Europe.

Last year’s TEDAI Vienna 2024 was the first of its kind and can be considered a huge success. Some of the most important decision makers from all over the world came together with leading AI experts for 3 days of dinners, talks, workshops, and much more.

AI4Research: The Complete Survey of AI in Scientific Research

TL;DR: Comprehensive survey covering how AI is transforming scientific research across 5 key areas, from understanding papers to peer review, with practical resources and tools. (paper/code)

With models like DeepSeek-R1 and OpenAI o1 showing “superhuman” reasoning capabilities, the natural question is: can AI do actual scientific research? This massive survey (16 authors, 900+ references) provides a first answer.

The taxonomy breaks down into five core capabilities:

Scientific Comprehension - Understanding papers, charts, formulas

Academic Survey - Literature review and synthesis

Scientific Discovery - Hypothesis generation and testing

Academic Writing - Paper generation and structuring

Peer Review - Evaluation and critique

Key findings:

AI can now achieve human-level performance on literature synthesis tasks

Graph-guided retrieval outperforms traditional search by 15-18%

LLMs still struggle with truly novel hypothesis generation

Peer review remains the weakest link (too much hallucination)
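For intuition on what "graph-guided retrieval" can mean, here is a minimal sketch under stated assumptions: a plain keyword search finds seed papers, and a citation graph then pulls in neighbours the keywords alone would miss. The paper IDs, titles, and both function names are made up for illustration, and the survey's actual systems are far more elaborate.

```python
from collections import deque


def keyword_search(papers: dict, query: str) -> list:
    """Baseline: return paper IDs whose title shares a word with the query."""
    terms = set(query.lower().split())
    return [pid for pid, title in papers.items() if terms & set(title.lower().split())]


def graph_guided_retrieve(papers: dict, citations: dict, query: str, hops: int = 1) -> list:
    """Start from keyword hits, then expand along citation edges up to `hops` steps."""
    seeds = keyword_search(papers, query)
    seen = set(seeds)
    ordered = list(seeds)
    queue = deque((pid, 0) for pid in seeds)
    while queue:
        pid, depth = queue.popleft()
        if depth == hops:
            continue
        for neighbour in citations.get(pid, []):
            if neighbour not in seen:
                seen.add(neighbour)
                ordered.append(neighbour)
                queue.append((neighbour, depth + 1))
    return ordered


if __name__ == "__main__":
    papers = {
        "p1": "Graph retrieval for science",
        "p2": "Dense passage search",
        "p3": "Protein folding",
    }
    citations = {"p1": ["p2"], "p2": ["p3"]}
    print(keyword_search(papers, "graph retrieval"))
    print(graph_guided_retrieve(papers, citations, "graph retrieval", hops=2))
```

The keyword baseline only finds `p1`, while the graph expansion also surfaces the papers it cites, which is the kind of recall gain the finding above refers to.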

Resources included: 13 domain-specific datasets, evaluation benchmarks, and a curated GitHub repo with implementations.

Bottom line: We're closer than ever to AI research assistants, but human creativity still reigns supreme for breakthrough discoveries.

WebSailor: Closing the Gap with Proprietary Web Agents

TL;DR: Alibaba's WebSailor matches DeepResearch performance on complex web tasks by teaching models to handle extreme uncertainty through synthetic "fog" data and novel RL training. (paper/code)

Remember when OpenAI's DeepResearch blew everyone's mind by solving "impossible" web research tasks? Alibaba just open-sourced the recipe. The main ingredient: recognizing that web agents need to navigate extreme uncertainty, not just follow instructions.

The innovation stack:

SailorFog-QA: Synthetic high-uncertainty tasks created by deliberately obscuring information

RFT Cold Start: Clean supervision from expert trajectories

DUPO Algorithm: Duplicating Sampling Policy Optimization for efficient agentic RL
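The idea behind "deliberately obscuring information" can be illustrated with a small sketch. This is an assumption about the general flavour of such obfuscation, not the SailorFog-QA pipeline itself: exact years become vague decades, and named entities become loose descriptions. The function names and the example entity are hypothetical.

```python
import re


def fog_years(text: str) -> str:
    """Replace exact four-digit years with a vague decade reference."""
    def vague(match: re.Match) -> str:
        year = int(match.group())
        return f"the {year - year % 10}s"
    return re.sub(r"\b(1[0-9]{3}|20[0-9]{2})\b", vague, text)


def fog_entities(text: str, descriptions: dict) -> str:
    """Swap each named entity for a deliberately loose description."""
    for name, vague_description in descriptions.items():
        text = text.replace(name, vague_description)
    return text


def make_foggy(question: str, descriptions: dict) -> str:
    """Apply both obfuscations so the question can no longer be answered by one lookup."""
    return fog_years(fog_entities(question, descriptions))


if __name__ == "__main__":
    print(make_foggy("Who led Acme Corp in 1987?", {"Acme Corp": "a mid-sized manufacturer"}))
```

A question fogged this way forces an agent to form and test hypotheses across several searches instead of matching a single keyword.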

Performance highlights:

12.0% on BrowseComp-en (matching proprietary systems)

30.1% on BrowseComp-zh

55.4% on GAIA

WebSailor-7B outperforms 32B parameter agents

The key insight: instead of training on clean, well-defined tasks, they create intentionally ambiguous problems that force the model to develop robust reasoning strategies.

Bottom line: The open-source community just caught up to OpenAI in web agents. Expect an explosion of applications in the next 6 months.

NaturalThoughts: Better Reasoning Through Data Curation

TL;DR: Systematic study reveals that distilling reasoning from strong models works best with difficult, diverse examples - contradicting the "less is more" hypothesis. (paper)

Everyone's trying to replicate o1 and o3's reasoning, but what training data actually works? This paper provides the first systematic answer by creating NaturalThoughts - carefully curated reasoning traces from DeepSeek-R1.

Key findings:

Scale matters: More data consistently improves performance (sorry, "less is more" believers)

Difficulty wins: Hard problems requiring diverse strategies transfer best

Mixed training helps: Combining System 1 (direct answers) and System 2 (reasoning) improves efficiency

Performance gains:

Beats OpenThoughts, LIMO, and other datasets on GPQA-Diamond, MMLU-Pro

Achieves strong results with just 1,000 carefully selected examples

Scales efficiently to 500K examples

The surprise: model disagreement (when teacher and student initially differ) is the best signal for valuable training data.
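As a rough illustration of disagreement-based selection, the sketch below keeps only the examples where a teacher and a student give different final answers. The `Example` type, the string normalization, and the sample data are assumptions for illustration; the paper's actual selection procedure may differ.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    teacher_answer: str
    student_answer: str


def normalize(answer: str) -> str:
    """Crude normalization so trivial formatting differences do not count as disagreement."""
    return answer.strip().lower()


def select_by_disagreement(examples: list) -> list:
    """Keep examples where the student's answer differs from the teacher's."""
    return [
        ex for ex in examples
        if normalize(ex.teacher_answer) != normalize(ex.student_answer)
    ]


if __name__ == "__main__":
    pool = [
        Example("2 + 2?", "4", "4"),
        Example("Capital of Australia?", "Canberra", "Sydney"),
        Example("Boiling point of water in C?", "100", " 100 "),
    ]
    for ex in select_by_disagreement(pool):
        print(ex.question)
```

The intuition is that questions the student already answers like the teacher carry little new signal, so the training budget goes to the ones where it does not.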

Bottom line: Quality AND quantity matter for reasoning. The path to better models isn't through clever tricks - it's through better data.

Best Practices for Rigorous Agentic Benchmarks

TL;DR: Systematic analysis reveals major flaws in popular agent benchmarks, proposes ABC checklist to ensure valid evaluation. (paper/code)

Agent benchmarks are exploding, but are they actually measuring what we think? This paper drops some uncomfortable truths: SWE-bench uses insufficient tests, TAU-bench counts empty responses as success, and estimation errors can reach 100%.

The two core validity issues:

Task validity: Does success actually mean the agent has the target capability?

Outcome validity: Does the evaluation correctly identify success?

Common pitfalls found:

Incomplete test coverage (patches can pass but still be wrong)

Shortcuts and "impossible" tasks

Reward hacking through edge cases

Poor grading of unstructured outputs

The paper introduces the Agentic Benchmark Checklist (ABC) - a systematic framework for building and evaluating benchmarks.
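One cheap sanity check in the spirit of the outcome-validity issue above is to run a "null agent" that returns nothing and see whether the grader still passes it. The sketch below is a hypothetical illustration, not the actual TAU-bench grader or an item quoted from the ABC checklist; the task format and both graders are made up.

```python
def lenient_grader(response: str, task: dict) -> bool:
    """Flawed grader: passes whenever no forbidden string appears.

    An empty response contains nothing forbidden, so it passes too.
    """
    return not any(term in response for term in task["forbidden"])


def strict_grader(response: str, task: dict) -> bool:
    """Stricter grader: rejects empty output and also checks required content."""
    if not response.strip():
        return False
    has_required = all(term in response for term in task["required"])
    has_forbidden = any(term in response for term in task["forbidden"])
    return has_required and not has_forbidden


def null_agent(task: dict) -> str:
    """An agent that does nothing at all."""
    return ""


def null_agent_pass_rate(grader, tasks: list) -> float:
    """Fraction of tasks the do-nothing agent 'solves' under a given grader."""
    passed = sum(1 for task in tasks if grader(null_agent(task), task))
    return passed / len(tasks)


if __name__ == "__main__":
    tasks = [
        {"required": ["refund issued"], "forbidden": ["error"]},
        {"required": ["order cancelled"], "forbidden": ["cannot"]},
    ]
    print("lenient:", null_agent_pass_rate(lenient_grader, tasks))
    print("strict:", null_agent_pass_rate(strict_grader, tasks))
```

Any non-zero pass rate for the null agent signals that the benchmark's scores overstate what agents can actually do.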

Bottom line: Half the agent benchmarks you see are probably broken. Use the ABC checklist before trusting any results.

Test-Time Scaling with Reflective Generative Models

TL;DR: MetaStone-S1 achieves o3-mini performance using a unified architecture that combines policy and reward models, eliminating 99% of reward model parameters. (paper/code)

While everyone's obsessed with inference-time compute, this paper shows how to do it efficiently. The breakthrough: Self-supervised Process Reward Models (SPRM) that share backbone weights between generation and evaluation.

Technical innovations:

Unified architecture: One model, two heads (generation + scoring)

Self-supervised training: No expensive process annotations needed

Dynamic step segmentation: Evaluates reasoning at natural breakpoints
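To show how per-step scores from a scoring head could drive test-time scaling, here is a minimal best-of-N sketch. The step scores are given as plain numbers instead of coming from a real model, and aggregating them with a geometric mean is an assumption for illustration, not a detail confirmed by the text above.

```python
import math


def trajectory_score(step_scores: list) -> float:
    """Aggregate per-step scores in (0, 1] into one score via the geometric mean.

    The geometric mean punishes a single weak step more than the arithmetic mean does.
    """
    if not step_scores:
        return 0.0
    clipped = [max(score, 1e-9) for score in step_scores]  # avoid log(0)
    return math.exp(sum(math.log(score) for score in clipped) / len(clipped))


def best_of_n(candidates: list) -> str:
    """Pick the answer whose reasoning trajectory scores highest.

    `candidates` is a list of (answer, step_scores) pairs.
    """
    best_answer, _ = max(candidates, key=lambda pair: trajectory_score(pair[1]))
    return best_answer


if __name__ == "__main__":
    candidates = [
        ("A", [0.9, 0.9, 0.2]),  # strong start, one bad step
        ("B", [0.7, 0.7, 0.7]),  # consistently decent
    ]
    print(best_of_n(candidates))
```

Sampling more candidates and keeping the top-scoring one is the simplest way to trade extra inference compute for accuracy. Sharing the backbone between generation and scoring is what keeps that scoring pass cheap.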

Results:

Matches commercial models with 99% fewer parameters for reward modeling

Shows clear scaling laws: performance ∝ log(compute × parameters)

Compatible with MCTS and other search methods

The "aha moment": during training, the model suddenly develops the ability to discriminate between correct and incorrect reasoning paths.

Bottom line: We don't need separate massive reward models. Smart architecture beats brute force every time.

MedGemma: Google's Open Medical AI Models

TL;DR: Google releases MedGemma - open-source medical AI models (4B and 27B) achieving near-radiologist performance on chest X-rays and 87.7% on medical reasoning. (paper/model)

Google just open-sourced AI models that rival GPT-4's medical performance at 1/10th the inference cost. Built on Gemma 3, these models handle both images and text with clinical-grade capabilities.

Model variants:

MedGemma 4B: Multimodal, handles radiology, pathology, dermatology, ophthalmology

MedGemma 27B: Text-focused, excels at clinical reasoning and EHR interpretation

Performance highlights:

81% of generated chest X-ray reports rated clinically accurate by radiologists

87.7% on MedQA (within 3 points of DeepSeek R1)

State-of-the-art on FHIR data interpretation

Deployment ready: Runs on single GPU, with mobile deployment possible for 4B model.

Bottom line: High-quality medical AI is now accessible to any developer. Expect an explosion in healthcare AI applications.

A Survey on Latent Reasoning: Beyond Language

TL;DR: Comprehensive survey explores how LLMs can perform reasoning in continuous latent spaces rather than through tokens, potentially unlocking superhuman capabilities. (paper/code)

What if forcing AI to "think" in human language is holding it back? This survey examines the emerging field of latent reasoning - where models perform multi-step inference entirely in continuous hidden states.

Key paradigms:

Activation-based: Recurrent flows through same layers (vertical depth)

Hidden-state based: Sequential processing with carried state (horizontal length)

Masked diffusion: Infinite-depth reasoning through iterative refinement
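The difference between the first two paradigms is easier to see in code. The sketch below is a toy under stated assumptions: `layer` is a made-up elementwise function standing in for a transformer block, and hidden states are plain lists of floats. It is not taken from the survey.

```python
import math


def layer(hidden: list, weight: float = 0.5, bias: float = 0.1) -> list:
    """Stand-in for one shared block: an elementwise affine map followed by tanh."""
    return [math.tanh(weight * h + bias) for h in hidden]


def activation_based(hidden: list, loops: int) -> list:
    """Vertical depth: push the same hidden state through the same layer repeatedly."""
    for _ in range(loops):
        hidden = layer(hidden)
    return hidden


def hidden_state_based(inputs: list, state: list) -> list:
    """Horizontal length: carry one state forward across a sequence of inputs."""
    for x in inputs:
        state = layer([s + xi for s, xi in zip(state, x)])
    return state


if __name__ == "__main__":
    start = [1.0, -1.0]
    print(activation_based(start, loops=3))
    print(hidden_state_based([[0.2, 0.0], [0.0, 0.3]], start))
```

In both cases the intermediate "thoughts" are vectors that never pass through the vocabulary, which is exactly why they are richer than tokens and also harder to inspect.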

Why it matters:

No token bottleneck: Richer representations than discrete vocabulary

Parallel reasoning: Can explore multiple paths simultaneously

Non-linguistic concepts: Access to reasoning patterns with no words

Current limitations:

Interpretability: Can't read the "thoughts"

Training complexity: Harder to supervise without explicit traces

Evaluation challenges: How do you score invisible reasoning?

Bottom line: Latent reasoning could be the key to truly alien intelligence. The trade-off: we might understand even less of what our models are “thinking”.

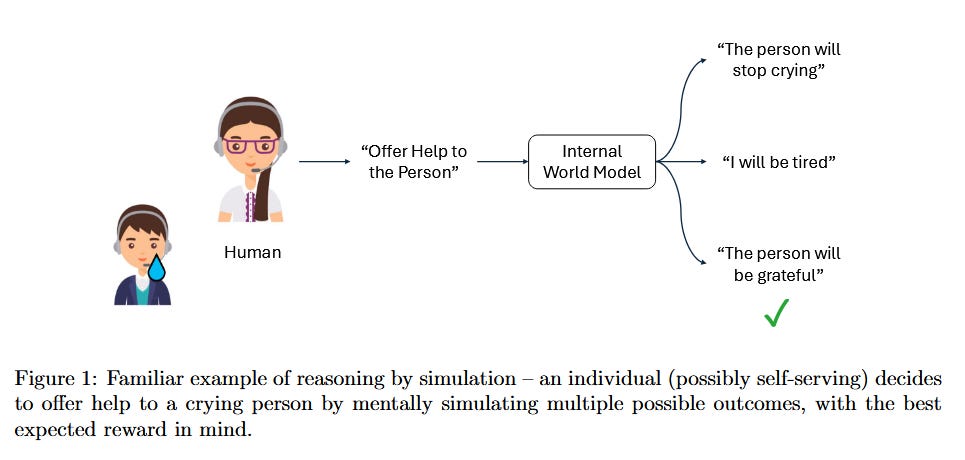

Critiques of World Models: From Dune to AGI

TL;DR: Philosophical essay argues world models should simulate "all actionable possibilities" for purposeful reasoning, proposes PAN (Physical, Agentic, Nested) architecture. (paper)

Starting from Frank Herbert's prescient navigators in Dune, this paper asks: what should a world model actually be? The authors critique current approaches as too narrow, arguing for models that capture possibility spaces, not just likely outcomes.

Core critiques:

Current models are too deterministic - real intelligence needs counterfactuals

Focus on perception misses the point - action possibilities matter more

Single-level representations are insufficient - need hierarchical abstractions

Proposed solution - PAN Architecture:

Physical: Grounded in real-world dynamics

Agentic: Centered on actionable possibilities

Nested: Multi-level representations from atoms to abstractions

The key insight: hypothetical thinking (imagining what could be) is more important than predictive accuracy for AGI.

Bottom line: We might be building world models wrong. The authors argue that true intelligence needs to imagine possibilities, not just predict probabilities.

MemOS: An Operating System for AI Memory

TL;DR: Revolutionary memory architecture treats memory as a first-class resource in LLMs, achieving up to 159% improvement over OpenAI's memory systems. (paper/code)

What if LLMs had a proper memory system, the way computers have operating systems? MemOS makes this a reality, introducing unified memory management across parametric (weights), activation (attention), and plaintext (external) memory.

Core contribution - MemCubes:

Standardized memory units that can be composed, migrated, and evolved

Version control and lifecycle management built-in

Cross-agent memory sharing capabilities
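A rough sketch of what a standardized, versioned memory unit could look like follows. The `MemCube` class here is a hypothetical illustration of the three bullets above. Its fields and method names are assumptions and do not reflect the actual MemOS API.

```python
from dataclasses import dataclass, replace
from enum import Enum


class MemoryKind(Enum):
    PARAMETRIC = "parametric"  # knowledge stored in weights
    ACTIVATION = "activation"  # attention / KV-cache state
    PLAINTEXT = "plaintext"    # external text memory


@dataclass(frozen=True)
class MemCube:
    """Immutable memory unit: every change yields a new object, so old versions survive."""
    owner: str
    kind: MemoryKind
    payload: str
    version: int = 1
    state: str = "active"  # lifecycle marker, e.g. "active" or "archived"

    def updated(self, payload: str) -> "MemCube":
        """Evolve the memory: new payload, bumped version."""
        return replace(self, payload=payload, version=self.version + 1)

    def archived(self) -> "MemCube":
        """Lifecycle transition: mark the memory as no longer active."""
        return replace(self, state="archived")

    def shared_with(self, agent: str) -> "MemCube":
        """Migrate a copy of the memory to another agent."""
        return replace(self, owner=agent)


if __name__ == "__main__":
    cube = MemCube("agent-a", MemoryKind.PLAINTEXT, "user prefers metric units")
    newer = cube.updated("user prefers metric units and 24h time")
    print(cube.version, newer.version, newer.shared_with("agent-b").owner)
```

Making the unit immutable is a design choice for this sketch: it gives version history for free, at the cost of copying on every update.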

Performance explosion:

159% improvement in temporal reasoning vs OpenAI

38.97% overall accuracy gain

60.95% reduction in token usage

94% lower latency with KV-cache injection

Architecture highlights:

Three-layer design (API, Scheduling, Storage)

"Next-Scene Prediction" for proactive memory preloading

Tree structure with graph-style semantic links
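The last bullet, a tree with graph-style semantic links, can be sketched as a node type that has both children and cross-links. This is a hypothetical illustration: `MemoryNode`, `reachable`, and the example memories are assumptions, not the MemOS storage layer.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryNode:
    name: str
    children: list = field(default_factory=list)  # tree edges (hierarchy)
    links: list = field(default_factory=list)     # graph-style semantic edges


def reachable(root: MemoryNode, follow_links: bool = True) -> list:
    """Depth-first walk from `root`, optionally following semantic links as well."""
    seen, order, stack = set(), [], [root]
    while stack:
        node = stack.pop()
        if node.name in seen:
            continue
        seen.add(node.name)
        order.append(node.name)
        neighbours = node.children + (node.links if follow_links else [])
        stack.extend(reversed(neighbours))
    return order


if __name__ == "__main__":
    project = MemoryNode("project-x")
    work = MemoryNode("work", children=[project])
    cycling = MemoryNode("cycling", links=[project])  # semantic link across branches
    hobbies = MemoryNode("hobbies", children=[cycling])
    root = MemoryNode("user", children=[work, hobbies])
    print(reachable(hobbies, follow_links=False))
    print(reachable(hobbies))
```

The tree keeps memories organized by topic, while the links let retrieval jump between branches when two memories are related in meaning.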

Bottom line: This could be as important for AI as virtual memory was for computers. Memory isn't just storage - it's the foundation for truly evolving AI systems.

The big question: As these capabilities combine, are we approaching systems that think in ways fundamentally alien to human cognition? And if so, how do we ensure they remain aligned with human values when we can't even understand their thoughts?