The Agentic Transformation Playbook

Send this to your favorite executive

In Brief: AI agents – autonomous software “co-workers” powered by advanced AI – are about to transform how enterprises operate. Forward-looking executives are piloting these agentic workflows now to gain efficiency, innovation, and strategic advantage. But realizing the promise of AI agents requires clarity of strategy: understanding what agents can and cannot do, learning from early successes in narrow domains, navigating a fast-evolving competitive landscape, and adopting these technologies with proper governance and change management.

This article is a guide for CxOs, board members, and anyone else in a position to make decisions who wants to lead their organization into the age of AI agents.

Understanding AI Agents and Agentic Workflows

An AI agent is essentially an intelligent software entity that can perceive its environment, make decisions, and take actions toward a goal with minimal human intervention. Unlike a static program, an AI agent often leverages generative AI and machine learning to interpret context, learn from data, and adapt its behavior. In practice, this means AI agents can autonomously execute multi-step tasks (e.g. retrieving information, transforming data, calling APIs, or initiating transactions) in response to high-level objectives rather than explicit step-by-step instructions.

Agentic workflows refer to business processes partially orchestrated by these AI agents (often multiple agents working together), exhibiting goal-directed autonomy. This is a significant evolution from traditional automation. In the past, automation meant scripting repetitive tasks or using RPA bots that follow rigid rules. By contrast, AI agents bring capabilities like:

Contextual understanding and reasoning: Agents use AI (including large language models) to understand unstructured inputs (documents, conversations) and apply complex rules or context (e.g. regulations, prior history) to each situation. They don’t just follow a fixed “if-this-then-that” script – they can interpret intent and nuanced conditions.

Learning and adaptation: Agents can improve over time by learning from new data and feedback. For example, a fraud-detection agent might self-tune its thresholds as it encounters new fraud patterns. This self-learning contrasts with legacy systems that require manual reprogramming to handle new scenarios.

Proactive action: Whereas legacy automation waits for a trigger or follows a schedule, AI agents can proactively initiate next steps toward their goals. They can monitor incoming data or events and determine “what’s next” without a human prompt. For instance, an agent might observe a delay in a shipment and automatically reorder inventory or notify customers, actions a static system wouldn’t take on its own.

Collaboration and orchestration: Multiple AI agents can work in concert, passing tasks among themselves like a well-trained team. One agent might specialize in data gathering, another in analysis, and another in executing transactions – all coordinating to complete a complex workflow (we already see prototypes of this in multi-agent financial trading and fraud investigation systems).

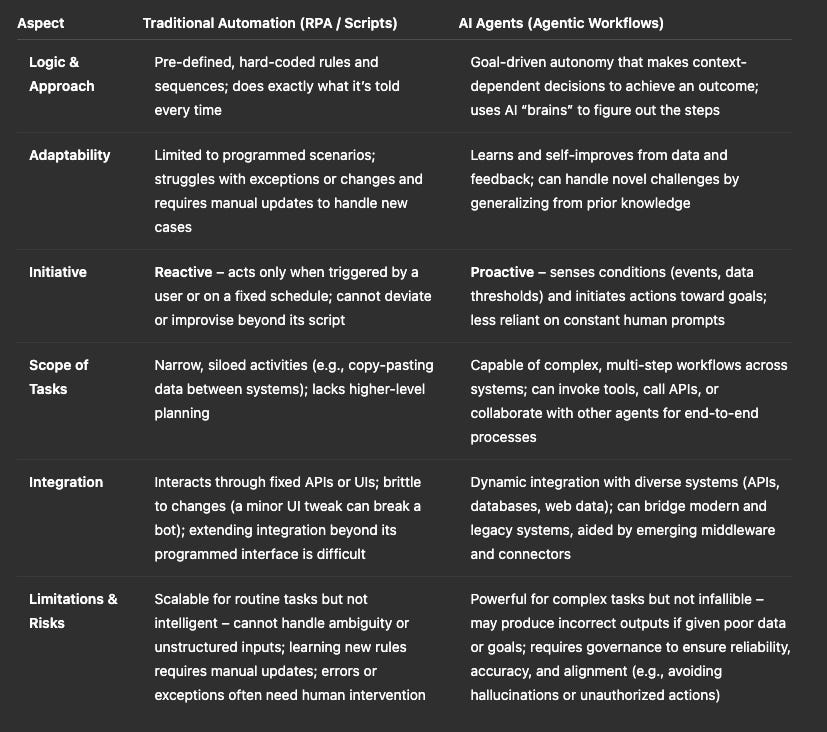

How AI Agents Differ from Traditional Automation: The table below summarizes key differences between classic rule-based automation and modern agentic AI workflows:

Table: Traditional Automation vs. AI Agentic Workflows. AI agents build on but fundamentally extend what previous automation could do – they introduce adaptive, cognitive capabilities into processes that were once strictly rule-bound.

Example: A traditional software bot might move customer data from a form into a database using fixed field mappings. An AI agent, by contrast, could accept an email from a customer in natural language, interpret the request (using NLP to extract intent and details), cross-check policies or past interactions, then update the database and trigger further actions (like sending a custom acknowledgment or creating a follow-up task) – all without a human explicitly programming each step.
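The contrast in this example can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the field mapping, and the contract policy are hypothetical, and the keyword-based `extract_intent` is a toy stand-in for the NLP step, which in a real agent would be handled by a language model.

```python
from dataclasses import dataclass, field

# Fixed mapping used by the traditional bot: form field -> database column.
FIELD_MAP = {"full_name": "name", "mail": "email"}


def bot_transfer(form: dict) -> dict:
    """Traditional automation: copy the mapped fields and ignore everything else."""
    return {column: form[field_name] for field_name, column in FIELD_MAP.items()}


def extract_intent(email_text: str) -> str:
    """Toy stand-in for the NLP step (a real agent would call a language model)."""
    text = email_text.lower()
    if "address" in text and ("moved" in text or "change" in text):
        return "update_address"
    if "cancel" in text:
        return "cancel_service"
    return "unknown"


@dataclass
class AgentResult:
    intent: str
    actions: list = field(default_factory=list)


def agent_handle(email_text: str, customer: dict) -> AgentResult:
    """Agent-style handling: interpret the request, check policy, choose actions."""
    result = AgentResult(intent=extract_intent(email_text))
    if result.intent == "update_address":
        result.actions += ["update_database", "send_acknowledgment"]
    elif result.intent == "cancel_service":
        # Hypothetical policy: cancellations under contract need a person.
        if customer.get("under_contract"):
            result.actions.append("create_follow_up_task")
        else:
            result.actions += ["update_database", "send_acknowledgment"]
    else:
        # Anything the agent cannot interpret goes to a human.
        result.actions.append("escalate_to_human")
    return result
```

The bot can only do what its mapping anticipates, while the agent-style handler selects actions based on the interpreted request and falls back to a human when it cannot interpret the message.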

Crucially, AI agents are designed for dynamic environments. Or in the words of my colleagues at PwC, these agents “adapt dynamically to changing environments, making hardcoded logic not just obsolete, but impossible”.

This unlocks new possibilities, but it also introduces new challenges in predictability and control. That is where agentic workflows come in: instead of going for full agentic automation, which could have terrible consequences, we choose carefully which parts of our processes we want to automate and where we want to remain in control. It’s a hybrid approach between fixed workflows and autonomous AI agency.

Current Limitations: Despite their promise, today’s AI agents have important limitations executives must recognize:

Reliance on Data and Tools: Agents are only as smart as the data and functions available to them. If an enterprise has fragmented, poor-quality data or lacks APIs for key systems, an AI agent’s effectiveness is curtailed. (Nearly 48% of IT leaders worry their data foundation isn’t ready for AI, reflecting this concern.)

Oversight & Judgment: These agents, no matter how advanced, do not possess true human judgment or accountability. They operate based on patterns and objectives we give them. As such, a “human-above-the-loop” approach remains essential. In sensitive domains like finance, agents should complement human expertise, not completely replace the critical thinking and ethical judgment that managers and domain experts provide.

Emergent Errors: Generative AI-based agents can sometimes produce incorrect or unexpected outputs (e.g. a hallucinated report or an overly aggressive action) without proper constraints. Without governance, an autonomous agent might inadvertently expose sensitive information or make decisions that conflict with policy. Rigorous testing, guardrails, and fail-safes (like requiring human approval for high-impact decisions) are needed to mitigate such risks.

Integration Challenges: Many legacy enterprise systems weren’t built with AI agents in mind. Limited APIs and security restrictions can prevent an agent from performing actions end-to-end. In fact, while a lot of enterprises had pilot projects for agentic AI in early 2025, only a few of them had fully deployed agents in production workflows – often due to integration, security, or infrastructure hurdles. We will discuss how to overcome these obstacles in the strategy section below.

In short, AI agents represent a new frontier beyond traditional automation – one of adaptive, context-aware automation. Understanding these capabilities and constraints is the first step in crafting an AI agent strategy. Next, let’s explore concrete use cases where agentic AI is already delivering value, especially in finance-related industries.

Use Cases and Success Stories

The financial sector has been an early proving ground for AI agents, given its data intensity and need for intelligent automation. Below we highlight several concrete use cases and success stories of AI agents in finance, banking, and tax – with an emphasis on measurable outcomes:

Tax Compliance and Processing: Professional services are leveraging AI agents to transform tax workflows. For example, PwC’s tax division reports that their AI-agent-powered system can prepare complex K-1 tax schedules in one day – a task that used to take nearly two weeks by human teams. These agents handle data extraction from various documents, apply up-to-date tax rules, and even learn from past filings. They also proactively scan for regulatory changes across jurisdictions, reducing the risk of non-compliance and alerting teams to new tax law updates in real time. The outcome is not just speed but improved accuracy and foresight – one PwC tax agent continuously analyzed global tax law changes to help a client avoid penalties, providing forward-looking insights that manual methods missed. In sum, AI agents are delivering more timely outputs, cost reductions, and strategic insights in tax operations.

Real-Time Fraud Detection and Risk Management: Autonomous AI agents excel at monitoring streams of transactions or trades, spotting anomalies, and acting faster than any human. In a card fraud context, the agents in a network can each take on a specialized role – one monitors user behavior patterns, another evaluates transaction risk in real time, and a third can automatically intervene (blocking a transaction or initiating a verification prompt) if fraud is suspected. For instance, a major credit card issuer deployed GenAI in its fraud department in early 2024 and saw immediate gains; these frameworks are now being upgraded to agentic workflows that further improve performance. The agents flag fraudulent charges within seconds and initiate automated customer notifications and card locks, reducing fraud losses. Sooner rather than later, such agents might be working together to provide continuous, adaptive protection – forecasting emerging fraud trends and coordinating responses across the enterprise. The key measurable outcome is agility: what used to take hours (or required customers to notice and report fraud) can now be handled near-instantly by agentic systems, saving money and bolstering customer trust.

Financial Planning and Analysis: In corporate finance and wealth management, AI agents serve as tireless analysts. They can consolidate financial data from disparate sources, perform analysis, and even generate narrative reports or recommendations. For example, an AI agent used by a global investment firm monitors economic news, market prices, and portfolio data continuously. It autonomously alerts human portfolio managers of potential rebalancing moves, sometimes even executing small trades within preset limits. Robo-advisor platforms already utilize such agentic logic – adjusting client portfolios in real-time as markets move, which has been shown to slightly improve returns and mitigate risk through faster reaction times (compared to quarterly manual adjustments). One wealth management firm reported that after introducing an AI agent to assist advisors, they could handle 30% more clients with the same staff, thanks to the agent automating routine analyses and client queries. These gains translate into tangible ROI via higher AUM (assets under management) per advisor and enhanced client retention (since the agent provides more timely, tailored advice).

Accounting and Finance Operations: In the realm of accounting, AI agents automate routine yet critical tasks – invoice processing, bookkeeping reconciliation, expense audits, financial forecasting, etc. These agents read invoices or receipts (using OCR and NLP), cross-verify entries with purchase orders and ledgers, and flag discrepancies. The result is faster book closing and fewer errors. In fact, AI agents have been shown to reduce human error in accounting and improve compliance by consistently applying controls. Companies leveraging agents for accounts payable and receivable processing have seen metrics like invoice cycle time cut in half and material improvements in working capital management because anomalies (like overdue invoices or payment errors) are caught and addressed promptly by the agent. A Big Four audit firm also noted that AI agents performing first-pass compliance reviews free up their human accountants to focus on complex judgment calls, effectively “augmenting” the workforce and increasing overall productivity.
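The cross-verification step described for accounts payable can be illustrated with a minimal sketch. It assumes the invoices have already been read into structured records (the OCR and NLP steps are out of scope here); the `Invoice` fields, the `reconcile` function, and the tolerance value are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    po_number: str
    amount: float


def reconcile(invoices, purchase_orders, tolerance=0.01):
    """Return (invoice_id, reason) pairs for invoices that need human review.

    purchase_orders maps a PO number to its approved amount.
    """
    flagged = []
    for inv in invoices:
        approved = purchase_orders.get(inv.po_number)
        if approved is None:
            flagged.append((inv.invoice_id, "no matching purchase order"))
        elif abs(inv.amount - approved) > tolerance:
            flagged.append((inv.invoice_id, "amount differs from purchase order"))
    return flagged
```

Invoices that match their purchase order pass through untouched; only the discrepancies are surfaced, which is what lets human accountants concentrate on the judgment calls.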

These examples scratch the surface, but they illustrate a common theme: AI agents drive value by accelerating processes, improving accuracy, and unlocking human capacity for higher-value work. In heavily regulated domains like finance and tax, it’s often essential to ensure that every transaction or document is processed with the same diligent rules (and any deviation can be logged and explained), which helps with auditability. It’s important to highlight that in each case, outcomes are measurable: whether it’s a 90% reduction in turnaround time, a percentage reduction in cost, or a boost in capacity and revenue, the ROI of well-deployed agents can be quite tangible.

Key Takeaway: In finance-related industries, AI agents aren’t science fiction – they are delivering results today. A Gartner survey found that 58% of finance functions were using AI in some form in 2024, up from 37% the year before. Those implementations range from AI chatbots in retail banking to autonomous auditing tools in tax. The success stories so far demonstrate not only efficiency gains but also new capabilities (e.g. real-time predictive insights) that give adopters a competitive edge.

However, capturing that edge requires understanding the broader competitive landscape. As more firms embrace agentic AI, simply having agents is not enough – the how and where you deploy them will differentiate leaders from laggards.

Industry Trends and Competitive Landscape in the Age of AI Agents

The rise of AI agents is reshaping competitive dynamics across sectors. Leading incumbents and startups alike are racing to integrate agentic AI, and several key trends have emerged:

Rapid Increase in Pilot Projects: Over the last two years, interest in AI agents has surged in the enterprise. Yet, moving from pilot to production has proven challenging for most companies. This indicates many organizations are experimenting, but only a few have scaled agents organization-wide. Those who crack the code of scaling (addressing integration and governance challenges) will have a head start in operational AI excellence.

Future Ubiquity in Applications: Analysts predict that agentic AI will become a standard part of software in the coming years. Projections published in 2024 stated that one-third of enterprise applications would include agentic AI capabilities by 2028, up from virtually none in 2024. Since then, this trend hasn’t slowed down; if anything, it has accelerated. In other words, AI agents (or “copilots”) will be embedded in many software tools workers use, from ERP systems to CRM platforms, automatically handling tasks in the background. Forward-thinking enterprises and software vendors are thus positioning now to build or host these agents, much like the early moves to cloud or mobile a decade ago.

High Stakes in Financial Services: Financial and banking firms are particularly aggressive in agent adoption. The financial sector is the second-largest consumer of AI (generative and agentic) after tech itself, and banks historically outspend other industries on new technology. We’re seeing big banks partner with AI providers and even develop proprietary agent frameworks. For example, Ally Bank built an internal platform (Ally.ai) to host multiple large language models and is now experimenting with autonomous agents as the “next level” of AI – moving from just using LLMs for information to using LAMs (“Large Action Models”) that can act on information directly. This notion of LAMs underscores a trend: AI is evolving from simply answering questions (chatbots) to taking actions (agents), and financial institutions want to harness that for competitive advantage (e.g. automating trade execution, or automatically adjusting pricing/credit terms in response to market changes).

Competitive Differentiator – ROI and Performance: Simply adopting AI agents isn’t a guarantee of success; how effectively a company deploys and governs them is becoming a key differentiator. A recent IBM study found that while many companies saw pilot AI projects with sky-high short-term ROI (30%+), those returns often leveled out to single digits when scaling up. In fact, CEOs report only ~25% of AI initiatives have delivered the expected ROI to date. However, the top 10% of organizations (the AI leaders) are achieving nearly 18% ROI and sustained growth in profits from AI. What sets them apart? These leaders treat AI (including agents) not as ad-hoc experiments but as strategic, enterprise-wide programs – reengineering core workflows and building strong supporting capabilities. In practice, that means investing in things like unified data platforms, talent upskilling, and robust governance (topics we will cover in the next section). The competitive landscape is thus bifurcating: those who integrate AI deeply into their operations vs. those doing piecemeal automation. The former are seeing compounding advantages – faster processes, better insights, happier customers – which translate to market share gains and cost leadership over time.

BigTech and Startups Fueling an Ecosystem: The tech industry is rapidly enabling the age of agents. In 2024, autonomous agents saw the biggest growth in VC deal activity, outpacing other hot areas like generative AI for customer support. Startups specializing in agentic AI (for example, companies that offer AI agents for IT support or sales prospecting) are attracting significant funding. At the same time, Big Tech players – Microsoft, Google, Amazon, Salesforce, IBM, etc. – are embedding agent capabilities into their clouds and enterprise software offerings. Microsoft has introduced “Copilots” across its Office and developer tools; Salesforce is piloting “Agentforce” to allow AI agents to operate on CRM data with proper guardrails. Anthropic, an AI startup backed by Google, has proposed a standard called Model Context Protocol (MCP) to help agents better interface with tools and APIs, and this is gaining support from industry leaders. The message is clear: a rich ecosystem is forming to accelerate agent adoption, and companies have a growing menu of platforms and partners to choose from. Those who engage early with this ecosystem – through partnerships, investments, or early adoption – can influence its direction and capitalize on the latest capabilities.

Evolving Regulatory and Ethical Standards: As agentic AI proliferates, regulators are taking note. The EU’s AI Act, which entered into force in August 2024, and various industry guidelines are beginning to address autonomous decision-making systems. Financial regulators, in particular, want assurances that AI-driven decisions (like loan approvals or trading moves) are explainable and fair. This external pressure means that companies positioning themselves as AI leaders need to also lead in compliance and ethics. Many large firms are already updating their AI governance policies to explicitly cover AI agents – ensuring, for instance, that an autonomous agent’s decisions can be audited and that humans can override or intervene when needed. We see competitive advantage here as well: organizations that cultivate trust (with customers, regulators, and the public) in their AI agent deployments will have smoother sailing and potentially a reputational edge. For example, one global bank publicly emphasized that all its AI agents operate under a “human-in-command” principle and underwent bias testing before launch, which helped persuade corporate clients to embrace its AI-driven services. Building this trust layer is becoming part of competing effectively with AI.

Bottom Line: The competitive landscape around AI agents is dynamic and fast-moving. Early movers in leveraging agentic AI – especially in data-rich, complex industries like finance – are already reaping benefits and reshaping customer expectations. But sustaining an edge requires more than enthusiasm; it demands a strategic approach to adoption. In the next section, we turn to practical strategies for piloting, scaling, and governing AI agents responsibly, so that your enterprise can navigate this landscape successfully.

Practical Adoption Strategies: Piloting, Scaling, Governance, and Risk Mitigation

Adopting AI agents in an enterprise is not a plug-and-play endeavor – it’s a journey that touches people, processes, and technology. Leaders must approach it deliberately, balancing innovation with oversight. Here we outline practical strategies, in a step-by-step fashion, to pilot and scale AI agents while managing risks:

1. Start with high-impact pilot projects (but plan for scale). Successful AI agent programs often begin with targeted pilots in areas that “move the needle”. Focus on workflows that are high-volume, repetitive, and pain points for your organization – where an agent’s efficiency gains would be clearly measurable (e.g. employee onboarding, level-1 IT support tickets, invoice processing). The goal of the pilot is twofold: demonstrate quick wins (e.g. a pilot agent cuts processing time by 50% or saves X hours of labor in a month) and gather learnings for scale. Define clear KPIs up front – cycle time, error rate, customer satisfaction, cost per transaction, etc. – so you can quantify the agent’s impact. However, don’t fall into the trap of scaling a pilot solely based on a single metric improvement (e.g. a 5% boost in one metric) without holistic evaluation. After the pilot, perform a rigorous cost-benefit analysis: what are the costs to implement the agent at enterprise scale (integration effort, training, maintenance) versus the projected benefits? Ensure the process is truly replicable and not a special-case scenario. Many companies find that a pilot’s context is controlled – scaling may introduce data variability or system conflicts that temper results. So, use pilots to learn and refine the approach. For instance, if an AI customer service agent pilot shows a 5% reduction in churn, dig deeper: did it handle all customer types? What failed cases were observed? Early piloting should also test the waters for user acceptance – e.g. do your employees trust the agent’s suggestions? Gathering feedback from users (employees or customers) is crucial at this stage. In short, start small, measure diligently, iterate – and only then scale fast into production.
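The post-pilot cost-benefit analysis can be reduced to simple arithmetic. The sketch below is one possible way to frame it, not a standard formula: the function name, its parameters, and every figure in the usage note are made-up illustrations, and it deliberately counts only labor savings against run and implementation costs.

```python
def pilot_business_case(hours_saved_per_month, hourly_cost,
                        annual_run_cost, one_time_cost):
    """Return first-year net benefit, steady-state net benefit, and payback time.

    All inputs are estimates taken from the pilot; the model ignores
    softer benefits such as error reduction or customer satisfaction.
    """
    annual_benefit = hours_saved_per_month * 12 * hourly_cost
    steady_state_net = annual_benefit - annual_run_cost
    first_year_net = steady_state_net - one_time_cost
    # Payback is only defined when the agent saves more than it costs to run.
    payback_months = (
        one_time_cost / (steady_state_net / 12) if steady_state_net > 0 else None
    )
    return {
        "first_year_net": first_year_net,
        "steady_state_net": steady_state_net,
        "payback_months": payback_months,
    }
```

With purely illustrative numbers, 400 hours saved per month at an hourly cost of 50, annual run costs of 60,000, and a one-time integration cost of 120,000, the agent nets 60,000 in the first year, 180,000 per year afterwards, and pays back in 8 months. If the run costs exceed the savings, the payback is undefined, which is itself a signal not to scale.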

2. Invest in robust data and integration infrastructure. One recurring lesson from early adopters is that organizational readiness (or lack thereof) is a bigger barrier than the AI tech itself. To successfully deploy AI agents, enterprises need to break down data silos and upgrade system integrations. Begin by assessing your data foundations: Are your core data (customer data, transaction records, knowledge bases, etc.) accessible and clean enough for an AI agent to use? Many organizations find they must first unify data platforms or implement data lakes/warehouses to give agents a 360° view of information. Security and access control are equally important – agents often need rights to read and write in multiple systems, which requires IT architecture work (single sign-on, API gateways, etc.). In practice, this might involve creating new APIs for legacy systems that an agent must interface with, or using middleware to connect an agent orchestrator to various enterprise software. Anthropic’s MCP (Model Context Protocol) has emerged as a framework to standardize how AI agents connect with tools and data, backed by major players like Microsoft and OpenAI. Keeping an eye on such standards can guide your integration strategy. On the compute side, ensure you have the infrastructure to run agents: they may require substantial processing power or cloud services (especially if running 24/7 or using large models). A company unprepared for the surge in API calls or GPU workloads could see latency or cost overruns. One global retailer discovered their AI inventory agent was making thousands of API calls to check stock levels, causing system strain; they had to redesign data caching and increase throughput capacity before wider rollout. The takeaway is clear – treat data and integration prep as phase zero of AI agent deployment. By strengthening data pipelines, cleaning data, and setting up flexible integration points, you set your agents up for success.
Remember, “AI agents are only as reliable as the data they act upon”, so make fortifying your data foundation a top priority.
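The retailer anecdote above points at a common remedy for agents that hammer a backend with repeated lookups: caching results for a short time. The sketch below shows a generic time-to-live cache; it is not a description of what that retailer built, and the class name, the `fetch` callback, and the 30-second default are assumptions made for illustration.

```python
import time


class TTLCache:
    """Cache the results of a lookup function for ttl_seconds per key."""

    def __init__(self, fetch, ttl_seconds=30.0, clock=time.monotonic):
        self._fetch = fetch          # function that performs the real API call
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the cache can be tested
        self._entries = {}           # key -> (expires_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]          # still fresh: no backend call
        value = self._fetch(key)     # missing or expired: call the backend once
        self._entries[key] = (now + self._ttl, value)
        return value
```

An agent that asks for the same stock level many times within the time-to-live window triggers a single backend call. The trade-off is staleness: the window has to be short enough that acting on a cached value is still safe for the process in question.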

3. Embed governance and risk management from day one. Because AI agents operate with autonomy, having strong governance is non-negotiable. Avoid viewing agent deployments as purely technical projects – involve your risk, compliance, and IT security teams early. Many organizations are now adapting their Responsible AI frameworks to cover agentic AI explicitly. This includes establishing policies on what decisions an AI agent is allowed to make independently versus where human approval is required. A practical step is to incorporate agents into your existing AI inventory or register – catalog each agent, its purpose, the data it accesses, and an assigned risk level. For instance, an agent handling sensitive customer data or executing financial transactions would be tagged as “high risk” and warrant more frequent reviews, whereas an agent summarizing meeting notes might be “low risk.” Align these tiers with governance: high-risk agents might require more stringent testing, documentation, and perhaps real-time monitoring or auditing of their actions. Technical controls are also essential. Implement safeguards like data anonymization or masking for any agent that touches personal or confidential data (so even if the agent’s AI model is in the cloud, it isn’t seeing raw sensitive info). Use data loss prevention (DLP) tools to monitor the agent’s outputs – for example, if an agent inadvertently tries to include a customer’s account number in an email, the DLP system could catch and redact it. Logging is your friend: ensure all agent decisions and actions are logged with time stamps and relevant context. These logs become invaluable for audits, debugging, and improving the agent over time. Another best practice is to establish a “human-in-the-loop” checkpoint for critical processes. You might configure an agent such that any transaction above $100,000 or any unusual recommendation (say, denying a VIP customer’s request) is routed to a human for sign-off. 
This not only mitigates risk but also builds trust with stakeholders that AI isn’t running wild. Finally, incorporate scenario testing and red-team exercises: deliberately stress test the agent with adversarial inputs or tricky cases to see how it copes and to shore up its responses. Governance is often seen as a brake, but in truth it’s what will enable you to scale AI agents confidently. By addressing privacy, security, and ethical risks up front, you clear a path for broader innovation without later fires to extinguish. As Gartner predicts, companies with robust AI governance in place will suffer far fewer incidents and enjoy smoother adoption than those that rush ahead without guardrails.
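Several of the controls described in this step (a risk-tiered agent register, a human sign-off threshold, output redaction, and action logging) are simple enough to sketch. The following is a minimal illustration under stated assumptions: the 100,000 threshold comes from the example in the text, while the account-number pattern, the two-tier scheme, and all names are hypothetical and would need to match your own policies and data formats. A regular expression is also a much cruder filter than a real DLP product.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

APPROVAL_THRESHOLD = 100_000  # sign-off limit from the example in the text
# Assumed format: account numbers are runs of 10 to 16 digits.
ACCOUNT_NUMBER = re.compile(r"\b\d{10,16}\b")


@dataclass
class AgentRecord:
    """One entry in the AI inventory / register."""
    name: str
    purpose: str
    data_accessed: tuple
    risk_tier: str


def classify(handles_sensitive_data: bool, executes_transactions: bool) -> str:
    """Assign a risk tier following the high/low examples in the text."""
    return "high" if handles_sensitive_data or executes_transactions else "low"


def route(amount: float, unusual: bool = False) -> str:
    """Send large or unusual decisions to a human, let the rest proceed."""
    if amount > APPROVAL_THRESHOLD or unusual:
        return "human_review"
    return "auto_approve"


def redact(text: str) -> str:
    """Mask anything that looks like an account number before it leaves the agent."""
    return ACCOUNT_NUMBER.sub("[REDACTED]", text)


def log_action(log: list, agent: str, action: str, context: dict) -> None:
    """Append a time-stamped record of what the agent did and why."""
    log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "context": context,
    })
```

Keeping the threshold and the patterns as named constants makes them easy for risk and compliance teams to review and change without touching the agent's logic.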

4. Prepare your people and processes for change. The introduction of AI agents will ripple through your organizational culture and workflows. It’s critical to manage this change proactively. Start by communicating a clear vision: why is the company deploying AI agents, and how will they benefit employees, customers, and the business? Employees may fear that “autonomous agents” equate to job loss or loss of control. Leadership should emphasize that these agents are tools to augment teams – taking over drudgery and empowering employees to focus on higher-value tasks. For example, when implementing an AI agent in IT support, one CIO framed it to the team as “freeing you from password reset tickets so you can spend more time on strategic infrastructure improvements.” Such framing can turn skepticism into cautious optimism. Training and upskilling are also vital. Employees interacting with or managing AI agents need to understand their workings and limitations. New roles like “AI agent supervisors” or “prompt engineers” may emerge – staff who specialize in monitoring agent performance, refining their prompts or objectives, and handling exceptions. Encourage some team members to become champions of the technology, and give them additional training on the AI platform or toolset. Additionally, consider process adjustments: workflows might need tweaks to incorporate agents smoothly. In a customer service center, for instance, you might change the escalation process – a chatbot agent tries to handle a query first, but the workflow must seamlessly hand off to a human agent if it cannot resolve the issue or if the customer is unhappy. Those handoff rules and fallback procedures need to be designed and communicated. Change management should address emotional and cultural hurdles too. As Google’s AI adoption research notes, organizations should cultivate a “culture of innovation” with tolerance for experimentation and learning from failure.
Employees should feel encouraged to provide feedback on the agents and suggest improvements or new use cases. One technique is to involve end-users in pilot design – for example, have your finance clerks co-create the requirements for an AI agent that will assist in invoice reconciliation, so they feel ownership. And always maintain transparency: if an AI agent interacts with customers or makes a recommendation to an employee, ensure it’s clear that it’s AI-generated (not human), and explain the logic when possible. This aligns with emerging AI ethics expectations and helps people trust the system. In summary, organizations that invest in people as much as technology – through communication, training, and an innovation-friendly culture – will adapt far more easily to having AI agents as part of the workforce.

5. Iterate, monitor, and scale up responsibly. After a successful pilot and initial deployments, scaling AI agents enterprise-wide should be a phased, iterative process. Avoid a big-bang rollout. Instead, gradually extend the agent’s reach to new departments or more complex tasks, while closely monitoring performance. Continuous monitoring is non-negotiable: set up dashboards to track the agent’s key metrics (throughput, accuracy, response times, error rates) and watch for drift or anomalies over time. AI agents may perform well on day one but could degrade if, say, underlying data changes or users find ways to ask things that confuse the model. Maintain a feedback loop where issues discovered in production trigger a review and improvement cycle for the agent. It’s wise to schedule periodic audits of each agent – e.g. quarterly reviews of its decisions, similar to how a manager would review an employee’s work, to ensure quality and compliance are holding up. On the technical side, keep agents updated: as AI models evolve (new versions of an LLM, for instance), evaluate if an upgrade can improve performance or reduce errors. Many companies treat their AI agents as “products” with version releases, rather than a one-and-done implementation. Scaling also means preparing for greater load and complexity. If you add 10 more processes for an agent to handle, does your infrastructure scale? Conduct capacity planning. Additionally, when scaling geographically or across business units, be mindful of differing requirements – an agent used in EU operations might need to adhere to GDPR data rules, for example, requiring localization of data handling. Incorporate regulatory developments into your scaling plans: keep an eye on new laws (like the EU AI Act’s classification of high-risk AI systems) that might impose requirements on your AI agent usage. It’s easier to bake compliance in from the start than to retrofit it. 
Finally, as you scale, continue building the business case and tracking ROI. What began as a single use-case pilot might evolve into dozens of agents contributing across the enterprise. Tally up the cumulative impact – e.g. hours saved annually, cost savings, revenue uplift from improved customer retention or faster go-to-market. These data points will help maintain executive and board support for further AI investments. They also allow you to refine strategy: you might find, for example, that agents deliver the best ROI in customer-facing processes, and thus decide to prioritize deploying more in that area versus internal processes (or vice versa). Avoid complacency – just because an agent is deployed, don’t assume it’s delivering optimum value forever. Just as you’d continuously improve workflows and retrain staff, your AI agents require ongoing optimization. In the end, scaling responsibly is about finding a sustainable, governed operating model for AI agents – one where they are ingrained in your business, continuously learning, and continuously overseen. Companies that achieve this will not only realize significant returns but also be able to trust AI as a core component of their operations.
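The continuous monitoring described in this step can start from a very small check: compare the agent's recent accuracy with the accuracy measured at launch and raise a flag when the gap grows too large. The sketch below is one simple way to do that; the function name, the 5-point maximum drop, and the 50-sample minimum are illustrative assumptions rather than recommended values.

```python
def detect_drift(baseline_accuracy, recent_outcomes, max_drop=0.05, min_samples=50):
    """Return True when recent accuracy has fallen too far below the baseline.

    recent_outcomes is a list of booleans, True meaning the agent's decision
    was judged correct (for example in a periodic audit sample).
    """
    if len(recent_outcomes) < min_samples:
        return False  # too little evidence to raise an alert
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return (baseline_accuracy - recent_accuracy) > max_drop
```

A check like this can feed the dashboard and trigger the review and improvement cycle; it does not explain why performance changed, so the logged decisions and the periodic audits remain necessary for diagnosis.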

By following these strategies – pilot smart, bolster data/tech foundations, govern tightly, ready your people, and iterate as you scale – enterprises can navigate the journey from initial AI agent experiments to wide-scale deployment. The reward is a new kind of organization: one that melds human creativity and judgment with machine efficiency and consistency. The concluding section considers how this vision translates into lasting competitive advantage.

Conclusion: Gaining Competitive Advantage with Agentic AI

AI agents represent a fundamental shift in enterprise technology – one where software moves from passive assistance to active, autonomous collaboration. For executives, leading an enterprise into this age of agentic AI is both a strategic opportunity and a leadership challenge. It requires vision to see the transformative potential, courage to invest and experiment, and diligence to govern and guide these powerful tools responsibly.

The payoff, however, is substantial. Organizations that harness AI agents effectively can achieve leaps in operational efficiency, decision quality, and innovation speed that were simply not possible with previous technologies. Imagine processes that run 24/7, continuously optimizing themselves, or personalized AI advisors that help every employee and customer make better decisions in real time. These are sources of competitive advantage that go beyond incremental improvement – they can redefine how business is done. A bank that deploys autonomous agents to detect fraud or assess credit risk in seconds will outperform one that relies on slower, manual processes; a tax advisory firm that uses AI agents to crunch complex regulations will win more clients with faster, more accurate insights compared to rivals still doing it the old way.

Yet, achieving this advantage comes down to execution. It’s fitting to recall that while 75% of companies have dabbled in AI, only a quarter have seen the ROI they expected. The leaders are those who treated AI (and specifically agentic AI) as a strategic capability to build, not just a shiny object. They integrated it into core business functions, invested in people and governance, and aligned it with their value proposition.

For C-suite readers: the time to act is now. The playing field is evolving fast. By 2030, autonomous AI agents will likely be at the core of many business operations. Your competitors – whether incumbent peers or digital-native upstarts – are not standing still. In fact, as noted, startups and Big Tech are actively pushing agentic AI into the mainstream, and fast movers in various industries are already accumulating learning and benefits. To avoid falling behind, you should begin (or accelerate) the journey: identify champion use cases, set up the needed infrastructure, and establish strong governance and cross-functional teams to drive adoption.

Equally important is the responsible deployment of AI agents. In an era of heightened scrutiny on AI’s ethical and societal impacts, business leaders must ensure that agentic AI is implemented with fairness, transparency, and accountability in mind. Doing so is not just about avoiding negative incidents; it builds trust. Customers and regulators will gravitate towards companies that can confidently explain and stand behind their AI-driven services. This trust becomes part of your brand reputation – a competitive asset in its own right.

As you lead your enterprise into the age of AI agents, keep a balanced perspective: combine bold ambition with prudent oversight. Encourage innovation – let teams reimagine processes with AI, pilot creative agent use cases – while also setting clear “guardrails” as discussed. Foster a culture where human expertise and AI agents work hand-in-hand, each enhancing the other. The most successful companies will be those where employees are not displaced by AI but elevated by it – freed from grunt work and equipped with AI-augmented insights to excel in their roles.

Finally, maintain a long-term view. Today’s AI agents are impressive, but tomorrow’s will be even more capable and ubiquitous. By investing in this area, you are not just solving today’s problems; you are building a foundation for continual adaptation and agility. In a business environment where change is the only constant, having autonomous, learning agents embedded in your enterprise can become a superpower – a force multiplier for your capacity to sense and respond to change.

In conclusion, the emergence of agentic AI is a defining moment for enterprise leaders. Those who embrace and responsibly deploy AI agents will be the ones to “lead in both innovation and resilience” in the years ahead. They will set the pace in their industries, leveraging AI not just for cost savings but to offer new value to customers and to reinvent how work gets done. The age of AI agents is dawning; with the right strategy, it can usher your enterprise into a new era of competitive advantage and growth.

"""

For instance, a major credit card issuer deployed AI agents in its fraud department and saw immediate gains: the agents flagged fraudulent charges within seconds and initiated automated customer notifications and card locks, reducing fraudulent losses by an estimated 20% in the pilot region.

"""

Is there a chance you could share a link to learn more about this case?

An AI agent that is able to process Visa's 1.7k transactions per second: that is something I would love to read about.

Feedback on this one? It was a bit of an experiment, but I felt like the topic was too important to not write about it.