State of AI Agents: Google Goes All-In on Agents

And OpenAI introduces long-term memory for ChatGPT

Welcome to State of AI Agents! Last week marked a decisive turning point for AI agents, with multiple tech giants unveiling ambitious agent strategies, frameworks, and deployments. Together, these moves signal a fundamental shift in how AI will be built into software and services going forward.

Let’s analyze what happened, what it means, and where this technology is headed next.

1. Big Updates from Big Tech

The past few weeks brought several major announcements that together signal that AI agents are rapidly moving from experimental concepts to core product strategies across the tech industry.

Let's examine the most significant developments:

Google's Comprehensive Agent Ecosystem

Google made its most ambitious agent-focused move yet, introducing a suite of tools specifically designed for agent development and deployment. At the center is the newly released Agent Development Kit (ADK), an open-source framework that provides standardized building blocks for creating and deploying AI agents.

The ADK addresses key challenges in agent development by offering components for prompt orchestration, memory systems, tool use, and multi-agent coordination. This reduces the complexity of building sophisticated agents that can reason, plan, and act across different environments.
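To make "standardized building blocks" concrete, here is a framework-agnostic Python sketch of three of the components mentioned above: a tool registry, a session memory, and a single orchestration step. It does not use the ADK's actual API. All names (`ToolRegistry`, `SessionMemory`, `run_turn`, `stub_choose_tool`) are hypothetical, and the "model" is a keyword-matching stub rather than an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ToolRegistry:
    """Hypothetical registry mapping tool names to plain Python callables."""
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register(self, name: str, fn: Callable[[str], str]) -> None:
        self.tools[name] = fn

    def call(self, name: str, arg: str) -> str:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        return self.tools[name](arg)


@dataclass
class SessionMemory:
    """Hypothetical short-term memory: an ordered list of (role, text) turns."""
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))


def stub_choose_tool(user_input: str) -> str:
    """Stand-in for the model's tool choice: a keyword match, not an LLM call."""
    return "weather" if "weather" in user_input.lower() else "echo"


def run_turn(user_input: str, registry: ToolRegistry, memory: SessionMemory) -> str:
    """One orchestration step: record input, pick a tool, call it, record the result."""
    memory.add("user", user_input)
    result = registry.call(stub_choose_tool(user_input), user_input)
    memory.add("agent", result)
    return result


registry = ToolRegistry()
registry.register("echo", lambda text: f"You said: {text}")
registry.register("weather", lambda text: "Sunny (stubbed data)")
memory = SessionMemory()

print(run_turn("What's the weather in Berlin?", registry, memory))  # → Sunny (stubbed data)
print(run_turn("Hello agent", registry, memory))                    # → You said: Hello agent
```

A real framework would replace `stub_choose_tool` with a model call and add planning and multi-agent coordination on top; the point here is only how the pieces plug together.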

Alongside the ADK, Google unveiled Agent2Agent (A2A), an open protocol for secure agent communication. A2A establishes standardized methods for agents to exchange messages and coordinate actions across platforms and applications, with support from over 50 enterprise partners including Atlassian, MongoDB, and Salesforce.
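Protocols like A2A are easiest to picture at the message level. A2A exchanges messages as JSON-RPC 2.0 over HTTP, so the Python sketch below builds such a request envelope locally and runs a minimal structural check on it; nothing is sent over the network. The method name `tasks/send`, the message layout (a `role` plus a list of `parts`), and the `task-001` identifier are assumptions loosely modeled on early public drafts of the spec and may not match the current version.

```python
import json
import uuid


def build_a2a_request(task_id: str, text: str) -> dict:
    """Build a JSON-RPC 2.0 envelope carrying one text message to a remote agent.

    The method name and message layout are illustrative assumptions,
    not a verified copy of the current A2A specification.
    """
    return {
        "jsonrpc": "2.0",             # version tag required by JSON-RPC 2.0
        "id": str(uuid.uuid4()),      # request ID used to match the response
        "method": "tasks/send",       # assumed A2A method name
        "params": {
            "id": task_id,            # hypothetical task identifier
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }


def is_valid_envelope(payload: str) -> bool:
    """Minimal structural check a receiving agent might run before dispatching."""
    msg = json.loads(payload)
    return (
        msg.get("jsonrpc") == "2.0"
        and isinstance(msg.get("method"), str)
        and "id" in msg
    )


request = build_a2a_request("task-001", "Find three flight options to Berlin.")
wire = json.dumps(request)      # what would be POSTed to the remote agent's endpoint
print(is_valid_envelope(wire))  # → True
```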

Google's Cloud AI chief described these developments as heralding an "age of inference": a new paradigm in which AI systems proactively deliver insights instead of only answering direct queries. This marks a fundamental shift in Google's AI strategy toward autonomous agents that can take the initiative.

The company also enhanced its Agentspace platform for enterprise environments, enabling employees to invoke AI agents directly from the Chrome browser. A no-code Agent Designer tool allows non-technical users to create custom agents, while pre-built agents for "Deep Research" and "Idea Generation" showcase the platform's capabilities out of the box.

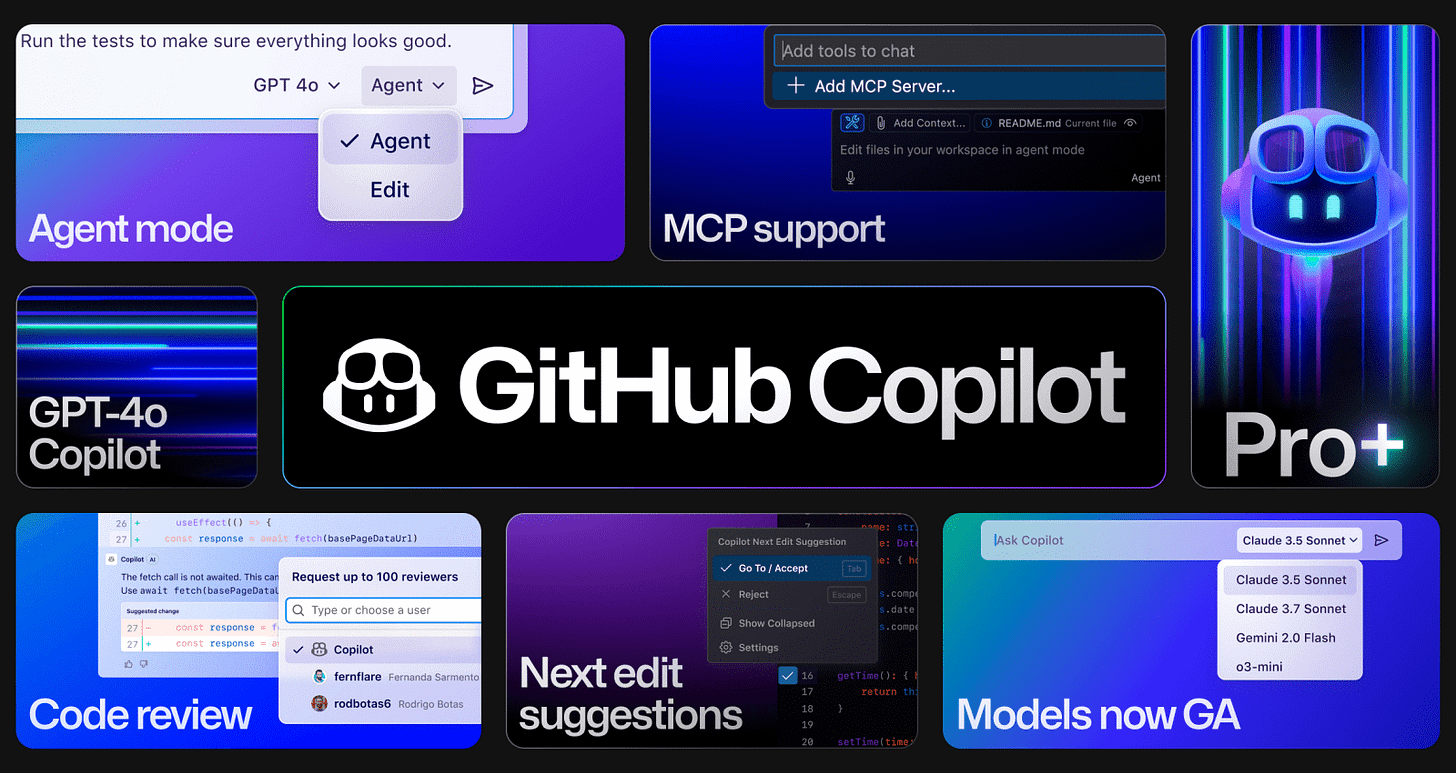

GitHub Copilot Transforms into an Autonomous Coding Agent

Microsoft finally released GitHub Copilot’s agent mode to the wider public. The feature had been in beta for quite a while, and an official release was long overdue given the fierce competition in this space. In agent mode, Copilot can autonomously work across an entire software project - from suggesting terminal commands and diagnosing errors to generating code and tests - all in pursuit of user-defined high-level goals.

The capabilities were demonstrated with a real-world example where Copilot was asked to "update a website for runners to sort races by different criteria." The agent:

Analyzed the existing codebase architecture

Identified the necessary components requiring modification

Updated backend logic to enable the sorting functionality

Revised UI components to expose the new sorting options

Generated unit tests to validate the changes

Returned control to the developer with the complete implementation
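The sequence above follows a familiar agent pattern: plan subtasks, execute them one by one, check each result, retry on failure, and hand control back. The Python sketch below illustrates that loop with stubbed steps. It is not how Copilot is implemented; the step names, the `max_attempts` retry limit, and the simulated failing test run are all hypothetical.

```python
from typing import Callable, List, Tuple

# A step receives the attempt number and returns (succeeded, note). All steps are stubs.
Step = Tuple[str, Callable[[int], Tuple[bool, str]]]


def analyze(attempt: int) -> Tuple[bool, str]:
    return True, "mapped codebase"


def update_backend(attempt: int) -> Tuple[bool, str]:
    return True, "added sort_by parameter"


def run_tests(attempt: int) -> Tuple[bool, str]:
    # Simulate a failing first run that the agent fixes and retries.
    if attempt == 1:
        return False, "1 test failed"
    return True, "tests pass"


def run_agent(plan: List[Step], max_attempts: int = 3) -> List[str]:
    """Execute each subtask in order, retrying failures, and return a log of what happened."""
    log: List[str] = []
    for name, step in plan:
        for attempt in range(1, max_attempts + 1):
            ok, note = step(attempt)
            log.append(f"{name} (attempt {attempt}): {note}")
            if ok:
                break
        else:
            # Retries exhausted: stop early and let the developer take over.
            log.append(f"{name}: giving up, returning control to developer")
            return log
    log.append("all subtasks done, returning control to developer")
    return log


plan: List[Step] = [
    ("analyze", analyze),
    ("update_backend", update_backend),
    ("run_tests", run_tests),
]
for line in run_agent(plan):
    print(line)
```

The `for ... else` clause runs only when a step never succeeds, which keeps the "give up and hand back" path separate from the normal completion path.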

As GitHub CEO Thomas Dohmke explained, "agent mode takes Copilot beyond answering a question, instead completing all necessary subtasks to ensure your primary goal is achieved."

For many GitHub Enterprise users, this update is likely good news: it could let them integrate coding agents more deeply into their projects and workflows without compromising on data security.

Microsoft's Ecosystem-Wide Agent Strategy

Beyond GitHub, Microsoft recently revealed broader plans to extend its AI assistant across its ecosystem, turning it from a utility tool into a personalized digital companion. The updated Copilot assistant will now maintain context about users' lives and preferences, learning about their projects, tastes, and even personal details to tailor interactions.

New capabilities rolling out include:

Deep Research: Conducting multi-step research tasks with persistent context

Actions: Executing tasks like booking tickets on the user's behalf

Pages: Organizing notes and content on an AI-curated canvas

Vision: Interpreting and responding to visual information

Microsoft described this evolution as creating "a new kind of relationship with technology" where AI systems understand users in the context of their lives and adapt accordingly.

OpenAI Implements Long-Term Memory for ChatGPT

Not to be outdone, OpenAI expanded ChatGPT's capabilities with a significant new feature: the ability to reference all past conversations with individual users. This effectively gives the AI a persistent memory, allowing it to build up context and personalization over time.

OpenAI CEO Sam Altman emphasized that this update "points at something we are excited about: AI systems that get to know you over your life, and become extremely useful and personalized." While users can opt out or have "off the record" conversations, the direction is clear: OpenAI envisions AI agents that continuously learn about individual users to better serve them.

This update blurs the line between a chatbot and an autonomous digital advisor by enabling the accumulation of knowledge about user needs, preferences, and habits over extended periods.
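OpenAI has not published how ChatGPT's memory works internally. As a rough mental model only, the Python sketch below stores facts per user, never writes anything from "off the record" conversations, and retrieves stored facts by simple word overlap so they can be prepended to a prompt. The `UserMemory` class, the `temporary` flag, and the overlap scoring are hypothetical; a production system would likely use something more robust, such as embedding-based search.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def _words(text: str) -> Set[str]:
    """Lowercased word set used for crude relevance matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class UserMemory:
    """Hypothetical per-user long-term memory store."""

    def __init__(self) -> None:
        self._facts: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str, temporary: bool = False) -> None:
        # "Off the record" (temporary) conversations are never written to memory.
        if not temporary:
            self._facts[user_id].append(fact)

    def recall(self, user_id: str, query: str, k: int = 2) -> List[str]:
        # Rank this user's facts by how many words they share with the query.
        q = _words(query)
        scored = [(len(q & _words(f)), f) for f in self._facts[user_id]]
        scored = [item for item in scored if item[0] > 0]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [f for _, f in scored[:k]]

    def build_prompt(self, user_id: str, query: str) -> str:
        # Prepend recalled facts so the model can personalize its answer.
        context = "\n".join(f"- {f}" for f in self.recall(user_id, query))
        if not context:
            return f"User: {query}"
        return f"Known about user:\n{context}\n\nUser: {query}"


mem = UserMemory()
mem.remember("alice", "Alice is training for a marathon in October.")
mem.remember("alice", "Alice prefers vegetarian recipes.")
mem.remember("alice", "Alice mentioned a secret project.", temporary=True)

print(mem.recall("alice", "Suggest vegetarian dinner recipes"))
# → ['Alice prefers vegetarian recipes.']
```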

2. Technical Infrastructure for the Agent Revolution

As AI agents move from research to production, the underlying technical infrastructure is evolving to support more sophisticated agentic systems. Let’s talk about several critical developments:

MCP in the Cloud - Connecting and Scaling Agents

The Model Context Protocol (MCP), an emerging standard for tool-using agents, received significant boosts from two announcements. First, Cloudflare launched a remote MCP server service that allows developers to host their MCP servers in the cloud. Previously, MCP servers (which let AI agents call external APIs and services) typically had to run locally, which limited production deployment. With Cloudflare's offering, agents can connect to web services through a scalable hosted endpoint without each developer maintaining their own infrastructure.
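Under the hood, MCP is built on JSON-RPC 2.0: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`. Hosting the server remotely changes the transport (HTTP instead of a local process), not these messages. The Python sketch below is a toy in-process dispatcher for those two methods only. It omits the protocol's initialization handshake, capability negotiation, and transport entirely, and the `get_forecast` tool is hypothetical.

```python
import json
from typing import Any, Dict

# Hypothetical tool exposed by the server, with a JSON Schema describing its input.
TOOLS = {
    "get_forecast": {
        "description": "Return a (stubbed) weather forecast for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
}


def get_forecast(city: str) -> str:
    return f"Forecast for {city}: sunny (stubbed data)"


def _error(rid: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}})


def handle(request_json: str) -> str:
    """Dispatch one JSON-RPC 2.0 request for the tools/list and tools/call methods."""
    req: Dict[str, Any] = json.loads(request_json)
    method, rid = req.get("method"), req.get("id")
    if method == "tools/list":
        result = {"tools": [{"name": n, **spec} for n, spec in TOOLS.items()]}
    elif method == "tools/call":
        name = req["params"]["name"]
        if name != "get_forecast":
            # -32602 is JSON-RPC's standard "Invalid params" code.
            return _error(rid, -32602, f"Unknown tool: {name}")
        text = get_forecast(**req["params"]["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is JSON-RPC's standard "Method not found" code.
        return _error(rid, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": rid, "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_forecast", "arguments": {"city": "Lisbon"}}}
print(json.loads(handle(json.dumps(call)))["result"]["content"][0]["text"])
# → Forecast for Lisbon: sunny (stubbed data)
```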