🗣️ - OpenAI Reaching for Omnipotence

GPT-4 may be omni now, but it's far from omnipotent

In this issue:

GPT-4o speaks for itself

Linking concepts across multiple documents

Turning scientific plots into code

1. Hello GPT-4o

Watching: GPT-4o (blog)

What problem does it solve? GPT-4o aims to make human-computer interaction more natural and efficient by accepting any combination of text, audio, image, and video as input and generating any combination of text, audio, and image as output. Previous approaches, like Voice Mode for ChatGPT, relied on separate models for transcription, text processing, and audio generation, resulting in information loss and increased latency. GPT-4o addresses these issues by processing all inputs and outputs within a single neural network, enabling faster response times and preserving important contextual information.

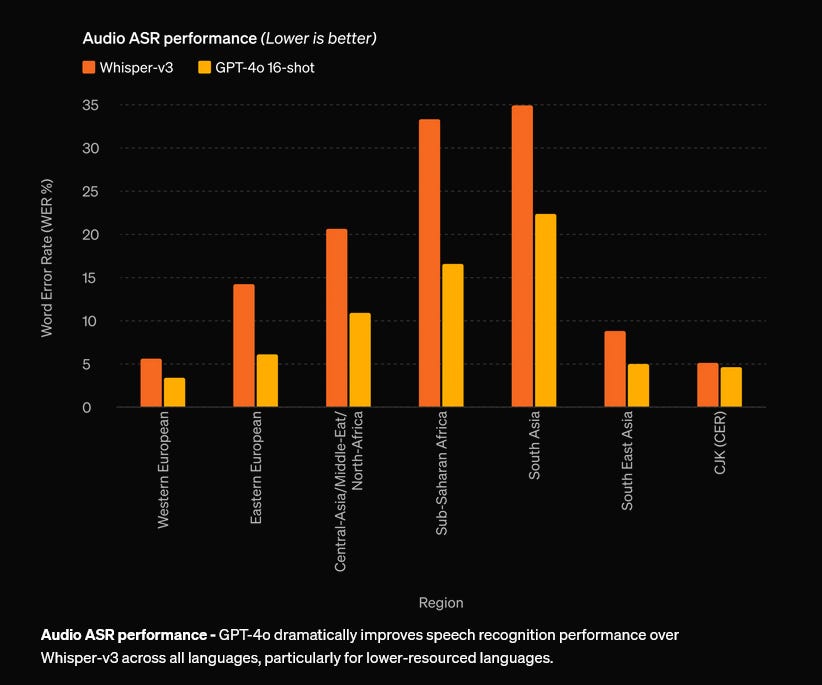

How does it solve the problem? GPT-4o is trained end-to-end across text, vision, and audio modalities, allowing the model to directly observe and process tone, multiple speakers, background noises, and other contextual information. This unified approach eliminates the need for separate models and pipelines, reducing latency and preserving information that can improve the quality of the model's responses. GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response times in conversation. Additionally, it matches GPT-4 Turbo's performance on English text and code while significantly improving performance on non-English languages, and it is faster and 50% cheaper in the API.
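To make the contrast concrete, here is a minimal Python sketch of why a cascaded voice pipeline loses information and accumulates latency. Everything in it is a made-up illustration: `AudioInput`, the two "view" functions, and the per-stage latencies are assumptions for the sake of the example, not OpenAI's architecture or measured numbers.

```python
from dataclasses import dataclass


@dataclass
class AudioInput:
    """Toy stand-in for a spoken utterance (hypothetical structure)."""
    words: str
    tone: str       # e.g. "sarcastic"; carried by the audio signal, not the words
    speakers: int   # number of distinct voices in the clip


def transcribe(audio: AudioInput) -> str:
    # A speech-to-text step keeps only the words; tone and speakers are dropped.
    return audio.words


def cascaded_view(audio: AudioInput) -> dict:
    # What the text model in a three-model pipeline gets to see.
    return {"words": transcribe(audio)}


def unified_view(audio: AudioInput) -> dict:
    # What a single end-to-end model can, in principle, observe.
    return {"words": audio.words, "tone": audio.tone, "speakers": audio.speakers}


# Hypothetical per-stage latencies in milliseconds (illustrative only).
CASCADE_STAGE_MS = {"speech_to_text": 400, "text_model": 1500, "text_to_speech": 600}


def cascade_latency_ms(stages: dict) -> int:
    # Stages run one after another, so their latencies add up.
    return sum(stages.values())


if __name__ == "__main__":
    clip = AudioInput(words="Great, another meeting.", tone="sarcastic", speakers=1)
    print(cascaded_view(clip))
    print(unified_view(clip))
    print(cascade_latency_ms(CASCADE_STAGE_MS), "ms for the cascade")
```

The point of the sketch is structural: whatever the transcription step discards can never be recovered downstream, and every extra stage adds its own delay.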

What's next? Researchers and developers will likely focus on new applications that leverage GPT-4o's multi-modal capabilities. The reduced latency and cost should also enable use cases that were previously too expensive to realize.

2. PromptLink: Leveraging Large Language Models for Cross-Source Biomedical Concept Linking

Watching: PromptLink (paper/code)

What problem does it solve? Biomedical concept linking, or aligning concepts across diverse data sources, is crucial for integrative analyses in the biomedical domain. However, this task is challenging due to the differences in naming conventions across various datasets. Traditional methods, such as string-matching rules, manually crafted thesauri, and machine learning models, are limited by their reliance on prior biomedical knowledge and struggle to generalize beyond the available rules, thesauri, or training samples.

How does it solve the problem? PromptLink uses Large Language Models (LLMs) to address the limitations of traditional biomedical concept linking methods. The framework has two main steps. First, a biomedical-specialized pre-trained language model generates a set of candidate concepts small enough to fit within the LLM's context window. Second, an LLM links concepts through two successive prompts. The first prompt elicits the LLM's rich biomedical prior knowledge for the concept linking task, while the second prompt encourages the LLM to reflect on its own predictions, enhancing their reliability.
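The retrieve-then-prompt flow described above can be sketched in a few lines of Python. This is a rough illustration under heavy assumptions, not the authors' implementation: string similarity from `difflib` stands in for the biomedical language model, the prompt wording is invented, and `llm` is a hypothetical callable that takes a prompt and returns text.

```python
from difflib import SequenceMatcher
from typing import Callable, List


def top_k_candidates(query: str, concepts: List[str], k: int = 3) -> List[str]:
    # Stand-in for candidate generation: the paper uses a biomedical
    # pre-trained language model; plain string similarity is used here
    # only to keep the sketch self-contained.
    def score(concept: str) -> float:
        return SequenceMatcher(None, query.lower(), concept.lower()).ratio()

    return sorted(concepts, key=score, reverse=True)[:k]


def first_stage_prompt(query: str, candidates: List[str]) -> str:
    # Hypothetical wording: ask the LLM to pick a match using its prior knowledge.
    options = "\n".join(f"- {c}" for c in candidates)
    return (
        f"Which of these concepts refers to the same thing as '{query}'?\n"
        f"{options}\nAnswer with one option, or 'none'."
    )


def second_stage_prompt(query: str, candidates: List[str], first_answer: str) -> str:
    # Hypothetical wording: ask the LLM to reflect on its earlier prediction.
    options = "\n".join(f"- {c}" for c in candidates)
    return (
        f"You previously linked '{query}' to '{first_answer}'.\n"
        f"Candidates were:\n{options}\n"
        "Re-check this choice and give your final answer, or 'none'."
    )


def link_concept(query: str, concepts: List[str],
                 llm: Callable[[str], str], k: int = 3) -> str:
    candidates = top_k_candidates(query, concepts, k)
    first = llm(first_stage_prompt(query, candidates)).strip()
    final = llm(second_stage_prompt(query, candidates, first)).strip()
    return final
```

To try it, pass any function that maps a prompt string to a response string as `llm`; a real deployment would wrap a model API call there and would likely need stricter parsing of the model's answer.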

What's next? PromptLink demonstrates the potential of LLMs in tackling complex biomedical NLP tasks. The framework's generic nature, without reliance on additional prior knowledge, context, or training data, makes it well-suited for linking concepts across various types of data sources. Future research could explore the application of PromptLink to other domains and investigate ways to further optimize the prompting process for improved performance and efficiency.

3. Plot2Code: A Comprehensive Benchmark for Evaluating Multi-modal Large Language Models in Code Generation from Scientific Plots

Watching: Plot2Code (paper/dataset)

What problem does it solve? While Multi-modal Large Language Models (MLLMs) have shown impressive performance in various visual contexts, their ability to generate executable code from visual figures has not been thoroughly evaluated. Existing benchmarks and datasets often lack the necessary diversity and quality to comprehensively assess the visual coding capabilities of MLLMs across different input modalities and plot types.

How does it solve the problem? Plot2Code addresses this issue by introducing a carefully curated visual coding benchmark. The dataset consists of 132 high-quality matplotlib plots spanning six plot types, sourced from publicly available matplotlib galleries. Each plot is accompanied by its corresponding source code and a descriptive instruction generated by GPT-4. This multi-modal approach allows for an extensive evaluation of MLLMs' code generation capabilities across various input modalities. Additionally, Plot2Code proposes three automatic evaluation metrics: code pass rate, text-match ratio, and GPT-4V overall rating, enabling a fine-grained assessment of the generated code and rendered images.
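Two of the three metrics are simple enough to sketch. The Python below is a simplified reading of "code pass rate" and "text-match ratio", assuming the first means the share of generated snippets that run without raising and the second means the share of reference text elements that reappear in the generated plot. The function names and the exact matching rule are assumptions, and the benchmark's own definitions may differ in detail.

```python
from typing import Iterable, List


def code_pass_rate(snippets: List[str]) -> float:
    """Fraction of generated snippets that compile and run without an exception.

    Note: exec() runs arbitrary code; a real harness should sandbox it.
    """
    if not snippets:
        return 0.0
    passed = 0
    for source in snippets:
        try:
            exec(compile(source, "<generated>", "exec"), {})
            passed += 1
        except Exception:
            # Syntax errors and runtime errors both count as a failed snippet.
            pass
    return passed / len(snippets)


def text_match_ratio(reference_texts: Iterable[str],
                     generated_texts: Iterable[str]) -> float:
    """Fraction of reference text elements (titles, axis labels, ...) found
    in the generated plot, compared case-insensitively (a simplification)."""
    reference = [t.strip().lower() for t in reference_texts]
    if not reference:
        return 1.0
    generated = {t.strip().lower() for t in generated_texts}
    hits = sum(1 for t in reference if t in generated)
    return hits / len(reference)
```

In practice the text elements would be extracted from the rendered matplotlib figures; here they are passed in as plain strings so the sketch stays dependency-free. The third metric, the GPT-4V overall rating, requires a model call and is not sketched.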

What's next? The evaluation results from Plot2Code reveal that most existing MLLMs struggle with visual coding for text-dense plots and heavily rely on textual instructions. Plot2Code serves as a valuable resource for the ML community, providing a standardized benchmark to guide the future development of MLLMs. By addressing the limitations exposed by Plot2Code, researchers can work towards improving the visual coding capabilities of MLLMs, enabling more effective code generation from visual inputs across a wide range of plot types and complexity levels.