Kimi K2: What It Is, How It Works, and Why You Should Care

Another open source lesson for the US and A

TL;DR: While major players like OpenAI, Anthropic, and Google dominate headlines with their closed-source offerings, a new model from Moonshot AI has appeared that challenges the status quo - and just like last time with DeepSeek-R1, completely out of the blue for most people in the West. Kimi K2’s “Open Agentic Intelligence” brings trillion-parameter scale capabilities to the open source community - with unprecedented efficiency.

In this overview, we'll explore the technical contributions behind Kimi K2, highlight its unique approach to agentic behavior, and understand why this release matters for the broader AI ecosystem.

What we'll cover in this article:

The mixture-of-experts architecture powering Kimi K2's efficiency

How Kimi K2 approaches long-context understanding differently

The role of reinforcement learning in creating agentic capabilities

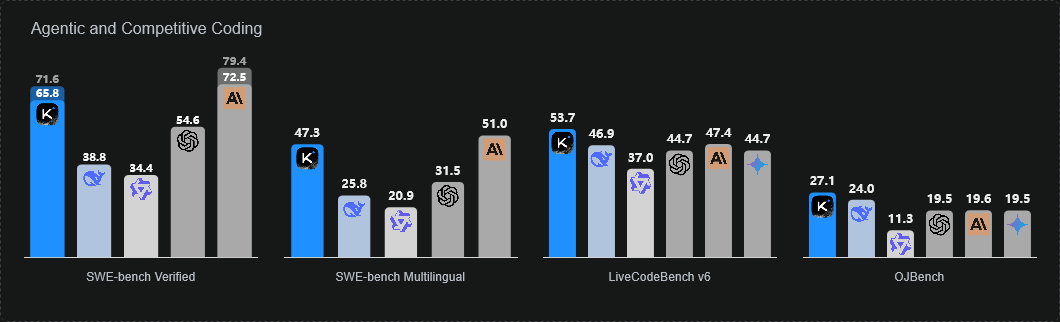

Benchmarking results and real-world performance implications

What this means for open-source AI development

Let's go!

Understanding Kimi K2's Architecture

The Mixture-of-Experts Foundation

At its core, Kimi K2 is built on a mixture-of-experts (MoE) architecture, featuring 32 billion activated parameters within a total model size of 1 trillion parameters. This design choice isn't arbitrary - it represents a careful balance between computational efficiency and model capability.

The MoE approach allows Kimi K2 to maintain the benefits of a massive parameter count while keeping inference costs manageable. During each forward pass, only a subset of the model's experts is activated for a given token, meaning that despite having a trillion parameters, the computational requirements remain closer to those of a 32-billion parameter dense model.
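To make the "only a subset of experts" idea concrete, here is a minimal sketch of generic top-k routing in plain Python. It is not Kimi K2's router: the expert count, the value of k, the toy scalar "experts", and the fixed router logits are all assumptions chosen for illustration (a real router computes its logits from each token's hidden state).

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Renormalize over the selected experts only, so the weights sum to 1.
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the outputs of the k selected experts; the rest never run."""
    out = 0.0
    for idx, weight in top_k_route(router_logits, k):
        out += weight * experts[idx](x)
    return out

# Toy setup (hypothetical): 8 "experts" that just scale their input.
NUM_EXPERTS = 8
experts = [lambda x, s=i: x * (s + 1) for i in range(NUM_EXPERTS)]
random.seed(0)
router_logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = top_k_route(router_logits, k=2)
y = moe_forward(1.0, experts, router_logits, k=2)
print("selected experts and weights:", selected)
print("output:", y)
```

Only two of the eight toy experts are evaluated per call, which is the whole point: compute scales with k, not with the total number of experts.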

What's interesting about Kimi K2's implementation is how it handles expert routing. Unlike some MoE models that struggle with load balancing or expert collapse (where certain experts dominate while others remain underutilized), Kimi K2 appears to have solved these challenges through careful architectural design.
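How Kimi K2 actually keeps its experts balanced isn't public at the time of writing. For intuition, here is a sketch of one widely used remedy from the literature, the auxiliary load-balancing loss popularized by the Switch Transformer, which multiplies the fraction of tokens sent to each expert by that expert's mean router probability. Treat it as a swapped-in example, not as Moonshot AI's method; the toy probability tables are made up.

```python
def load_balance_loss(router_probs):
    """Switch-style auxiliary loss for top-1 routing.

    router_probs: one list of per-expert probabilities per token.
    Returns num_experts * sum_i(frac_i * mean_prob_i), where frac_i is the
    fraction of tokens whose top expert is i. Uniform routing gives 1.0.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    counts = [0] * num_experts
    mean_prob = [0.0] * num_experts
    for probs in router_probs:
        winner = max(range(num_experts), key=lambda i: probs[i])
        counts[winner] += 1
        for i, p in enumerate(probs):
            mean_prob[i] += p / num_tokens
    frac = [c / num_tokens for c in counts]
    return num_experts * sum(f * p for f, p in zip(frac, mean_prob))

# Four tokens, four experts (hypothetical numbers).
balanced = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
collapsed = [[0.7, 0.1, 0.1, 0.1]] * 4  # every token picks expert 0

balanced_loss = load_balance_loss(balanced)
collapsed_loss = load_balance_loss(collapsed)
print(balanced_loss, collapsed_loss)
```

The collapsed case scores higher than the balanced one, so adding this term to the training loss nudges the router away from piling everything onto a single expert.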

Scaling Laws and Parameter Efficiency

The decision to build a trillion-parameter model reflects Moonshot AI's belief in continued scaling benefits. While some researchers have argued that we're approaching diminishing returns from simply making models larger, Kimi K2 demonstrates that with the right architecture, scale continues to unlock new capabilities. The 32:1000 ratio between activated and total parameters (only 3.2% of the weights are active per token) is particularly aggressive compared to other MoE models. For context, models like Mixtral use a more conservative ratio. This aggressive sparsity suggests that Moonshot AI has developed novel techniques for maintaining model quality while pushing the boundaries of parameter efficiency.
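The ratio is easier to appreciate as a percentage. The quick calculation below uses the 32 billion / 1 trillion figures from this article; the Mixtral 8x7B numbers (roughly 13 billion active out of about 47 billion total) are approximate publicly reported values added here for comparison, not figures from Moonshot AI.

```python
def active_fraction(active_billion, total_billion):
    """Share of parameters that participate in a single forward pass."""
    return active_billion / total_billion

kimi_k2 = active_fraction(32, 1000)      # figures from this article
mixtral_8x7b = active_fraction(13, 47)   # approximate, assumed for comparison

print(f"Kimi K2:      {kimi_k2:.1%} of parameters active per token")
print(f"Mixtral 8x7B: {mixtral_8x7b:.1%} of parameters active per token")
```

Under these assumptions, Kimi K2 activates a several times smaller share of its weights per token than Mixtral does.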

Core Capabilities and Focus Areas

Long-Context Understanding

One of Kimi K2's defining features is its emphasis on long-context processing. While the exact context window size isn't specified in the available information, the model's architecture suggests support for significantly longer sequences than typical transformer models.

Long-context capability isn't just about handling more tokens; it fundamentally changes what's possible with language models. Applications like document analysis, multi-turn conversations, and complex reasoning tasks all benefit from extended context windows. Kimi K2's approach appears to go beyond simple positional encoding tricks, likely incorporating architectural innovations that maintain coherence across extended sequences.

Code Generation and Understanding

Code generation has quickly become one of the most successful use cases for current LLMs, if not the most successful. Unsurprisingly, this is another area the creators of Kimi K2 have been focusing on. The model's training regime appears to have emphasized programming tasks, making it particularly suited for software development applications. This goes beyond generating syntactically correct code and extends to understanding programming concepts, debugging existing code, and reasoning about software architecture.

The emphasis on code is strategic. As AI systems become more integrated into development workflows, models that excel at programming tasks have outsized impact. Kimi K2's approach suggests a deep understanding of this trend, with specialized optimizations for code-related tasks.

Advanced Reasoning Capabilities

Like every other big release of the last twelve months, Kimi K2 has been designed with reasoning as a first-class concern. This goes beyond simple pattern matching or information retrieval - the model demonstrates an ability to work through complex problems step-by-step.

The reasoning capabilities appear to be closely tied to the model's agentic behavior. Rather than simply responding to prompts, Kimi K2 can break down complex tasks, identify sub-problems, and work through solutions methodically.

The Agentic Showcase: Kimi-Researcher

Beyond Static Response Generation

Kimi-Researcher (their version of Deep Research) combines all of K2’s strengths and serves as a showcase for the model’s capabilities. Built on an internal version of the Kimi K-series models, it autonomously performs an average of 23 reasoning steps and explores over 200 URLs per task.

The system has been trained using end-to-end agentic reinforcement learning, allowing it to develop advanced problem-solving strategies. The system can dynamically adjust its approach based on intermediate results, backtrack when necessary, and synthesize information from multiple sources.

The average of 23 reasoning steps per task mentioned above suggests that the model isn't just performing shallow searches but engaging in deep, recursive exploration of problem spaces on a regular basis. Each step builds on previous findings, creating a coherent research narrative.
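What "adjust, backtrack, synthesize" can look like in control-flow terms is sketched below. This is a deliberately tiny, hypothetical loop, not Kimi-Researcher's implementation: `research_loop`, `toy_search`, `toy_is_useful`, and the `TOY_WEB` table are all invented for illustration, and the step budget is an arbitrary parameter.

```python
def research_loop(question, search, is_useful, max_steps=10):
    """Toy multi-step research loop with backtracking.

    search(query)      -> (result, follow_up_queries)
    is_useful(result)  -> bool
    Useful results are kept and their follow-ups explored next; a dead end
    is dropped and the loop falls back to the most recent pending query.
    """
    findings = []
    pending = [question]  # stack of queries still to explore
    steps = 0
    while pending and steps < max_steps:
        query = pending.pop()
        steps += 1
        result, follow_ups = search(query)
        if is_useful(result):
            findings.append(result)
            pending.extend(follow_ups)  # dig deeper from this result
        # else: dead end, so the next pop() backtracks to an earlier branch
    return findings, steps

# Hypothetical stand-in for the web: query -> (result, follow-up queries).
TOY_WEB = {
    "q": ("overview", ["a", "b"]),
    "a": ("dead end", []),
    "b": ("detail", ["c"]),
    "c": ("source", []),
}

def toy_search(query):
    return TOY_WEB[query]

def toy_is_useful(result):
    return result != "dead end"

findings, steps = research_loop("q", toy_search, toy_is_useful)
print(findings, steps)
```

In a real system the search, the usefulness check, and the choice of follow-up queries would all be decisions made by the model itself, which is exactly what end-to-end reinforcement learning is meant to train.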

Reinforcement Learning Innovation

Rather than relying on supervised fine-tuning with human-generated examples, Kimi-Researcher learns through trial and error, developing its own strategies for effective research. One of the big challenges of this approach is tasks with non-verifiable rewards (such as research), which can partially be addressed by utilizing the LLM-as-a-judge paradigm - in this case, as a self-judging mechanism (image above).
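As a rough illustration of how a judge can turn a non-verifiable task into a scalar reward, here is a minimal sketch. Everything in it is hypothetical: the rubric criteria, the `judged_reward` helper, and especially `stub_judge`, which uses crude keyword checks where a real setup would prompt a language model (possibly the policy model itself) to grade the answer.

```python
def judged_reward(question, answer, judge, rubric):
    """Average the judge's 0-to-1 scores over all rubric criteria."""
    scores = [judge(question, answer, criterion) for criterion in rubric]
    return sum(scores) / len(scores)

def stub_judge(question, answer, criterion):
    """Stand-in for an LLM judge; a real judge would be a model call."""
    if criterion == "cites_sources":
        return 1.0 if "http" in answer else 0.0
    if criterion == "addresses_question":
        overlap = set(question.lower().split()) & set(answer.lower().split())
        return 1.0 if overlap else 0.0
    return 0.0

RUBRIC = ["cites_sources", "addresses_question"]  # hypothetical criteria
question = "How does MoE routing work?"

good = judged_reward(
    question,
    "MoE routing sends each token to a few experts, see http://example.com",
    stub_judge,
    RUBRIC,
)
bad = judged_reward(question, "I do not know", stub_judge, RUBRIC)
print(good, bad)
```

The resulting number can then be fed to the RL algorithm like any other reward. The "partially" in the paragraph above matters: a judge can be gamed, so judged rewards are usually combined with other signals.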

The use of end-to-end reinforcement learning for training agentic capabilities has been heavily underutilized so far - and we will see much more of this in the near future. This approach has several advantages. First, it allows the model to discover strategies that humans might not have considered. Second, it creates a more robust system that can adapt to novel situations. Finally, it provides a pathway for continuous improvement - the model can continue learning from its experiences even after initial training.

Speculative Technical Innovations Under the Hood

Architectural Optimizations

Described as "a symphony of innovations," Kimi K2 comes with multiple technical advances that enable its impressive performance. While specific implementation details aren't publicly available yet (paper coming soon, they say), the model's capabilities suggest several key innovations:

The MoE routing mechanism must be highly sophisticated to manage a 1000:32 parameter ratio effectively. This likely involves dynamic routing algorithms that can adapt to different types of inputs, ensuring optimal expert utilization across diverse tasks.

Memory efficiency is another important consideration. With a trillion parameters, even storing the model requires careful optimization. Kimi K2 likely employs advanced quantization techniques, sparse storage formats, or other compression methods to make deployment feasible.
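A back-of-the-envelope calculation shows why storage alone is a problem. The sketch below counts weight memory only (no activations, KV cache, or optimizer state) and uses decimal gigabytes; the precisions listed are common choices, not a statement about what Kimi K2 actually ships in.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to store the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9

TOTAL_PARAMS = 10**12        # 1 trillion, from the article
ACTIVE_PARAMS = 32 * 10**9   # 32 billion activated per token

for bits in (16, 8, 4):
    total_gb = weight_memory_gb(TOTAL_PARAMS, bits)
    active_gb = weight_memory_gb(ACTIVE_PARAMS, bits)
    print(f"{bits:>2}-bit: {total_gb:7.0f} GB total, {active_gb:5.0f} GB touched per token")
```

Note the asymmetry: sparsity reduces how many weights are used per token, but every expert still has to live somewhere, so the full trillion parameters must be stored regardless.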

Training Innovations

Training a trillion-parameter model presents unique challenges. The computational requirements alone are staggering, but beyond raw compute, issues like training stability, gradient flow, and data efficiency become critical at this scale.

Kimi K2's successful training suggests advancements in several areas. The model likely employs complex parallelization strategies, possibly combining data, model, and pipeline parallelism in novel ways. Training stability at this scale also requires careful attention to initialization, learning rate schedules, and gradient clipping strategies.
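Two of the stability tools named above are simple enough to sketch in a few lines: gradient clipping by global norm and a learning rate schedule with linear warmup followed by cosine decay. These are standard, generic recipes, shown here under assumed hyperparameters; nothing below is taken from Moonshot AI's training setup.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients so their joint L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads), norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm

def warmup_cosine_lr(step, peak_lr, warmup_steps, total_steps):
    """Linear warmup to peak_lr, then cosine decay towards zero."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * min(1.0, progress)))

# Hypothetical values for illustration.
clipped, original_norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print("norm before:", original_norm, "gradients after:", clipped)

for step in (0, 99, 100, 550, 1000):
    lr = warmup_cosine_lr(step, peak_lr=3e-4, warmup_steps=100, total_steps=1000)
    print(f"step {step:>4}: lr = {lr:.6f}")
```

Clipping caps the damage a single bad batch can do, while warmup avoids large updates before the optimizer statistics have settled. Both matter more as models get larger and a single divergence becomes more expensive.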

Inference Optimization

Beyond training, making a trillion-parameter model practical for real-world use also requires significant inference optimizations. The MoE architecture provides a good foundation, but additional techniques are likely employed to further reduce computational requirements.

These might include dynamic computation graphs that skip unnecessary calculations, advanced caching mechanisms to reuse intermediate results, or hardware-specific optimizations that take advantage of modern accelerator architectures.

Practical Considerations for Adoption

Deployment Strategies

For organizations considering Kimi K2 adoption, several deployment strategies might prove viable. The MoE architecture makes distributed deployment particularly attractive, with different experts potentially hosted on different machines. This could enable cost-effective scaling for production applications.
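The "different experts on different machines" idea (often called expert parallelism) boils down to a placement table plus a lookup. The sketch below uses a simple round-robin placement with made-up expert and machine counts; real serving stacks use more elaborate placement and communication schemes, and nothing here reflects an official Kimi K2 deployment recipe.

```python
def place_experts(num_experts, num_machines):
    """Round-robin placement: expert i lives on machine i % num_machines."""
    placement = {machine: [] for machine in range(num_machines)}
    for expert in range(num_experts):
        placement[expert % num_machines].append(expert)
    return placement

def machines_for_token(selected_experts, num_machines):
    """Machines a token must be sent to, given the experts its router picked."""
    return sorted({expert % num_machines for expert in selected_experts})

# Hypothetical cluster: 8 experts spread over 4 machines.
placement = place_experts(num_experts=8, num_machines=4)
targets = machines_for_token([1, 5, 6], num_machines=4)
print(placement)
print("token must visit machines:", targets)
```

Because each token only needs the machines hosting its selected experts, per-machine memory drops roughly in proportion to the number of machines, at the cost of network traffic for shipping tokens between them.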

Edge deployment presents another interesting possibility. While the full trillion-parameter model requires substantial resources, techniques like model distillation or expert pruning could create smaller versions suitable for edge devices while maintaining much of the original's capability.

For maximum benefit, organizations should consider how to leverage Kimi K2's specific strengths. Applications requiring long-context understanding, complex reasoning, or code generation are natural fits. The model's agentic capabilities also open new possibilities for autonomous systems that can handle complex, multi-step tasks with minimal human oversight.

Wrap Up

Kimi K2 is a clear statement that Chinese labs don’t intend to stop pushing out frontier open source LLMs. By combining massive scale with architectural innovation and a focus on practical capabilities, Moonshot AI has created a model that pushes the boundaries of what's possible while remaining accessible to the broader community.

Their emphasis on agentic behavior, demonstrated through Kimi-Researcher, points toward a future where everyday AI systems don't just respond to queries but actively engage with complex problems. The trillion-parameter scale, made practical through MoE architecture, shows that we haven't reached the limits of beneficial scaling.

And most importantly, Kimi K2's open-source release ensures that these advances benefit the wider community. In a field increasingly dominated by closed, commercial models, this commitment to openness is both refreshing and essential (even if one may argue that the real reasons for this aren’t altruistic at all). It enables researchers worldwide to build on these foundations, potentially accelerating progress in ways we can't yet imagine.

Kimi K2 stands as a blueprint for what comes after the reasoning race: execution-first AI that doesn't just think but acts. We’ve seen this trend manifesting for months already; Moonshot AI is just the first company to put things together in an efficient and publicly visible way.