🤔 Has OpenAI Lost Its Edge?

Claude-3.5 is faster, cheaper and more fun to use

Special announcement

Drum roll… here’s a first pilot of a potential audio version of this newsletter. Same topics, lighter tone.

If enough people are interested in this, I will replace the male AI voice with myself in future episodes.

In this issue:

Anthropic’s new flagship model

Q* is out and OpenAI has nothing to do with it

Please, we don’t need another Devin

1. Introducing Claude 3.5 Sonnet

Watching: Claude-3.5-sonnet (model card)

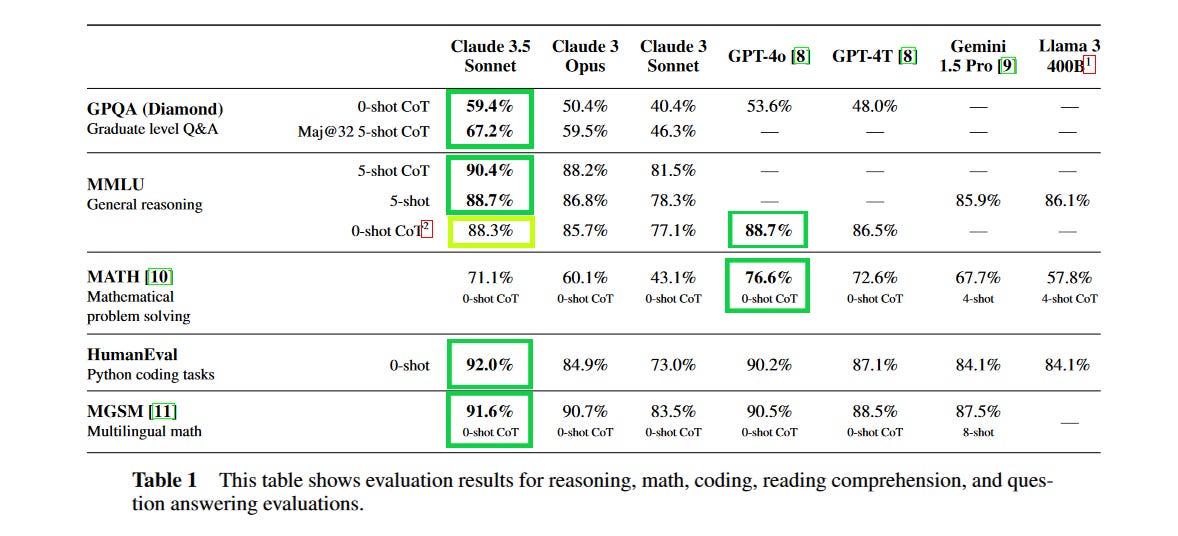

What problem does it solve? Claude 3.5 Sonnet, the latest iteration of Anthropic's AI assistant, addresses the need for more capable and efficient language models that can handle complex reasoning tasks, demonstrate broad knowledge, and excel in coding proficiency. It aims to provide a more nuanced understanding of language, humor, and intricate instructions while generating high-quality, relatable content. Additionally, it tackles the challenge of performing context-sensitive customer support and orchestrating multi-step workflows in a cost-effective manner.

How does it solve the problem? Anthropic hasn't disclosed the details, but Claude 3.5 Sonnet presumably owes its performance to a combination of architectural improvements and better training data. It runs at twice the speed of the previous flagship, Claude 3 Opus, while costing considerably less. When given the relevant tools, it can independently write, edit, and execute code with sophisticated reasoning and troubleshooting capabilities, likely thanks to training on a diverse range of coding problems. Its proficiency at code translation also makes it effective for updating legacy applications and migrating codebases.
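The pricing claim is easy to make concrete. The sketch below assumes the launch list prices (USD per million tokens: Claude 3 Opus $15 input / $75 output, Claude 3.5 Sonnet $3 input / $15 output); the workload numbers are purely hypothetical, and current prices may differ.

```python
# Launch list prices in USD per million tokens (June 2024).
# Assumption: check Anthropic's current pricing before relying on these.
PRICES = {
    "Claude 3 Opus": {"input": 15.00, "output": 75.00},
    "Claude 3.5 Sonnet": {"input": 3.00, "output": 15.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request with the given token counts."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 10,000 requests, each with a 2,000-token prompt
# and a 500-token answer.
N_REQUESTS, IN_TOK, OUT_TOK = 10_000, 2_000, 500
opus = N_REQUESTS * request_cost("Claude 3 Opus", IN_TOK, OUT_TOK)
sonnet = N_REQUESTS * request_cost("Claude 3.5 Sonnet", IN_TOK, OUT_TOK)
print(f"Opus: ${opus:,.2f}  Sonnet: ${sonnet:,.2f}  ratio: {opus / sonnet:.1f}x")
```

This prints $675.00 for Opus against $135.00 for Sonnet. Because both Sonnet prices are one fifth of Opus's, the ratio stays at 5x whatever the input/output mix.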

What's next? By setting new industry benchmarks across various domains, Claude 3.5 Sonnet opens up exciting possibilities for future applications. Since it's also much faster and cheaper than Opus, it might become viable for use cases where Opus was too slow and/or too expensive. My personal favorite, however, is the new Artifacts feature, which makes it much easier to iterate on (and, more generally, work with) structured model outputs. All structured outputs created by Claude 3.5 are saved as files and stored in the conversation history.

2. Q*: Improving Multi-step Reasoning for LLMs with Deliberative Planning

Watching: Q* (paper)

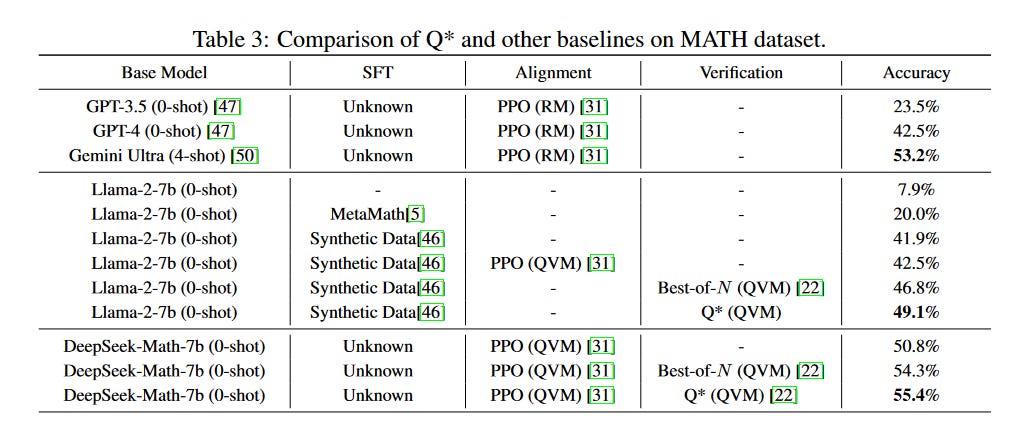

What problem does it solve? Large Language Models (LLMs) have shown remarkable performance across a wide range of natural language tasks. However, their auto-regressive generation process can lead to errors, hallucinations, and inconsistencies, especially when dealing with multi-step reasoning tasks. This pathology can limit the reliability and usefulness of LLMs in real-world applications that require accurate and coherent outputs.
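A back-of-the-envelope model (a simplification for illustration, not something taken from the Q* paper) shows why errors hurt most in multi-step tasks: if each of n reasoning steps is independently correct with probability p, the whole chain is correct only with probability

$$
P(\text{all } n \text{ steps correct}) = p^{n}, \qquad \text{e.g.}\quad 0.95^{10} \approx 0.60
$$

Real errors are correlated rather than independent, but this compounding effect is a common motivation for scoring each intermediate step instead of only the final answer.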

How does it solve the problem? The researchers introduce Q*, a general and versatile framework that guides the decoding process of LLMs using deliberative planning. Q* learns a plug-and-play Q-value model that serves as a heuristic function to select the most promising next step during generation. By doing so, Q* effectively steers LLMs towards more accurate and consistent outputs without the need for fine-tuning the entire model for each specific task. This approach avoids the significant computational overhead and potential performance degradation on other tasks that can occur with fine-tuning.
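To make "Q-value as a heuristic" concrete, here is a minimal best-first (A*-style) search over reasoning steps in the spirit of Q*. It ranks partial traces by f(s) = g(s) + λ·h(s), where g is the utility accumulated so far and h is the best Q-value among the proposed next steps. Everything in the toy part is a hypothetical stand-in: `propose_steps` plays the role of the LLM's candidate next steps, `q_value` replaces the learned Q-value model, and the "task" is simply reaching a target sum. This is a sketch under those assumptions, not the paper's implementation.

```python
import heapq
import itertools
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # a partial reasoning trace: the steps chosen so far


def q_guided_search(
    propose_steps: Callable[[State], List[int]],
    q_value: Callable[[State, int], float],
    utility: Callable[[State], float],
    is_terminal: Callable[[State], bool],
    lam: float = 1.0,
    max_expansions: int = 1_000,
) -> Optional[State]:
    """Best-first search ranking states by f(s) = g(s) + lam * h(s)."""

    def score(state: State) -> float:
        if is_terminal(state):
            return utility(state)  # h = 0 for finished traces
        candidates = propose_steps(state)
        if not candidates:
            return float("-inf")  # dead end: nothing left to try
        # Heuristic h(s): Q-value of the most promising proposed next step.
        h = max(q_value(state, a) for a in candidates)
        return utility(state) + lam * h

    tie = itertools.count()  # tie-breaker so the heap never compares states
    root: State = ()
    frontier = [(-score(root), next(tie), root)]  # heapq is a min-heap: negate f
    expansions = 0
    while frontier and expansions < max_expansions:
        _, _, state = heapq.heappop(frontier)
        if is_terminal(state):
            return state
        expansions += 1
        for step in propose_steps(state):
            child = state + (step,)
            heapq.heappush(frontier, (-score(child), next(tie), child))
    return None


# --- Hypothetical toy stand-ins: reach TARGET by adding small integers ---
TARGET = 10


def propose_steps(state: State) -> List[int]:
    # Stand-in for the LLM's top-K candidate next steps.
    return [1, 2, 3, 4] if sum(state) < TARGET else []


def q_value(state: State, step: int) -> float:
    # Stand-in for a learned Q-value model: landing closer to the target is better.
    return -abs(TARGET - (sum(state) + step)) / TARGET


def utility(state: State) -> float:
    # Small cost per step, plus a reward for hitting the target exactly.
    return -0.1 * len(state) + (1.0 if sum(state) == TARGET else 0.0)


def is_terminal(state: State) -> bool:
    return sum(state) >= TARGET


best = q_guided_search(propose_steps, q_value, utility, is_terminal)
print(best)
```

The search never fine-tunes anything: swapping in a different `q_value` changes which steps get explored, which mirrors the plug-and-play idea described above.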

What's next? The Q* framework has demonstrated its effectiveness on several benchmark datasets, including GSM8K, MATH, and MBPP. However, it would be interesting to see how well this approach generalizes to other types of tasks and domains beyond mathematical reasoning and programming. Additionally, future research could explore the integration of Q* with other techniques to further improve the quality and robustness of LLM outputs.

3. Code Droid: A Technical Report

Watching: Code Droid (report)

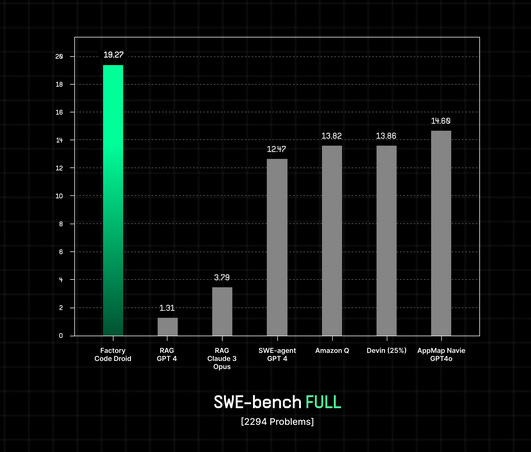

What problem does it solve? Software development is a complex and time-consuming process that requires skilled human developers. As software systems become increasingly large and intricate, the demand for developer resources continues to grow. Factory aims to address this challenge by creating autonomous systems called Droids that can accelerate software engineering velocity. These Droids are designed to model the cognitive processes of human developers, adapted to the scale and speed required in modern software development.

How does it solve the problem? Factory's way of building Droids (=Agents) involves an interdisciplinary approach that draws from research on human and machine decision-making, complex problem-solving, and learning from environments. The Code Droid, in particular, has demonstrated state-of-the-art performance on SWE-bench, a benchmark for evaluating software engineering capabilities. It achieved 19.27% on the full SWE-bench and 31.67% on the Lite version, a sign that it can handle a wide range of real-world software engineering tasks.
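To put the 19.27% and 31.67% scores in absolute terms, the snippet below converts them into numbers of resolved tasks. It assumes the scores were computed over the standard test splits, which contain 2,294 tasks (full SWE-bench) and 300 tasks (SWE-bench Lite); the report may have used a different evaluation setup.

```python
# Assumption: scores are over the standard test splits
# (2,294 tasks for full SWE-bench, 300 for SWE-bench Lite).
SPLITS = {
    "SWE-bench (full)": (19.27, 2_294),
    "SWE-bench Lite": (31.67, 300),
}


def implied_resolved(score_pct: float, n_tasks: int) -> int:
    """Number of resolved tasks implied by a percentage score."""
    return round(score_pct / 100 * n_tasks)


for name, (pct, n) in SPLITS.items():
    solved = implied_resolved(pct, n)
    print(f"{name}: ~{solved} of {n} tasks ({solved / n:.2%})")
```

Under that assumption, the scores correspond to roughly 442 of 2,294 and 95 of 300 tasks, and both counts round back to the reported percentages.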

What's next? As Factory continues to develop and refine its Droids, we can expect further improvements in their performance on software engineering tasks. The ultimate goal is to create autonomous systems that significantly accelerate software development, allowing human developers to focus on higher-level tasks and strategic decision-making. I just really, really hope this won't turn into another Devin-level letdown.

Papers of the Week:

RichRAG: Crafting Rich Responses for Multi-faceted Queries in Retrieval-Augmented Generation

Samba: Simple Hybrid State Space Models for Efficient Unlimited Context Language Modeling

DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence

VisualRWKV: Exploring Recurrent Neural Networks for Visual Language Models
