🤔 Cogito ergo sum()

Exploring the power of hallucinations

In this issue:

Learning from failures

Leveraging hallucinations to improve accuracy

LLMs for Neurosymbolic Reasoning

Want to support me going professional as a content creator? Pledge now for future additional content. Your pledge will help me plan ahead and improve my content.

1. ReFT: Reasoning with Reinforced Fine-Tuning

Watching: ReFT (paper)

What problem does it solve? Current fine-tuning approaches for LLMs often involve Chain-of-Thought (CoT) annotations to enhance reasoning capabilities. One of the limitations of this approach, especially noticeable in tasks like math problem solving, is the lack of strong generalization due to reliance on singular annotated reasoning paths per problem in the training data. This can lead to models that perform well on training data but fail to generalize to novel, untrained examples. Ensuring that LLMs learn from a diversity of reasoning paths is important for robust and flexible problem solving.

How does it solve the problem? Reinforced Fine-Tuning (ReFT) addresses this generalization challenge through a two-stage process. The method starts with Supervised Fine-Tuning (SFT) to give the model a strong initial understanding of the task. Following this, ReFT applies reinforcement learning, using the Proximal Policy Optimization (PPO) algorithm, to diversify the reasoning paths the model explores. Instead of being confined to the limited annotated paths, the model samples a variety of paths and receives feedback based on the correctness of the answers. This stochastic training method encourages the model to discover and learn from multiple pathways to problem-solving, which can greatly enhance its ability to generalize beyond the training data.

What’s next? The success of ReFT in math problem-solving sets the stage for broader application in other reasoning-heavy tasks. The ability of the model to learn from identical questions but through diverse reasoning routes is a notable stride toward generalizability, something that has been a formidable challenge in LLM training. Future research can look into adapting ReFT to other domains and integrating it with inference-time strategies like majority voting and re-ranking.

2. Large Language Models are Null-Shot Learners

Watching: Null-Shot Learning (paper)

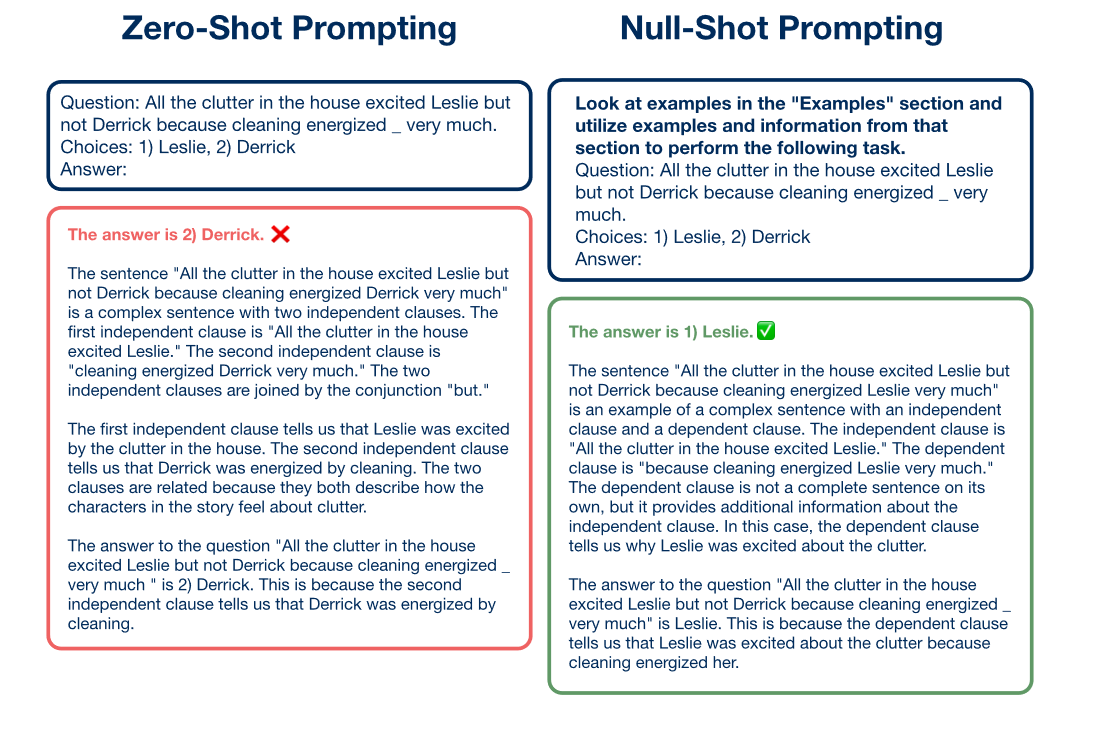

What problem does it solve? The phenomenon of hallucination, where Large Language Models (LLMs) generate factually incorrect or ungrounded information, has posed a challenge for zero-shot learning, where models need to perform tasks without fine-tuning on specific examples. Null-shot prompting adopts a counterintuitive strategy by embracing hallucination to improve LLM performance on tasks like reading comprehension and arithmetic reasoning, overcoming some of the limitations of zero-shot prompting.

How does it solve the problem? Null-shot prompting directs the LLM to reference a non-existent "Examples" section, which paradoxically encourages the model to draw on its internal knowledge to generate more accurate or relevant responses. This method banks on the model's capacity for imaginative synthesis, effectively tricking it into producing more useful information when approaching tasks it has not been finetuned for.

What’s next? Assessing the degree of hallucination inherent in different LLMs could become a new diagnostic tool, courtesy of null-shot prompting's unexpected utility. If the gains observed in preliminary studies hold up, it could usher in a subtle shift in how we approach LLM training and task performance evaluation. Additionally, integrating null-shot techniques with existing methodologies, especially to handle more complex reasoning tasks, could be a worthwhile strand of LLM development to explore, blurring the lines between perceived model weaknesses and potential strengths.

3. Large Language Models Are Neurosymbolic Reasoners

Watching: LLMs for Neurosymbolic Reasoning (paper)

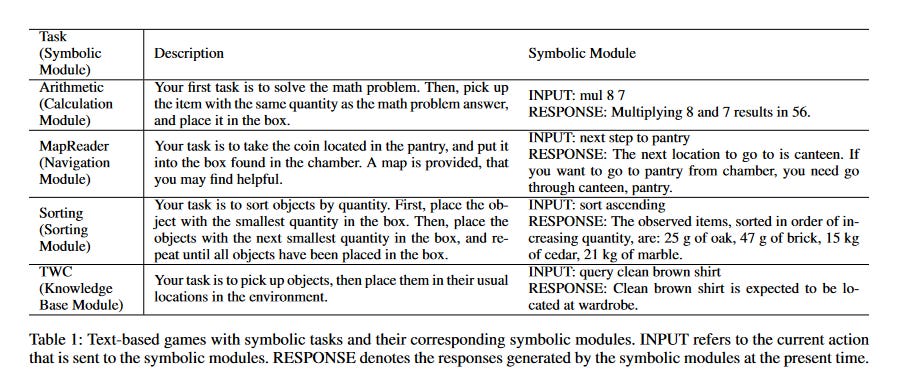

What problem does it solve? Symbolic reasoning, which involves understanding and manipulating symbols and structured concepts, is crucial for a range of applications including text-based games that serve as benchmarks for artificial intelligence. These games often present real challenges in areas such as mathematics, logic puzzles, and navigation, which require a combination of NLP and symbolic reasoning skills. Present-day Large Language Models (LLMs) are predominantly leveraging patterns found in data without explicit symbolic manipulation capabilities. The challenge lies in enhancing LLMs to effectively handle tasks that necessitate a strong capability for symbolic reasoning within text-based game environments.

How does it solve the problem? To address the challenge, the paper proposes tailoring an agent based on an LLM specifically for symbolic reasoning tasks. This is achieved by integrating a symbolic module that allows the LLM to process observations and valid actions from the game environments. By initializing the LLM agent with knowledge of its role and equipping it with this symbolic computational capacity, the agent can utilize both its natural language processing abilities and symbolic reasoning to select and perform actions that advance in-game objectives. This combination illustrates a move towards a more hybrid AI approach, where statistical learning and symbolic reasoning are orchestrated to handle complex tasks.

What’s next? The impressive 88% average performance across various tasks signals a promising pathway for the utilization of LLMs in symbolic reasoning applications. The next steps involve refining and scaling this methodology for more complex environments and exploring the agent's limitations and potential biases within these symbolic tasks. The future may involve extending such hybrid models beyond gaming to other real-world symbolic tasks, such as automated theorem proving, software program synthesis, or advanced knowledge representation.

Papers of the Week:

RAG vs Fine-tuning: Pipelines, Tradeoffs, and a Case Study on Agriculture

RecRanker: Instruction Tuning Large Language Model as Ranker for Top-k Recommendation

Generative Multi-Modal Knowledge Retrieval with Large Language Models

Anchor function: a type of benchmark functions for studying language models

DAPT: A Dual Attention Framework for Parameter-Efficient Continual Learning of Large Language Models