👁️🗨️ Attention Is All Graphs Need

Special edition with two bonus highlights and intense insights

In this issue:

Attention might be all you need - even for graphs

Mamba-2 is coming for multi-modal Transformers

A Survey on Prompt Engineering for NLP

Cost-effective Hallucination Detection

Continual Knowledge Learning in LLMs

As you may have noticed, this week’s issue contains two bonus highlights. In last week’s edition, I ran a poll asking how many highlight sections readers would like to see.

So here’s a special edition with 5 instead of 3 research highlights. Hope you enjoy it.

1. Masked Attention is All You Need for Graphs

Watching: MAG (paper)

What problem does it solve? Graph Neural Networks (GNNs) have become the go-to approach for learning on graph-structured data, thanks to their flexibility, efficiency, and good performance. However, designing powerful and general-purpose GNNs often requires significant research effort and relies on carefully handcrafted message passing operators. This can be time-consuming and may not yield the best solution for a given graph learning task.

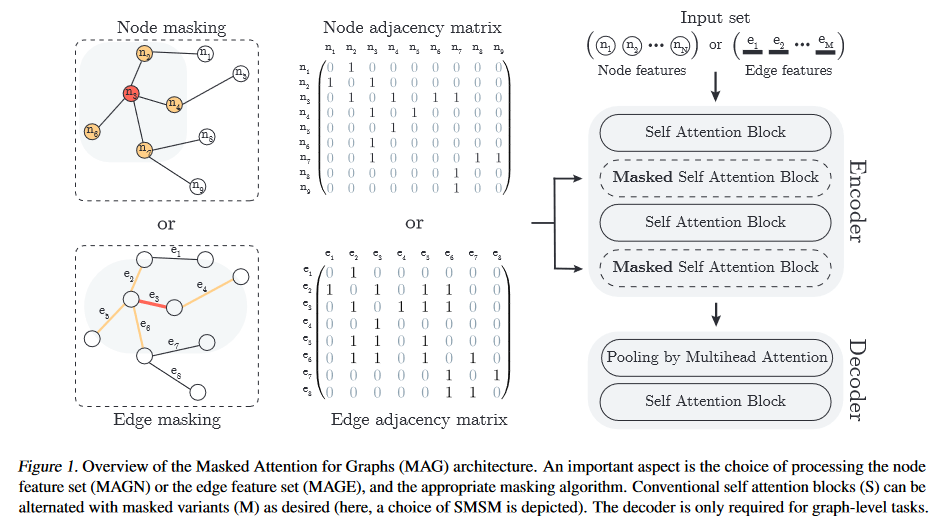

How does it solve the problem? Masked Attention for Graphs (MAG) offers a simple yet effective alternative to traditional GNNs. Instead of relying on complex message passing operators, MAG represents graphs as node or edge sets and enforces connectivity by masking the attention weight matrix. This creates custom attention patterns for each graph, allowing the model to learn from the graph structure directly. Despite its simplicity, MAG achieves state-of-the-art performance on long-range tasks and outperforms strong message passing baselines and more involved attention-based methods on over 55 node and graph-level tasks.
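
To make the masking idea concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: it assumes the mask is simply the adjacency matrix with self-loops, uses a single head, and skips the learned query/key/value projections. The names `masked_attention`, `features`, and `mask` are made up for illustration.

```python
import math

def masked_attention(features, mask):
    """Single-head self-attention over a node set, restricted by a graph mask.

    features: n node vectors, each a list of d floats
    mask:     n x n matrix of 0/1; mask[i][j] == 1 lets node i attend to node j
    Returns (outputs, weights). Learned query/key/value projections are omitted.
    """
    n, d = len(features), len(features[0])
    outputs, all_weights = [], []
    for i in range(n):
        # Scaled dot-product scores; masked-out pairs get -inf so the
        # softmax assigns them exactly zero weight.
        scores = [
            sum(a * b for a, b in zip(features[i], features[j])) / math.sqrt(d)
            if mask[i][j] else -math.inf
            for j in range(n)
        ]
        top = max(scores)  # finite as long as each row allows at least one entry
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        all_weights.append(weights)
        # Each output is the attention-weighted average of the allowed nodes.
        outputs.append([
            sum(w * features[j][k] for j, w in enumerate(weights))
            for k in range(d)
        ])
    return outputs, all_weights

# Path graph 0 - 1 - 2, with self-loops so that no row is fully masked.
mask = [[1, 1, 0],
        [1, 1, 1],
        [0, 1, 1]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = masked_attention(features, mask)
```

In this toy graph, nodes 0 and 2 are not connected, so they receive zero attention weight from each other, while node 1 attends to all three nodes. The graph structure enters only through the mask.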

What's next? The success of MAG opens up new possibilities for graph learning. Its sub-linear memory scaling in the number of nodes or edges enables learning on dense graphs and future-proofs the approach. Additionally, MAG demonstrates significantly better transfer learning capabilities compared to GNNs, which could be further explored and applied to various domains. It will be interesting to see how MAG and similar attention-based approaches can be extended and combined with other techniques to tackle even more complex graph learning challenges.

2. ML-Mamba: Efficient Multi-Modal Large Language Model Utilizing Mamba-2

Watching: ML-Mamba (paper/code)

What problem does it solve? Multimodal Large Language Models (MLLMs) have shown impressive capabilities in tasks that involve both language and vision. However, the Transformer architecture that most of these models are based on comes with significant computational overhead that scales quadratically with the input size. This makes it challenging to deploy MLLMs in real-world applications where inference speed and efficiency are critical.

How does it solve the problem? ML-Mamba addresses the computational challenges of MLLMs by leveraging the Mamba-2 model, which is known for its linear complexity and fast processing of long sequences. By replacing the Transformer-based backbone with a pre-trained Mamba-2 model, ML-Mamba achieves competitive performance on various multimodal benchmark tests while significantly reducing the inference time. The authors also explore methods for integrating 2D visual selective scanning mechanisms and experiment with different visual encoders and Mamba-2 model variants to optimize the model's performance.
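
For intuition on where the linear complexity comes from, here is a toy sketch of a state space recurrence with a scalar state. It is a deliberate simplification and not Mamba-2's actual algorithm, which works with larger states and a hardware-efficient formulation. The function name `ssm_scan` and its parameters are hypothetical.

```python
def ssm_scan(inputs, decay, in_gate, out_gate):
    """Toy scalar state space recurrence, processed in a single pass.

    h_t = decay_t * h_{t-1} + in_gate_t * x_t
    y_t = out_gate_t * h_t

    The per-step parameters stand in for input-dependent ("selective")
    coefficients; here they are simply passed in as lists.
    """
    state = 0.0
    outputs = []
    for x, a, b, c in zip(inputs, decay, in_gate, out_gate):
        # One constant-cost update per token: total work grows linearly
        # with sequence length, and the state size stays fixed.
        state = a * state + b * x
        outputs.append(c * state)
    return outputs

ys = ssm_scan(
    inputs=[1.0, 2.0, 3.0],
    decay=[0.5, 0.5, 0.5],
    in_gate=[1.0, 1.0, 1.0],
    out_gate=[1.0, 1.0, 1.0],
)
# ys == [1.0, 2.5, 4.25]
```

Each token touches only the running state, never the full history, which is the key contrast with attention comparing every token against every other token.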

What's next? The results of ML-Mamba highlight the potential of state space models, such as Mamba-2, in multimodal tasks. By achieving performance comparable to state-of-the-art methods like TinyLaVA and MobileVLM v2 while being more efficient, ML-Mamba opens up new possibilities for deploying MLLMs in real-world applications. As the field of multimodal learning continues to evolve, it will be interesting to see how other researchers build upon the ideas presented in this paper and further improve the efficiency and effectiveness of MLLMs.

3. A Survey of Prompt Engineering Methods in Large Language Models for Different NLP Tasks

Watching: Prompt Engineering (paper)

What problem does it solve? Prompt engineering has emerged as a powerful technique to extract knowledge from Large Language Models (LLMs) without the need for extensive fine-tuning or re-training. By crafting carefully designed natural language instructions, or prompts, users can elicit structured and relevant information from LLMs. This approach democratizes access to LLMs, enabling even those without a deep mathematical machine learning background to experiment with and leverage the capabilities of these models.

How does it solve the problem? Researchers have developed a wide range of prompting techniques tailored to specific Natural Language Processing (NLP) tasks. These techniques aim to improve the accuracy and relevance of the information extracted from LLMs. The survey paper summarizes 39 different prompting methods across 29 NLP tasks, providing a comprehensive overview of the current state-of-the-art. By categorizing these methods based on the NLP tasks they address and highlighting their performance on various datasets, the survey offers valuable insights into the effectiveness of different prompting strategies.
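
As a rough illustration of what a "prompting method" looks like in practice, the sketch below assembles prompts for two widely used patterns, few-shot prompting and chain-of-thought prompting. The helper `build_prompt` and its `Q:`/`A:` template are assumptions made for this example and are not taken from the survey.

```python
def build_prompt(question, examples=(), chain_of_thought=False):
    """Assemble a plain-text prompt.

    examples:         (question, answer) pairs shown before the real question
                      (few-shot prompting); leave empty for zero-shot
    chain_of_thought: if True, append a cue that asks for step-by-step reasoning
    """
    parts = [f"Q: {ex_q}\nA: {ex_a}" for ex_q, ex_a in examples]
    cue = " Let's think step by step." if chain_of_thought else ""
    parts.append(f"Q: {question}\nA:{cue}")
    return "\n\n".join(parts)

zero_shot = build_prompt("Is 'great movie!' positive or negative?")
few_shot = build_prompt(
    "Is 'great movie!' positive or negative?",
    examples=[("Is 'awful plot' positive or negative?", "negative")],
)
reasoning = build_prompt("What is 17 * 6?", chain_of_thought=True)
```

The same question can be wrapped in different ways without touching the model, which is why these methods are cheap to compare across tasks and datasets.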

What's next? As prompt engineering continues to gain popularity, we can expect further advancements and refinements in prompting techniques. Researchers will likely explore new ways to optimize prompts for specific NLP tasks, leveraging insights from the surveyed methods. Additionally, the development of more user-friendly tools and frameworks for prompt engineering could further democratize access to LLMs and enable a wider range of applications. On the other hand, more automated approaches to prompt engineering have been emerging lately (e.g., DSPy, TextGrad). It’s not yet clear how important manual prompt engineering will remain.

4. Cost-Effective Hallucination Detection for LLMs

Watching: Hallucination Detection (paper)

What problem does it solve? Hallucinations in Large Language Models (LLMs) are a significant challenge, particularly in production settings where reliability and consistency are crucial. Hallucinations can manifest as outputs that are unfaithful to the input, inconsistent with external facts, or internally inconsistent. This issue can lead to misinformation and erode trust in LLM-based systems. Detecting and mitigating hallucinations is essential for ensuring the trustworthiness and practical utility of LLMs in real-world applications.

How does it solve the problem? The proposed pipeline for hallucination detection consists of three key steps. First, a confidence score is generated to indicate the likelihood of a generated answer being a hallucination. Second, the score is calibrated based on attributes of the input and candidate response, ensuring that the score is context-aware and risk-sensitive. Finally, hallucination detection is performed by thresholding the calibrated score. The authors benchmark various state-of-the-art scoring methods across different datasets and LLMs, highlighting the importance of calibration for effective decision-making. They also introduce a multi-scoring framework that combines different scores to achieve top performance across all datasets, as well as a cost-effective variant that reduces computational overhead while maintaining high accuracy.
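
The three steps (score, calibrate, threshold) can be sketched as follows. This is a minimal sketch under stated assumptions: the raw scores are taken as given, the calibrator is a simple logistic model, and the coefficients are hand-picked placeholders. None of this is the authors' actual calibration method, and the names `calibrate` and `detect_hallucination` are hypothetical.

```python
import math

def calibrate(scores, attributes, weights, bias):
    """Map raw risk scores plus input/response attributes to a probability.

    scores:     one or more raw hallucination-risk scores (multi-scoring)
    attributes: extra features of the input and candidate response
    weights:    one coefficient per score and attribute, in that order
    Uses a logistic model as a stand-in calibrator (an assumption here).
    """
    features = list(scores) + list(attributes)
    if len(features) != len(weights):
        raise ValueError("need one weight per score and attribute")
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def detect_hallucination(scores, attributes, weights, bias, threshold=0.5):
    """Return (calibrated probability, flag) by thresholding the probability."""
    probability = calibrate(scores, attributes, weights, bias)
    return probability, probability >= threshold

# Hypothetical coefficients; in practice they would be fit on labeled data.
probability, flagged = detect_hallucination(
    scores=[0.9, 0.7],      # two raw scores from different scoring methods
    attributes=[0.2],       # e.g. a normalized response-length feature
    weights=[2.0, 1.5, 0.5],
    bias=-2.0,
)
```

Raising `threshold` makes the detector more conservative about flagging, which is one way to express how much risk a given application tolerates.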

What's next? The findings of this study have significant implications for the development and deployment of LLMs in production settings. The proposed multi-scoring framework and its cost-effective variant offer promising solutions for mitigating hallucinations and ensuring the reliability of LLM-based systems. Future research could explore the integration of these techniques into existing LLM architectures, as well as the development of more sophisticated scoring methods and calibration techniques.

5. Train-Attention: Meta-Learning Where to Focus in Continual Knowledge Learning

Watching: Train-Attention (paper)

What problem does it solve? Continual learning, the ability to incrementally acquire new knowledge over time, is a fundamental challenge in AI and machine learning. When neural networks, including Large Language Models (LLMs), are trained on new data, they often suffer from catastrophic forgetting - the tendency to rapidly lose previously learned knowledge. Existing approaches to mitigate this issue, such as regularization, architectural changes, and rehearsal methods, often inherit the inefficiencies of standard training procedures, leading to unnecessary parameter updates and increased forgetting.

How does it solve the problem? TAALM (Train-Attention-Augmented Language Model) addresses the challenges of continual knowledge learning (CKL) in LLMs by introducing a novel meta-learning framework. This approach dynamically predicts and applies weights to tokens based on their usefulness, allowing for more targeted knowledge updates and minimizing forgetting. By optimizing token importance predictions, TAALM enhances learning efficiency and effectively balances the acquisition of new knowledge with the retention of previously learned information. Additionally, the authors propose a new benchmark, LAMA-ckl, to better evaluate the trade-off between learning and retaining knowledge in CKL scenarios.
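
To show what "applying weights to tokens" means for the training objective, here is a minimal sketch of a token-weighted negative log-likelihood. In TAALM the weights are predicted by a meta-learned model; here they are hand-set placeholders, and the unnormalized sum is an assumption of this sketch. The function name `token_weighted_loss` is hypothetical.

```python
import math

def token_weighted_loss(token_probs, token_weights):
    """Weighted negative log-likelihood: sum_i w_i * (-log p_i).

    token_probs:   model probability assigned to each target token
    token_weights: importance weight per token (hand-set in this sketch)
    """
    if len(token_probs) != len(token_weights):
        raise ValueError("need one weight per token")
    return sum(-w * math.log(p) for p, w in zip(token_probs, token_weights))

probs = [0.5, 0.25, 0.9]     # model probability of each target token
uniform = [1.0, 1.0, 1.0]    # standard training: every token counts equally
focused = [0.0, 1.0, 0.0]    # hypothetical weights that single out one token

standard_loss = token_weighted_loss(probs, uniform)
focused_loss = token_weighted_loss(probs, focused)
```

With uniform weights this reduces to the usual summed language modeling loss. With focused weights, tokens deemed unimportant contribute nothing to the loss, so they trigger no parameter updates.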

What's next? The introduction of TAALM and the LAMA-ckl benchmark opens up new avenues for research in continual knowledge learning for LLMs. Future work could explore the integration of TAALM with other CKL approaches, as the authors have shown that their method is synergistically compatible with existing techniques. Furthermore, the meta-learning framework employed by TAALM could be extended to other domains beyond language modeling, potentially benefiting a wide range of applications that require continual learning capabilities.

Papers of the Week:

LLMExplainer: Large Language Model based Bayesian Inference for Graph Explanation Generation

Keep the Cost Down: A Review on Methods to Optimize LLM's KV-Cache Consumption

A Generic Review of Integrating Artificial Intelligence in Cognitive Behavioral Therapy

ByteCheckpoint: A Unified Checkpointing System for LLM Development

Concise Thoughts: Impact of Output Length on LLM Reasoning and Cost