In this issue:

Agents doing time series analysis

Seamless migration from LLMs to SLMs

Fitting your whole codebase into context

1. Agentic Retrieval-Augmented Generation for Time Series Analysis

Watching: Agentic RAG for TSA (paper)

What problem does it solve? Time series analysis is a challenging domain due to the complex spatio-temporal dependencies and distribution shifts that can occur when learning from historical context to predict task-specific outcomes. Traditional approaches often struggle to effectively capture these intricate patterns and adapt to new data, leading to suboptimal performance. The proposed agentic Retrieval-Augmented Generation (RAG) framework aims to address these limitations by leveraging a hierarchical, multi-agent architecture and specialized sub-agents.

How does it solve the problem? The proposed framework employs a master agent that orchestrates specialized sub-agents, each equipped with smaller, pre-trained language models (SLMs) fine-tuned for specific time series tasks. These sub-agents retrieve relevant prompts from a shared repository of prompt pools containing distilled knowledge about historical patterns and trends. By leveraging this retrieved knowledge, the sub-agents can improve their predictions on new data, effectively adapting to distribution shifts and capturing complex spatio-temporal dependencies. The modular, multi-agent design allows for flexibility and task-specific customization, enabling the framework to tackle a wide range of time series analysis challenges.

What's next? The agentic RAG approach has demonstrated state-of-the-art performance across major time series tasks, outperforming task-specific customized methods on benchmark datasets. This suggests that the framework has the potential to be applied to various real-world time series analysis problems, such as demand forecasting, anomaly detection, and predictive maintenance. Future research could explore the scalability of the approach to even larger and more complex time series datasets, as well as the integration of additional sub-agents with specialized knowledge for specific domains or industries. Whether this will actually be a robust and efficient approach will have to be validated in practice.

2. LlamaDuo: LLMOps Pipeline for Seamless Migration from Service LLMs to Small-Scale Local LLMs

Watching: LlamaDuo (paper/code)

What problem does it solve? While cloud-based Large Language Models (LLMs) have become increasingly popular, they come with a set of challenges. These include operational dependencies on the cloud provider, privacy concerns due to the need to send sensitive data to the cloud, and the requirement for continuous internet connectivity. LlamaDuo aims to address these issues by providing a pipeline for migrating knowledge and capabilities from cloud-based LLMs to smaller, locally manageable models.

How does it solve the problem? LlamaDuo involves a two-step process. First, a smaller language model is fine-tuned using a synthetic dataset generated by the cloud-based LLM. If the performance of this fine-tuned model is not satisfactory, it undergoes further fine-tuning using additional similar data created by the service LLM. This iterative process ensures that the smaller model can eventually match or even surpass the capabilities of the cloud-based LLM for specific downstream tasks. By enabling the migration of knowledge to a local model, LlamaDuo reduces operational dependencies, addresses privacy concerns, and allows for offline usage.

What's next? The LlamaDuo pipeline offers a promising solution for managing AI deployments in constrained environments, such as those with strict privacy policies or limited internet connectivity. Further research could focus on optimizing the iterative fine-tuning process to reduce the computational resources required and improve the efficiency of knowledge transfer from cloud-based LLMs to smaller, locally manageable models.

3. 100M Token Context Windows

Watching: HashHop (blog)

What problem does it solve? Current long-context evaluations for language models have subtle flaws that allow models to perform well without truly demonstrating the ability to store and retrieve information from ultra-long contexts. For example, the popular "Needle in a Haystack" evaluation places a random fact in the middle of a long context, but the unusual nature of this "needle" allows models to ignore otherwise relevant information. Some benchmarks even explicitly signal the location of the key information. These issues weaken the evaluations and don't adequately test models' long-term memory capabilities.

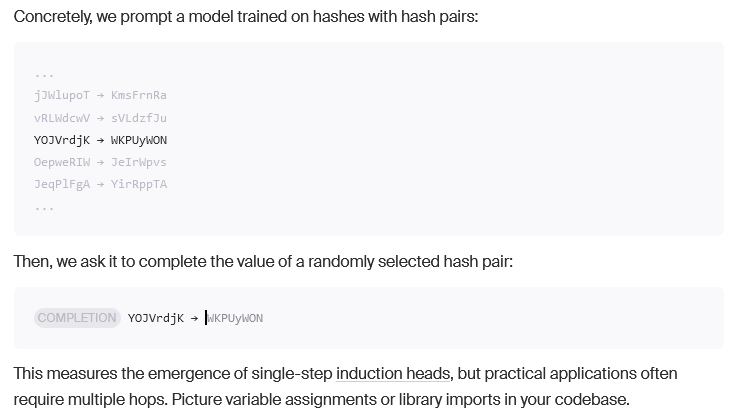

How does it solve the problem? To address the limitations of current long-context evaluations, the researchers propose a new benchmark called HashHop. HashHop uses random, incompressible hashes as the key-value pairs in the context, requiring models to store and retrieve the maximum possible information content. Models are prompted with hash pairs and asked to complete the value for a randomly selected hash. HashHop also incorporates multi-hop chains, where models must follow a sequence of hashes to arrive at the final value. This tests models' ability to perform complex reasoning over the entire context. The hash pairs are shuffled to ensure order- and position-invariance.

What's next? The researchers at Magic have trained their first 100M token context model, LTM-2-mini, which can handle contexts equivalent to 10 million lines of code or 750 novels. LTM-2-mini's sequence-dimension algorithm is significantly more efficient than the attention mechanism in large language models like Llama 3.1 405B. When trained on hashes with chain of thought, LTM-2-mini demonstrates strong performance on the HashHop benchmark, maintaining high recall even with 100M token contexts and multiple hops. The researchers also trained a prototype model on text-to-diff data, showing promising early results for code synthesis with ultra-long contexts. Further scaling and refinement of these LTM architectures could lead to breakthrough capabilities in software development and other domains that benefit from vast knowledge retrieval.

Papers of the Week:

DOMAINEVAL: An Auto-Constructed Benchmark for Multi-Domain Code Generation

Boosting Lossless Speculative Decoding via Feature Sampling and Partial Alignment Distillation

Artificial intelligence for science: The easy and hard problems

SciLitLLM: How to Adapt LLMs for Scientific Literature Understanding

Jina-ColBERT-v2: A General-Purpose Multilingual Late Interaction Retriever