🕵️ Agents Everywhere

Will agents become widely useful in 2024?

In this issue:

LLM Agents for smartphones

LLM Agents for literally everything

LLM inference going crazy on local machines

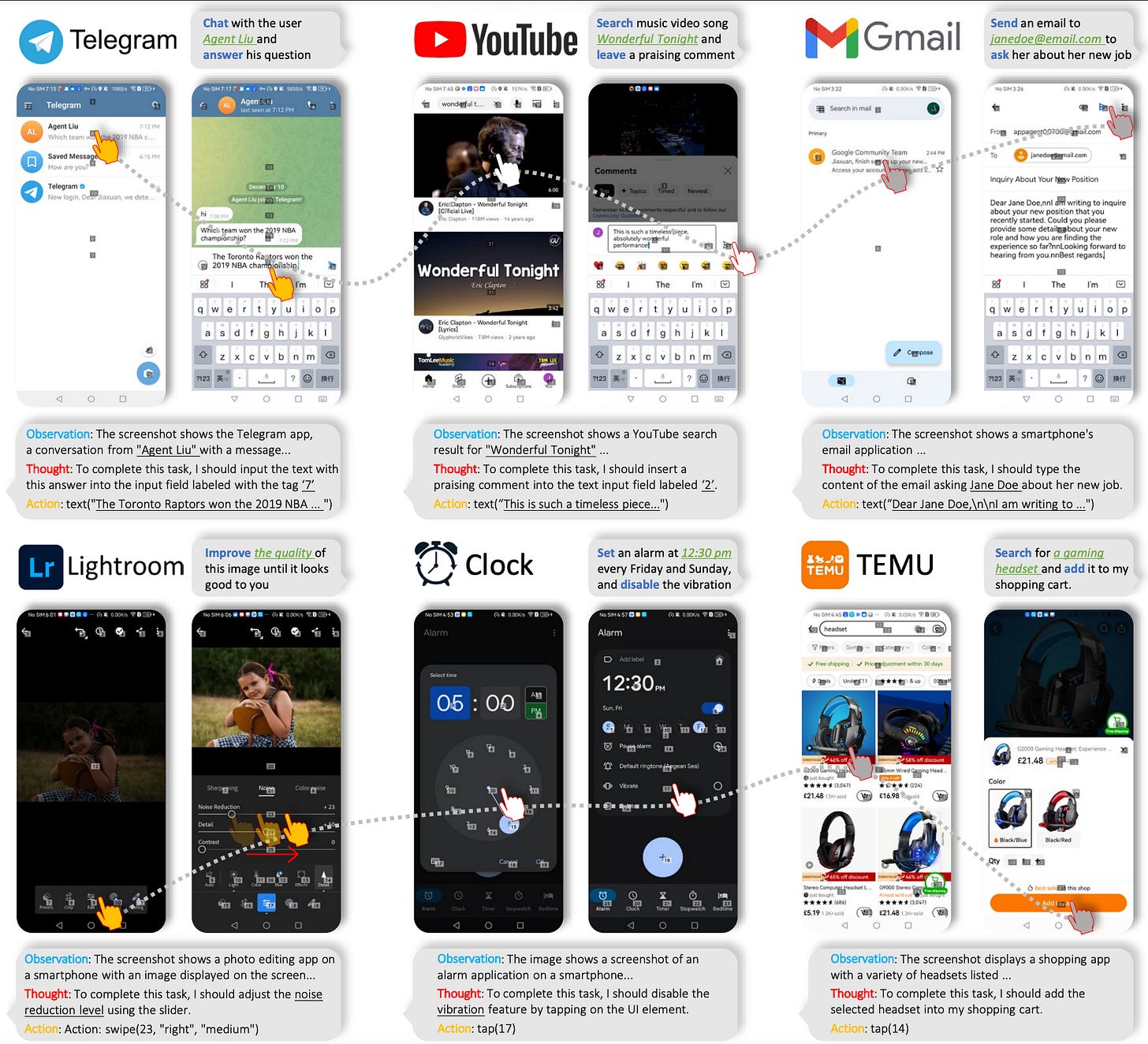

1. AppAgent: Multimodal Agents as Smartphone Users

Watching: AppAgent (paper/code)

What problem does it solve? The sheer number of smartphone apps, each with its own interface and functionality, makes it hard to automate interactions such as tapping, swiping, and navigating through menus. Traditional automation methods often require back-end access or app-specific programming, which does not scale. The paper addresses this by enabling an LLM-based multimodal agent to operate smartphone apps the way a human would, through the interface and without direct access to the system back end. This opens the door to automation across a much wider variety of apps.

How does it solve the problem? The proposed framework mimics human interaction patterns: the agent learns to operate apps either by exploring them autonomously or by observing human demonstrations. What it learns goes into a knowledge base that the agent consults when executing tasks across different applications. Because the agent acts within a simplified action space of human-like gestures, the framework does not need to be adapted to each specific app, which makes it compatible with the existing ecosystem of smartphone apps.
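To make the "simplified action space plus knowledge base" idea concrete, here is a minimal sketch. It is not AppAgent's code: the gesture names, the `KnowledgeBase` class, the prompt format, and the `tap(n)`-style reply syntax are all hypothetical, and the LLM call itself is left out. It shows how learned notes about UI elements can be folded into the prompt, and how a model reply is restricted to a small, app-independent set of gestures.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical gesture vocabulary, loosely modeled on what a human does on a
# touchscreen. This is an assumption, not AppAgent's actual interface.
ALLOWED_KINDS = {"tap", "long_press", "swipe", "text", "back"}


@dataclass(frozen=True)
class Action:
    kind: str                       # one of ALLOWED_KINDS
    element: Optional[int] = None   # numeric label of an on-screen element
    argument: Optional[str] = None  # swipe direction or text to type


@dataclass
class KnowledgeBase:
    # (app, element_key) -> note learned from exploration or a human demo
    notes: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def record(self, app: str, element_key: str, note: str) -> None:
        self.notes[(app, element_key)] = note

    def lookup(self, app: str, element_key: str) -> Optional[str]:
        return self.notes.get((app, element_key))


def build_prompt(task: str, app: str, screen: Dict[int, str], kb: KnowledgeBase) -> str:
    """Describe the current screen, enriched with whatever the agent has learned."""
    lines = [f"Task: {task}", "Visible elements:"]
    for label, key in sorted(screen.items()):
        note = kb.lookup(app, key) or "no notes yet"
        lines.append(f"  [{label}] {key}: {note}")
    lines.append('Reply with one action: tap(n), long_press(n), swipe(n, dir), text("..."), back()')
    return "\n".join(lines)


_ACTION_RE = re.compile(r"^\s*(\w+)\((.*)\)\s*$")


def parse_action(reply: str) -> Action:
    """Turn the model's reply into a validated Action, rejecting anything else."""
    match = _ACTION_RE.match(reply)
    if not match or match.group(1) not in ALLOWED_KINDS:
        raise ValueError(f"unrecognized action: {reply!r}")
    kind, args = match.group(1), match.group(2).strip()
    if kind == "back":
        return Action("back")
    if kind == "text":
        return Action("text", argument=args.strip('"'))
    parts = [p.strip() for p in args.split(",")]
    element = int(parts[0])
    direction = parts[1] if kind == "swipe" and len(parts) > 1 else None
    return Action(kind, element, direction)
```

For example, `parse_action("swipe(2, up)")` yields `Action(kind='swipe', element=2, argument='up')`. Because every app is driven through the same handful of gestures, adding a new app only means collecting new notes, not writing new integration code.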

What’s next? The agent proved effective on a range of high-level tasks across multiple smartphone apps. Follow-up research will likely test it more broadly, explore new use cases, and integrate it with assistive technologies, which could significantly change how people interact with their devices and improve accessibility for users with different needs.

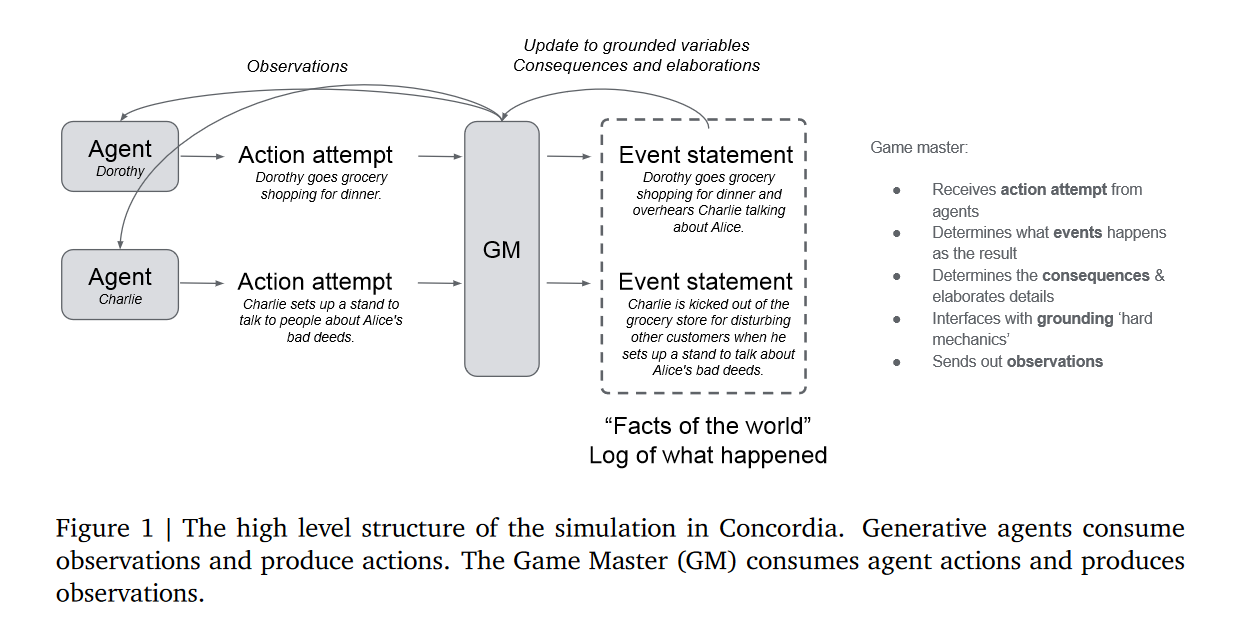

2. Generative agent-based modeling with actions grounded in physical, social, or digital space using Concordia

Watching: Concordia (paper/code)

What problem does it solve? Agent-based modeling (ABM) is a simulation technique, used across many disciplines, that models the actions of individual agents to study their effects on the system as a whole. The innovation here is coupling ABM with the cognitive and linguistic capabilities of Large Language Models (LLMs) to create Generative Agent-Based Models (GABMs). In a GABM, agents can reason, communicate, and interact with both physical and digital environments in a way that resembles human understanding and behavior. This approach could be transformative both for scientific research and for evaluating digital services through more realistic simulations.

How does it solve the problem? The Concordia library bridges ABMs and LLMs, making it easier to build GABMs in which agents act with human-like common sense and communicate in natural language. Both physical and digital environments can be simulated, with agents generating their behavior through LLM calls and associative memory. A special "Game Master" (GM) agent orchestrates the simulation: it translates the agents' natural-language actions into concrete effects in the simulated physical world, or into API calls in digital simulations. This design lets Concordia support complex, language-driven interactions and responses, significantly expanding what ABMs can model.
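The division of labor between agents and the Game Master can be sketched in a few lines. This is a toy illustration under stated assumptions, not Concordia's API: the `Agent` and `GameMaster` classes, the prompt wording, and the word-overlap "associative memory" are all hypothetical, and `llm` is any function that maps a prompt string to a reply string. The key point is that agents only *propose* actions in natural language, while the GM decides what actually happens and records it.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List

# Stand-in for an LLM call (hypothetical): prompt in, text out.
LLM = Callable[[str], str]


def _words(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class Agent:
    name: str
    llm: LLM
    memory: List[str] = field(default_factory=list)

    def recall(self, query: str, k: int = 3) -> List[str]:
        # Crude associative retrieval: rank memories by word overlap with the query.
        q = _words(query)
        ranked = sorted(self.memory, key=lambda m: len(q & _words(m)), reverse=True)
        return ranked[:k]

    def act(self, observation: str) -> str:
        context = "\n".join(self.recall(observation))
        prompt = f"You are {self.name}.\nMemories:\n{context}\nObservation: {observation}\nWhat do you do?"
        return self.llm(prompt)


@dataclass
class GameMaster:
    llm: LLM
    log: List[str] = field(default_factory=list)

    def resolve(self, agent: Agent, attempt: str) -> str:
        # The GM, not the agent, decides what actually happens. In a digital
        # simulation this is where an attempt could become an API call instead.
        recent = "\n".join(self.log[-5:])
        outcome = self.llm(f"World so far:\n{recent}\n{agent.name} attempts: {attempt}\nWhat happens?")
        self.log.append(outcome)
        return outcome

    def step(self, agents: List[Agent]) -> None:
        for agent in agents:
            observation = self.log[-1] if self.log else "The simulation begins."
            outcome = self.resolve(agent, agent.act(observation))
            agent.memory.append(outcome)  # each agent remembers what happened to it
```

Keeping outcome resolution in one place means the same agents can be dropped into a physical or a digital setting by swapping only the GM's logic.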

What’s next? Integrating LLMs into agent-based simulations opens an exciting frontier for both scientific exploration and practical applications. Concordia's ability to simulate complex interactions paves the way for advanced studies of behavior and decision-making, and for testing and improving digital services with synthetic user data. Further development could lead to simulations that better capture real-world complexity and, eventually, to better design and deployment of AI-driven applications and services.

3. PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU

Watching: PowerInfer (paper/code)

What problem does it solve? PowerInfer addresses the problem of running Large Language Model (LLM) inference efficiently on personal computers with consumer-grade GPUs. Large-scale models demand more GPU memory and compute than standard PC hardware provides, which typically restricts state-of-the-art LLMs to high-end servers.
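A quick back-of-the-envelope calculation shows why memory is the bottleneck. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts weights only, ignoring the KV cache and activations; the model sizes are illustrative examples rather than figures from the paper, and 24 GB is the memory of an RTX 4090.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """GB (10**9 bytes) needed just to store the weights."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: float, bytes_per_param: float, gpu_gb: float) -> bool:
    # Weights only: ignores the KV cache, activations, and framework overhead,
    # so True here is necessary but not sufficient in practice.
    return weight_memory_gb(num_params, bytes_per_param) <= gpu_gb


GPU_GB = 24  # e.g. an RTX 4090

if __name__ == "__main__":
    for params in (7e9, 40e9, 175e9):
        fp16 = weight_memory_gb(params, 2)  # 16-bit weights
        print(f"{params / 1e9:.0f}B params: {fp16:.0f} GB in FP16, fits: {fits_on_gpu(params, 2, GPU_GB)}")
```

A 7B-parameter model needs about 14 GB in FP16 and fits, while a 175B-parameter model needs about 350 GB, more than ten times what a single consumer card offers.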

How does it solve the problem? PowerInfer builds on the observation that neuron activations during LLM inference follow a power-law distribution: a small subset of "hot" neurons is consistently active across inputs, while the majority, the "cold" neurons, fire depending on the specific input. It exploits this with a hybrid GPU-CPU approach. Hot neurons are preloaded onto the GPU to take advantage of its speed, while cold neurons are computed on the CPU. This split reduces the load on GPU memory and minimizes data transfer between GPU and CPU, and adaptive predictors and neuron-aware sparse operators further improve computational efficiency.
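The hot/cold split can be illustrated on a single ReLU feed-forward layer. This is a toy sketch of the idea, not PowerInfer's implementation, and several simplifications are assumptions: the GPU budget is counted in neurons, placement simply takes the most frequently activated neurons from a profiling run, and the predictor is an "oracle" that peeks at the true pre-activations (PowerInfer uses learned predictors instead). Everything runs in plain Python; the "GPU" and "CPU" labels only mark which partial sum each device would compute.

```python
from typing import Callable, Dict, List, Set, Tuple

Vector = List[float]
Matrix = List[Vector]


def partition_neurons(activation_counts: Dict[int, int], gpu_budget: int) -> Tuple[Set[int], Set[int]]:
    """Rank neurons by how often they fired during profiling; the top ones are 'hot'."""
    ranked = sorted(activation_counts, key=lambda n: activation_counts[n], reverse=True)
    return set(ranked[:gpu_budget]), set(ranked[gpu_budget:])


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def partial_ffn(neurons: Set[int], active: Set[int], w_in: Matrix, w_out: Matrix, x: Vector) -> Vector:
    """Sum the contributions of the given neurons, skipping those predicted inactive."""
    out = [0.0] * len(w_out[0])
    for n in sorted(neurons & active):
        a = max(0.0, dot(w_in[n], x))  # ReLU activation of neuron n
        for j, w in enumerate(w_out[n]):
            out[j] += a * w
    return out


def hybrid_ffn(x: Vector, w_in: Matrix, w_out: Matrix, hot: Set[int], cold: Set[int],
               predict: Callable[[Vector], Set[int]]) -> Vector:
    active = predict(x)                                   # which neurons will fire?
    gpu_part = partial_ffn(hot, active, w_in, w_out, x)   # would run on the GPU
    cpu_part = partial_ffn(cold, active, w_in, w_out, x)  # would run on the CPU
    return [g + c for g, c in zip(gpu_part, cpu_part)]    # merge the partial results


def dense_ffn(x: Vector, w_in: Matrix, w_out: Matrix) -> Vector:
    """Reference: evaluate every neuron, with no prediction and no split."""
    everyone = set(range(len(w_in)))
    return partial_ffn(everyone, everyone, w_in, w_out, x)
```

With an accurate predictor, the hybrid result matches the dense computation; the savings come from never touching neurons predicted to be inactive and from keeping only the frequently used weights in scarce GPU memory.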

What’s next? PowerInfer approaching the performance of a top-tier server GPU like the A100 while running on a consumer-grade RTX 4090 is promising: it suggests that sophisticated LLMs could become accessible to individual users and small enterprises. With further refinement, we can expect broader adoption of LLMs across many fields, which will likely spur further innovation in both model development and hardware optimization for AI workloads.

Papers of the Week:

GraphGPT: Graph Instruction Tuning for Large Language Models

A Challenger to GPT-4V? Early Explorations of Gemini in Visual Expertise

A Revisit of Fake News Dataset with Augmented Fact-checking by ChatGPT

How to Prune Your Language Model: Recovering Accuracy on the "Sparsity May Cry" Benchmark