👁️ Agent-ception: When Agents Are Creating Agents

And NVIDIA's new approach to model training

Foreword

I've started a new format called "Executive Summaries", where I'll take the time to guide you through particularly relevant research papers in a concise yet thorough manner. As always, I'll focus on what's most important without overwhelming you with unnecessary details.

The name "Executive Summaries" was chosen because I believe that everyone supporting me on this journey deserves the best possible overview delivered quickly and effectively. Whenever a particularly interesting paper is published and I recognize its significance, I'll promptly dive into it and provide a comprehensive summary for you.

This will be the first paid feature of my Substack, and I’ll be experimenting with both format and frequency. The first executive summary will be sent out tomorrow, including a preview for free readers.

Last but not least, a big thank you to all of my subscribers - especially those who already support me with an upgraded subscription. Every little bit helps and enables me to invest more time into creating content for you!

In this issue:

NVIDIA’s new approach to model training

Survey on graph-based retrieval

Agent-ception: when agents are creating agents

1. LLM Pruning and Distillation in Practice: The Minitron Approach

Watching: Pruning and Distillation (paper)

What problem does it solve? Large Language Models (LLMs) have shown remarkable performance across various natural language tasks. However, their massive size poses challenges in terms of computational resources and deployment costs. Model compression techniques, such as pruning and distillation, aim to reduce the model size while maintaining performance, making LLMs more accessible and efficient.

How does it solve the problem? The researchers explore two pruning strategies to compress the Llama 3.1 8B and Mistral NeMo 12B models. Depth pruning reduces the number of layers in the model, while joint hidden/attention/MLP (width) pruning reduces the dimensionality of the hidden states, attention mechanisms, and MLPs. The pruned models are then fine-tuned using distillation, where a larger "teacher" model transfers its knowledge to a smaller "student" model. The authors also found that when the original training data is not available, lightly fine-tuning the teacher model on the distillation dataset first improves the overall performance.
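To make these ideas a bit more tangible, here is a minimal sketch in plain Python. It is not the paper's implementation: the function names, the toy importance scores, and the `temperature` parameter are my own assumptions, and the loss shown (KL divergence between teacher and student output distributions) is one common way to set up logit distillation.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) for a single token position (assumed loss choice)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)

def depth_prune(layers, start, count):
    """Depth pruning: drop `count` contiguous layers beginning at index `start`."""
    return layers[:start] + layers[start + count:]

def width_prune(importance, keep):
    """Width pruning: indices of the `keep` highest-scoring units, in original order."""
    ranked = sorted(range(len(importance)), key=lambda i: importance[i], reverse=True)
    return sorted(ranked[:keep])

# Toy usage: an 8-layer "model" loses two layers, and a 4-unit MLP keeps two units.
layers = [f"layer_{i}" for i in range(8)]
student_layers = depth_prune(layers, start=4, count=2)
kept_units = width_prune([0.1, 0.9, 0.5, 0.7], keep=2)

# The student is then trained to push this loss toward zero.
loss = kl_distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

In a real setup, the importance scores would come from activations on a calibration dataset, and the loss would be averaged over all token positions in a batch.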

What's next? The compressed models, particularly the Mistral-NeMo-Minitron-8B (MN-Minitron-8B) derived from Mistral NeMo 12B, demonstrate state-of-the-art performance while being significantly smaller than their original counterparts. The open-sourcing of these compressed model weights on Hugging Face with a permissive license will enable researchers and practitioners to build upon this work and deploy efficient LLMs in various applications. Future research could explore the combination of pruning and distillation with other compression techniques, such as quantization, to further reduce model size without compromising performance.

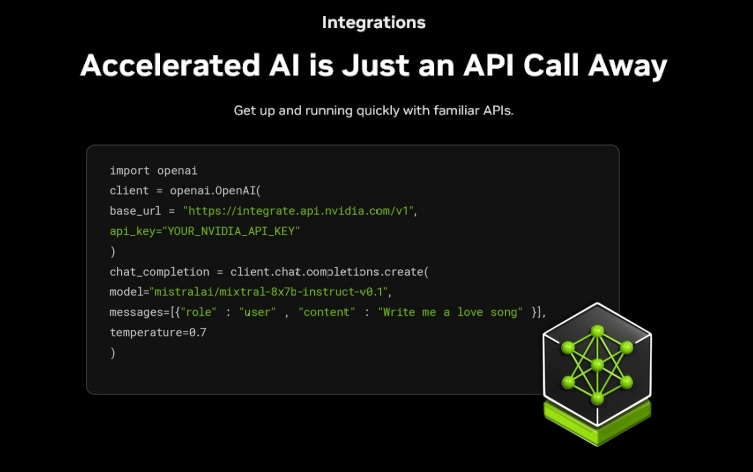

If you haven’t heard about NVIDIA NIM already, it’s their very own model catalog that allows you to select state-of-the-art models, optimized and hosted by NVIDIA.

Using NVIDIA NIM is very intuitive, as it integrates directly with popular toolkits such as the OpenAI library. They’re also committed to building an end-to-end ecosystem that can carry you through the whole cycle of a project, from start to finish.

There’s a lot of stuff coming that I can’t talk about yet, but if you want to get familiar with their solutions, there has never been a better time than now.

If you’re a sponsor and you want to see your product here, contact me on LinkedIn or directly on Substack.

2. Graph Retrieval-Augmented Generation: A Survey

Watching: Graph RAG (paper)

What problem does it solve? Retrieval-Augmented Generation (RAG) has emerged as a promising approach to enhance the performance of Large Language Models (LLMs) without the need for retraining. By leveraging external knowledge bases, RAG addresses common issues faced by LLMs, such as generating false or inconsistent information ("hallucination"), lacking domain-specific knowledge, and relying on outdated information. However, effectively utilizing the complex relationships among entities in databases remains a challenge for traditional RAG systems.

How does it solve the problem? Graph-based RAG (Graph RAG) addresses the limitations of traditional RAG by incorporating structural information across entities, enabling more precise and comprehensive retrieval of relevant knowledge. By capturing the relational knowledge within the database, Graph RAG facilitates the generation of more accurate and context-aware responses. The Graph RAG workflow consists of three key stages: Graph-Based Indexing, which organizes the knowledge base into a graph structure; Graph-Guided Retrieval, which utilizes the graph to efficiently retrieve relevant information; and Graph-Enhanced Generation, which incorporates the retrieved knowledge into the language model's output.
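The three stages can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not a method from the survey: the knowledge base is a list of (head, relation, tail) triples, retrieval is a simple breadth-first expansion, the query's entities are assumed to be already identified, and all function names are hypothetical.

```python
from collections import defaultdict, deque

def build_graph_index(triples):
    """Graph-Based Indexing: index each triple under both of its entities."""
    graph = defaultdict(list)
    for head, relation, tail in triples:
        graph[head].append((head, relation, tail))
        graph[tail].append((head, relation, tail))
    return graph

def retrieve_subgraph(graph, seed_entities, max_hops=1):
    """Graph-Guided Retrieval: collect triples within `max_hops` of the seed entities."""
    visited = set(seed_entities)
    queue = deque((entity, 0) for entity in seed_entities)
    facts = []
    while queue:
        entity, depth = queue.popleft()
        if depth >= max_hops:
            continue
        for triple in graph.get(entity, []):
            if triple not in facts:
                facts.append(triple)
            head, _, tail = triple
            neighbor = tail if head == entity else head
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, depth + 1))
    return facts

def build_prompt(question, facts):
    """Graph-Enhanced Generation: serialize the retrieved facts into the LLM prompt."""
    lines = [f"- {head} {relation} {tail}" for head, relation, tail in facts]
    return "Facts:\n" + "\n".join(lines) + f"\n\nQuestion: {question}\nAnswer:"

# Toy knowledge base built from statements in this issue.
triples = [
    ("MN-Minitron-8B", "is derived from", "Mistral NeMo 12B"),
    ("MN-Minitron-8B", "is published on", "Hugging Face"),
    ("Mistral NeMo 12B", "was compressed with", "pruning and distillation"),
]
graph = build_graph_index(triples)
facts = retrieve_subgraph(graph, ["MN-Minitron-8B"], max_hops=2)
prompt = build_prompt("How was MN-Minitron-8B created?", facts)
```

The resulting `prompt` would then be passed to the language model. With `max_hops=2`, the retriever also picks up the fact about Mistral NeMo 12B, which a lookup on the query entity alone would miss. That is the kind of relational knowledge Graph RAG is meant to surface.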

What's next? Future directions may include developing more advanced graph representation techniques to capture complex relationships, exploring alternative retrieval strategies to improve efficiency and effectiveness, and investigating new training methods to better integrate the retrieved knowledge into the language model. Additionally, expanding the application of Graph RAG to a wider range of downstream tasks and domains, as well as establishing standardized evaluation methodologies, will be crucial for advancing the field and facilitating adoption in real-world scenarios.

3. Automated Design of Agentic Systems

Watching: ADAS (paper)

What problem does it solve? While Foundation Models have shown impressive capabilities, they are often used as modules within larger agentic systems that are hand-designed by researchers. This manual design process can be time-consuming and may not always result in the most optimal or robust solutions. The field of Automated Design of Agentic Systems (ADAS) aims to address this issue by automatically creating powerful agentic system designs, potentially discovering novel building blocks and architectures that surpass human-designed agents.

How does it solve the problem? The researchers propose a promising approach within ADAS where agents are defined in code, and new agents are automatically discovered by a meta agent that programs increasingly better agents. Because programming languages are Turing complete, this approach can in principle learn any possible agentic system, including novel prompts, tool use, control flows, and their combinations. The authors present a simple yet effective algorithm called Meta Agent Search, where a meta agent iteratively programs interesting new agents based on an ever-growing archive of previous discoveries.
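The core loop is easy to sketch. The following Python snippet is a simplified illustration and not the authors' code: `propose` stands in for the LLM-based meta agent (here it just cycles through two hard-coded candidates), `evaluate` is a made-up toy benchmark, and all names are hypothetical.

```python
def meta_agent_search(propose, evaluate, seed_agents, iterations):
    """Grow an archive of (agent, score) pairs, one newly proposed agent per iteration."""
    archive = [(agent, evaluate(agent)) for agent in seed_agents]
    for _ in range(iterations):
        # In ADAS, this step would be a foundation model writing new agent code
        # after reading the archive of earlier agents and their scores.
        candidate = propose(archive)
        archive.append((candidate, evaluate(candidate)))
    return archive

def evaluate(agent):
    """Toy benchmark (assumption): fraction of inputs the agent squares correctly."""
    tasks = [(2, 4), (3, 9), (5, 25)]
    return sum(agent(x) == expected for x, expected in tasks) / len(tasks)

def propose(archive):
    """Stand-in for the meta agent: returns the next hard-coded candidate."""
    candidates = [lambda x: x + x, lambda x: x * x]
    return candidates[(len(archive) - 1) % len(candidates)]

# Start from a trivial seed agent and let the loop run for two iterations.
archive = meta_agent_search(propose, evaluate, seed_agents=[lambda x: x], iterations=2)
scores = [score for _, score in archive]
best_agent, best_score = max(archive, key=lambda pair: pair[1])
```

The point of the archive is that every proposal can build on everything discovered so far. In this sketch the stand-in ignores the archive's contents, whereas a real meta agent would condition its next program on them.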

What's next? The experiments conducted across multiple domains, including coding, science, and math, demonstrate that Meta Agent Search can progressively invent agents with novel designs that significantly outperform state-of-the-art hand-designed agents. Remarkably, these invented agents maintain superior performance even when transferred across domains and models, showcasing their robustness and generality. While the development of such systems must be done safely, this research highlights the potential of an exciting new direction in automatically designing increasingly powerful agentic systems.

Papers of the Week:

RAGChecker: A Fine-grained Framework for Diagnosing Retrieval-Augmented Generation

StructuredRAG: JSON Response Formatting with Large Language Models

LLM Pruning and Distillation in Practice: The Minitron Approach

Training Language Models on the Knowledge Graph: Insights on Hallucinations and Their Detectability

Flexora: Flexible Low Rank Adaptation for Large Language Models

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone / Discover the New Multi-Lingual, High-Quality Phi-3.5 SLMs