📚 A New Direction for Neural Networks

From Multilayer Perceptrons to interpretable Kolmogorov-Arnold Networks

In this issue:

A potential alternative to MLPs

Not all layers are created equal

Going beyond single token prediction

1. KAN: Kolmogorov-Arnold Networks

What problem does it solve? Multilayer Perceptrons (MLPs) have been a fundamental building block of deep learning architectures for decades. Despite their widespread use, they have limitations in both accuracy and interpretability. Each node applies a fixed activation function, which can restrict how well the network captures complex patterns and relationships in data. MLPs are also hard to interpret: it is difficult to trace how they arrive at a prediction, which matters in domains such as healthcare and finance.

How does it solve the problem? Kolmogorov-Arnold Networks (KANs) address these limitations by placing learnable activation functions on edges instead of fixed activation functions on nodes. Every linear weight is replaced by a univariate function parametrized as a spline, which lets KANs capture more complex patterns and achieve better accuracy than MLPs with smaller networks. KANs also exhibit faster neural scaling laws: their error falls more quickly as parameters are added, so they can reach a given accuracy with fewer parameters than MLPs. On the interpretability side, KANs can be visualized intuitively and are easy for human users to interact with, which lets them assist scientists in discovering mathematical and physical laws.
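To make the edge-function idea concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the names `make_edge_function` and `ToyKANLayer` are hypothetical, and each edge uses piecewise-linear interpolation over a fixed grid as a simplified stand-in for the splines described above. Each output node just sums the values of its incoming edge functions.

```python
import bisect
import random


def make_edge_function(grid, values):
    """Build a univariate function phi(x) from learnable knot values.

    Assumption: piecewise-linear interpolation, a simplification of the
    spline parametrization. Inputs outside the grid are clamped.
    """
    def phi(x):
        if x <= grid[0]:
            return values[0]
        if x >= grid[-1]:
            return values[-1]
        k = bisect.bisect_right(grid, x) - 1          # left knot index
        t = (x - grid[k]) / (grid[k + 1] - grid[k])   # position in segment
        return (1 - t) * values[k] + t * values[k + 1]
    return phi


class ToyKANLayer:
    """Hypothetical KAN-style layer: one learnable function per edge."""

    def __init__(self, n_in, n_out, grid, seed=0):
        rng = random.Random(seed)
        self.grid = grid
        # coef[j][i] holds the knot values of the edge from input i to output j.
        self.coef = [[[rng.uniform(-1.0, 1.0) for _ in grid]
                      for _ in range(n_in)]
                     for _ in range(n_out)]

    def forward(self, x):
        # Output j is the sum over inputs i of phi_{j,i}(x_i).
        return [
            sum(make_edge_function(self.grid, self.coef[j][i])(x[i])
                for i in range(len(x)))
            for j in range(len(self.coef))
        ]


grid = [-1.0, 0.0, 1.0]
layer = ToyKANLayer(n_in=2, n_out=1, grid=grid)
layer.coef[0][0] = [-1.0, 0.0, 1.0]   # this edge behaves like phi(x) = x
layer.coef[0][1] = [1.0, 0.0, 1.0]    # this edge behaves like phi(x) = |x|
print(layer.forward([0.5, -0.5]))     # [1.0]
```

In an MLP the edge from input i to output j would carry a single scalar weight. Here it carries a whole list of knot values, and training would adjust those values so that the shape of each edge function is learned.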

What's next? Further research can explore the application of KANs in various domains, such as computer vision, natural language processing, and reinforcement learning. The interpretability aspect of KANs can be leveraged to develop more transparent and explainable AI systems, which is crucial for building trust and accountability. Additionally, the collaboration between KANs and human experts in discovering mathematical and physical laws can lead to new insights and advancements in various scientific fields.

2. SPAFIT: Stratified Progressive Adaptation Fine-tuning for Pre-trained Large Language Models

Watching: SPAFIT (paper)

What problem does it solve? Fine-tuning large language models demands significant computational resources and storage. The Transformer architecture used in these models has also been shown to suffer from catastrophic forgetting and overparameterization. Parameter-efficient fine-tuning (PEFT) methods were developed to address these issues, but they typically apply the same adjustments across all layers of the model, which may not be the most efficient approach.

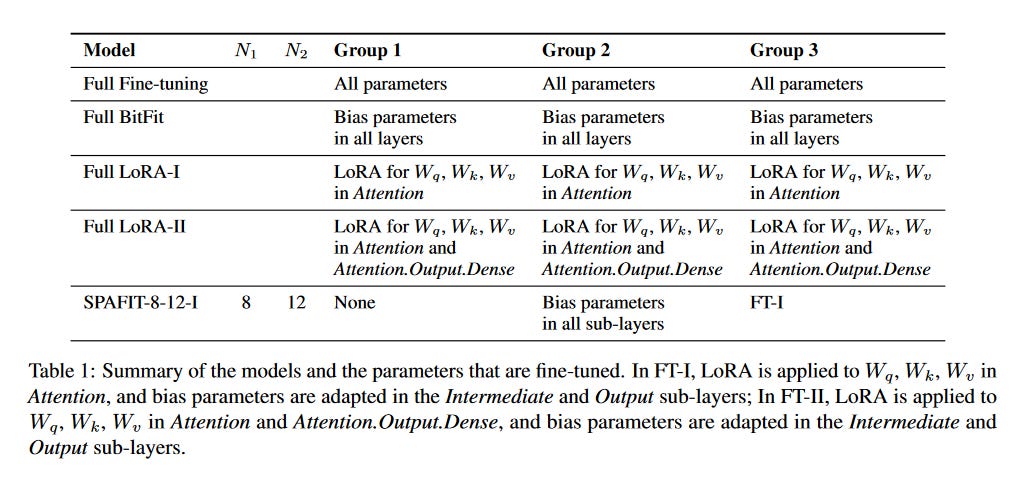

How does it solve the problem? Stratified Progressive Adaptation Fine-tuning (SPAFIT) is a novel PEFT method that takes advantage of the localization of different types of linguistic knowledge within specific layers of the model. By strategically focusing on these layers, SPAFIT can achieve better performance while fine-tuning only a fraction of the parameters compared to other PEFT methods. This targeted approach not only reduces the computational resources needed but also helps to mitigate the issues of catastrophic forgetting and overparameterization.
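The layer-wise idea can be illustrated with a small parameter-counting sketch. Everything specific here is an assumption made for illustration and is not taken from the summary above: the three group names, the cut points, the rule that each group trains (nothing, biases only, biases plus a small adapter), and the per-layer parameter counts. The names `LayerSpec`, `assign_groups`, and `trainable_fraction` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayerSpec:
    weight_params: int    # parameters in the layer's weight matrices
    bias_params: int      # parameters in the layer's bias vectors
    adapter_params: int   # parameters a small add-on adapter would introduce


def assign_groups(n_layers, first_cut, second_cut):
    """Split layer indices into three strata (illustrative assumption)."""
    groups = []
    for i in range(n_layers):
        if i < first_cut:
            groups.append("frozen")
        elif i < second_cut:
            groups.append("bias_only")
        else:
            groups.append("bias_plus_adapter")
    return groups


def trainable_params(layer, group):
    """Number of parameters updated in one layer under its group's rule."""
    if group == "frozen":
        return 0
    if group == "bias_only":
        return layer.bias_params
    return layer.bias_params + layer.adapter_params


def trainable_fraction(layers, groups):
    """Trainable parameters divided by the base model's parameter count."""
    trainable = sum(trainable_params(l, g) for l, g in zip(layers, groups))
    total = sum(l.weight_params + l.bias_params for l in layers)
    return trainable / total


# Made-up sizes: 12 identical layers, cut after layers 4 and 8.
layers = [LayerSpec(weight_params=1000, bias_params=10, adapter_params=40)
          for _ in range(12)]
groups = assign_groups(len(layers), first_cut=4, second_cut=8)
frac = trainable_fraction(layers, groups)
print(f"{frac:.2%}")   # 1.98%
```

The point of the sketch is only that treating layers differently, rather than adapting all of them uniformly, shrinks the set of parameters that must be updated and stored.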

What's next? The success of SPAFIT on the GLUE benchmark tasks demonstrates the potential of this method for efficient fine-tuning of large language models. Further research could apply SPAFIT to other tasks and datasets and investigate which kinds of linguistic knowledge are localized in which layers of the model.

3. Better & Faster Large Language Models via Multi-token Prediction

Watching: Multi-token Prediction (paper)

What problem does it solve? Language Models (LMs) are usually trained using a next-token prediction objective, meaning that at each step, the model tries to predict the next token in the sequence. This approach, while effective, may not be the most efficient way to learn from the training data. By only predicting one token at a time, the model might miss out on learning longer-term dependencies and patterns in the data.

How does it solve the problem? The proposed method trains the model to predict multiple future tokens at once, using independent output heads that operate on top of a shared model trunk. By considering multi-token prediction as an auxiliary training task, the model can learn more efficiently from the same amount of data. This approach leads to improved downstream capabilities without increasing the training time. The benefits are more pronounced for larger model sizes and generative tasks like coding, where the multi-token prediction models consistently outperform strong baselines.
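The shared-trunk, multiple-heads layout can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the paper's architecture: `ToyMultiTokenModel` is a hypothetical name, the "trunk" here only sees the current token (a real trunk would be a Transformer over the whole prefix), the weights are random and untrained, and averaging the per-head cross-entropy terms is one simple way to combine them.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class ToyMultiTokenModel:
    """Hypothetical model: one shared trunk, n_future independent heads."""

    def __init__(self, vocab_size, hidden_size, n_future, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)]
                    for _ in range(rows)]

        self.embed = rand(vocab_size, hidden_size)
        self.trunk = rand(hidden_size, hidden_size)
        # Head k predicts the token k + 1 positions ahead.
        self.heads = [rand(vocab_size, hidden_size) for _ in range(n_future)]

    def trunk_forward(self, token):
        h = matvec(self.trunk, self.embed[token])
        return [math.tanh(v) for v in h]

    def loss(self, tokens):
        n = len(self.heads)
        total, count = 0.0, 0
        for t in range(len(tokens) - n):
            h = self.trunk_forward(tokens[t])   # computed once, shared by all heads
            for k, head in enumerate(self.heads):
                probs = softmax(matvec(head, h))
                target = tokens[t + 1 + k]      # the (k + 1)-th future token
                total += -math.log(probs[target])
                count += 1
        return total / count


model = ToyMultiTokenModel(vocab_size=5, hidden_size=8, n_future=3)
tokens = [0, 1, 2, 3, 4, 0]
print(model.loss(tokens))   # close to log(5), since the weights are untrained
```

Setting `n_future=1` recovers ordinary next-token prediction, which makes the multi-token objective easy to compare against the baseline in the same code.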

What's next? The gains in sample efficiency and generative capability open up new avenues for research. It would be interesting to see how the approach scales to even larger models and to other kinds of data, such as multilingual corpora or domain-specific datasets. The additional output heads can also be used to speed up inference, which could matter for real-world applications where low latency is crucial, such as chatbots or real-time translation systems.

Papers of the Week:

BiomedRAG: A Retrieval Augmented Large Language Model for Biomedicine

Small Language Models Need Strong Verifiers to Self-Correct Reasoning

Automated Data Visualization from Natural Language via Large Language Models: An Exploratory Study